Artificial Intelligence News - Page 8

OpenAI CEO Sam Altman was asked if he trusts himself with the power of AGI

Sam Altman, the CEO of OpenAI, the company behind GPT-4, ChatGPT, Sora, and many other industry-leading AI technologies, sat down for an interview with Lex Fridman to discuss a range of artificial intelligence topics, including the impressive creations being made at OpenAI.

Lex Fridman asked Altman whether he trusts himself with the power of leading a company that could potentially create the first Artificial General Intelligence (AGI), an AI system capable of human-level intelligence and beyond. Altman responded honourably, saying he believes it's important that "I nor any other one person have total control over OpenAI, or over AGI, and I think you want a robust governance system."

The OpenAI CEO further explained that he "continues to not want super voting control over OpenAI," adding, "I continue to think that no company should be making these decisions and that we really need governments to put rules of the road in place."

YouTube rolls out safeguards to battle the rise of AI generated video

YouTube has announced a new rule on its blog designed to protect users from being deceived by creators who try to pass off content made with the help of AI-powered tools as authentic.

With the unveiling of OpenAI's Sora tool, which creates photorealistic video from user text prompts, and the popularity of ChatGPT, it isn't a stretch to predict that tools like Sora will prove popular once they are fully developed and released.

Some of the example videos published by OpenAI show tell-tale signs of AI generation, but most would not raise alarm bells at a glance, especially to an untrained eye, further blurring the line between what's real and what's fake on the internet.


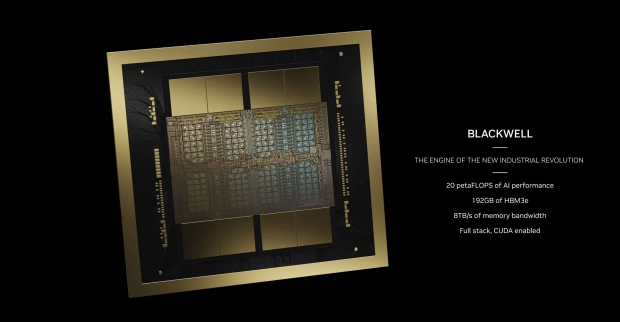

OpenAI, Microsoft, Amazon, Meta, Google, Tesla, and more are all on the NVIDIA Blackwell train

NVIDIA has unveiled its next-gen Blackwell AI GPU, which features 208 billion transistors, 192GB of super-fast HBM3E memory, a cutting-edge custom TSMC 4NP process, and groundbreaking new networking that sees NVIDIA NVLink deliver 1.8TB/s bidirectional throughput per GPU.

The company also announced its new NVIDIA GB200 Grace Blackwell Superchip, which connects two NVIDIA B200 Tensor Core GPUs to the NVIDIA Grace CPU "over a 900GB/s ultra-low-power NVLink chip-to-chip interconnect." With its powerful next-gen AI hardware arriving in the middle of the AI boom, it's unsurprising that NVIDIA also announced a long list of Blackwell partners.

NVIDIA writes, "Among the many organizations expected to adopt Blackwell are Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI." Even though most, if not all, of these companies are developing their own AI chips and hardware for generative AI (which is used in everything from scientific research to medicine and chatbots), Blackwell will drive many of the advances over the next few years.

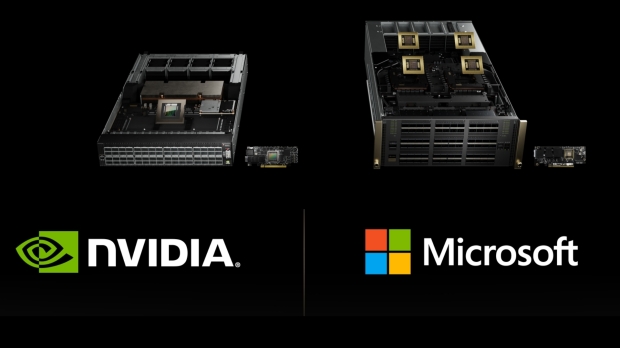

Microsoft and NVIDIA to integrate generative AI and Omniverse tech in Azure and Microsoft 365

It's been a massive day for AI news, with NVIDIA's premier GTC 2024 AI conference underway. Earlier today, the company unveiled its next-gen Blackwell AI GPU, an unprecedented AI monster with 208 billion transistors and 192GB of super-fast HBM3E memory.

NVIDIA's first multi-die GPU is built on a cutting-edge TSMC 4NP process. It allows organizations to run real-time generative AI on "trillion-parameter large language models" at up to 25X less cost and energy consumption than its Hopper predecessor. Throw in the latest version of NVIDIA NVLink, which delivers 1.8TB/s bidirectional throughput per GPU, and it's no wonder everyone is signing up to join the Blackwell family, including Microsoft.

Alongside the big Blackwell reveal, NVIDIA and Microsoft announced that Microsoft Azure will adopt NVIDIA Grace Blackwell Superchips to help accelerate customer and first-party (Microsoft's own) AI offerings, including Microsoft 365, home to apps such as Microsoft Word, PowerPoint, Excel, and OneNote. NVIDIA GPUs and NVIDIA Triton Inference Server will help power Microsoft Copilot for Microsoft 365.

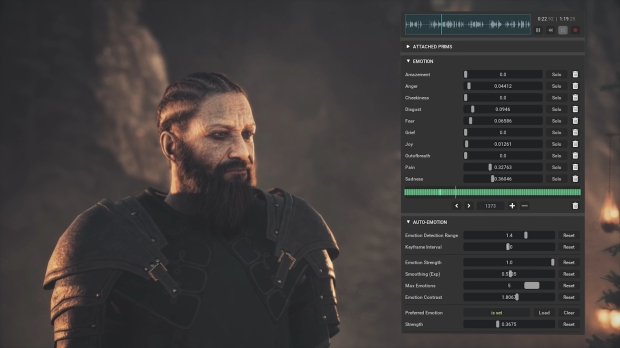

NVIDIA Audio2Face uses AI to generate lip-syncing and facial animation, showcased in two games

Localization is a big deal in gaming, where a game's dialogue and presentation are recorded in various languages to reach a global audience. Much as Netflix dubs its original programming into multiple languages, game localization is a complex process that requires accurately translating dialogue and text, maintaining dramatic or comedic tones, and then re-recording dialogue with voice actors from different countries.

Where games differ from movies or television dramas is that every performance is digital and completely malleable. That's why Sony's PlayStation-exclusive Ghost of Tsushima (coming soon to PC) can offer both English and native Japanese language options with full lip-syncing and correct facial animation.

Although it's possible to do this for dozens of languages, Ghost of Tsushima limits full lip-syncing to two language options because of the complexity of the task and the animation involved. This is where generative AI, specifically NVIDIA's Audio2Face technology, will step in.

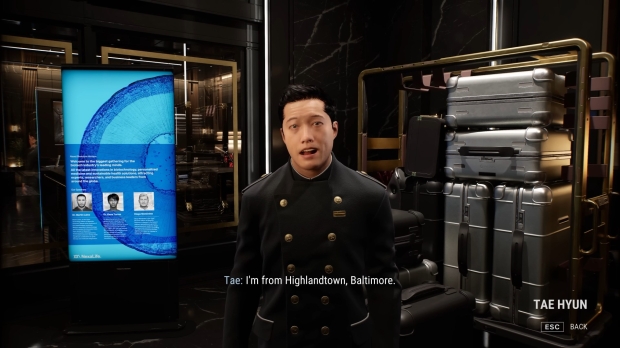

NVIDIA's Covert Protocol tech demo has you become a detective investigating AI Digital Humans

At GDC 2024, NVIDIA presented a new tech demo for a theoretical detective game, Covert Protocol, created in collaboration with Inworld. If you recall NVIDIA's recent AI collabs, which created cyberpunk-style tech demos featuring AI avatars you can interact with, this takes it all one step further. It presents an old-school adventure game where you're talking to characters to solve a mystery in a brand-new way.

In Covert Protocol, you play a private detective exploring a realistic environment, speaking to 'digital humans' as you piece together key information. The game is powered by the Inworld AI Engine, which makes full use of NVIDIA ACE services (the technology we've been following over the past year) to ensure that no two playthroughs are the same.

"This level of AI-driven interactivity and player agency opens up new possibilities for emergent gameplay," NVIDIA writes. "Players must think on their feet and adapt their strategies in real-time to navigate the intricacies of the game world."

NVIDIA creates Earth-2 digital twin: generative AI to simulate, visualize weather and climate

NVIDIA isn't just shaking up the GPU and AI GPU market with its Blackwell chips; it also announced Earth-2 today during its GPU Technology Conference (GTC).

NVIDIA announced its new Earth-2 climate digital twin cloud platform, which lets people simulate and visualize weather and climate at scales never seen before. Earth-2's new cloud APIs are available on NVIDIA DGX Cloud, allowing virtually anyone to create AI-powered emulations for interactive, high-resolution simulations ranging from the global atmosphere and localized cloud cover to typhoons and mega-storms.

The new Earth-2 APIs offer AI models built on CorrDiff, a new NVIDIA generative AI model that uses state-of-the-art diffusion modeling. CorrDiff can generate images at 12.5x higher resolution than current numerical models, 1000x faster and 3000x more energy efficiently.
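The speed and energy-efficiency ratios for CorrDiff can be read together. Assuming both are measured against the same numerical-model run (the announcement does not spell this out), and writing $T$ for runtime, $E$ for energy, and $P = E/T$ for average power, the implied ratio of power draw works out as follows:

```latex
\frac{T_{\text{CorrDiff}}}{T_{\text{numerical}}} = \frac{1}{1000}, \qquad
\frac{E_{\text{CorrDiff}}}{E_{\text{numerical}}} = \frac{1}{3000}
\quad\Longrightarrow\quad
\frac{P_{\text{CorrDiff}}}{P_{\text{numerical}}}
= \frac{E_{\text{CorrDiff}} / T_{\text{CorrDiff}}}{E_{\text{numerical}} / T_{\text{numerical}}}
= \frac{1/3000}{1/1000} = \frac{1}{3}
```

On that reading, most of the 3000x energy saving comes from finishing 1000x sooner, with the remaining factor of three coming from lower average power draw.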

GIGABYTE teases DGX, Superchips, PCIe cards based on NVIDIA's new Blackwell B200 AI GPUs

GIGABYTE is showing off its next-gen compact GPU cluster scalable unit: a new rack with GIGABYTE G593-SD2 servers, which have NVIDIA HGX H100 8-GPU designs and Intel 5th Gen Xeon Scalable processors inside.

The company has said it will support NVIDIA's new Blackwell GPU that succeeds Hopper, with enterprise servers "ready for the market according to NVIDIA's production schedule". The new NVIDIA B200 Tensor Core GPU for generative AI and accelerated computing will have "significant benefits," says GIGABYTE, especially in LLM inference workloads.

GIGABYTE will have products for HGX baseboards, Superchips, and PCIe cards with more details to be provided "later this year," adds the company.

NVIDIA's new Blackwell-based DGX SuperPOD: ready for trillion-parameter scale for generative AI

NVIDIA has just revealed its new Blackwell B200 GPU alongside new DGX B200 systems, which it positions as the foundation for future AI supercomputing platforms for AI model training, fine-tuning, and inference.

The new NVIDIA DGX B200 is a sixth-generation system that's air-cooled in the traditional rack-mounted DGX design used worldwide. Inside, each DGX B200 pairs 8 x NVIDIA Blackwell GPUs with 2 x Intel 5th Gen Xeon CPUs.

Each DGX B200 system features up to 144 petaFLOPS of AI performance and an insane 1.4TB of GPU memory (HBM3E) with a bonkers 64TB/sec of memory bandwidth, driving 15x faster real-time inference for trillion-parameter models than the previous-gen Hopper GPU architecture.
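The DGX B200 totals can be sanity-checked with a few lines of arithmetic. This minimal sketch splits the system figures quoted above evenly across the eight GPUs and estimates whether the raw weights of a hypothetical one-trillion-parameter model fit in 1.4TB at different numeric precisions. The even split, the bytes-per-parameter values, and ignoring KV cache and activations are all simplifying assumptions, and the constant and function names are illustrative only. Because the 1.4TB figure is rounded, the per-GPU memory result is approximate.

```python
# Back-of-the-envelope check on the DGX B200 figures quoted above.
# Assumption: system totals are shared evenly across the eight GPUs.

NUM_GPUS = 8
TOTAL_HBM_TB = 1.4          # total GPU memory (HBM3E), in terabytes (rounded)
TOTAL_BANDWIDTH_TBPS = 64   # aggregate memory bandwidth, in TB/s


def per_gpu(total: float, count: int = NUM_GPUS) -> float:
    """Split an aggregate system figure evenly across GPUs."""
    return total / count


def weights_size_tb(params: float, bytes_per_param: float) -> float:
    """Approximate weight footprint in TB (1 TB = 1e12 bytes).

    Ignores KV cache, activations, and framework overhead.
    """
    return params * bytes_per_param / 1e12


if __name__ == "__main__":
    print(f"Bandwidth per GPU: {per_gpu(TOTAL_BANDWIDTH_TBPS):.1f} TB/s")
    print(f"Memory per GPU: ~{per_gpu(TOTAL_HBM_TB) * 1000:.0f} GB")

    params = 1e12  # hypothetical trillion-parameter model
    for name, nbytes in [("FP16", 2.0), ("FP8", 1.0), ("FP4", 0.5)]:
        size = weights_size_tb(params, nbytes)
        verdict = "fits" if size <= TOTAL_HBM_TB else "does not fit"
        print(f"{name}: {size:.1f} TB of weights, {verdict} in {TOTAL_HBM_TB} TB")
```

On these assumptions, 16-bit weights alone (about 2TB) would not fit in a single DGX B200, while 8-bit and 4-bit weights would, and the 64TB/sec aggregate works out to 8TB/sec per GPU.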

NVIDIA GB200 Grace Blackwell Superchip: 864GB fast memory, 16TB/sec memory bandwidth

NVIDIA has finally announced its new Blackwell GPU, along with DGX systems and Superchip platforms built around the Blackwell B200 AI GPU, with the Superchip also pairing it with NVIDIA's Grace CPU.

The new NVIDIA GB200 Grace Blackwell Superchip is a processor for trillion-parameter-scale generative AI, with 40 petaFLOPS of AI performance, a whopping 864GB of fast memory, and an even more incredible 16TB/sec of memory bandwidth.

Each GB200 Grace Blackwell Superchip features 2 x B200 AI GPUs and a single Grace CPU with 72 Arm-based Neoverse V2 cores. The 864GB memory pool combines 384GB of HBM3E on the two GPUs with the Grace CPU's LPDDR5X memory, and the 16TB/sec of memory bandwidth is joined by a super-fast 3.6TB/sec NVLink connection.
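As a rough consistency check, this sketch derives per-GPU shares from the GB200 headline figures and compares them with the per-GPU Blackwell numbers quoted at the top of this page (192GB of HBM3E and 1.8TB/s of NVLink per GPU). It assumes the Superchip totals are simple sums over its two B200 GPUs, which is an assumption of this sketch rather than something stated here, and the constant names are illustrative. On that assumption the GPUs account for 384GB of HBM3E, so 480GB of the 864GB figure comes from memory other than GPU HBM3E, which lines up with the Grace CPU's LPDDR5X in NVIDIA's published specifications.

```python
# Cross-check of the GB200 Superchip figures against the per-GPU
# Blackwell numbers quoted earlier on this page.
# Assumption: Superchip totals are simple sums over its two B200 GPUs.

B200_HBM_GB = 192            # HBM3E per Blackwell GPU, in GB
B200_NVLINK_TBPS = 1.8       # NVLink bidirectional throughput per GPU, in TB/s
GPUS_PER_SUPERCHIP = 2

SUPERCHIP_MEMORY_GB = 864        # headline memory figure for GB200
SUPERCHIP_BANDWIDTH_TBPS = 16    # headline memory bandwidth for GB200
SUPERCHIP_PFLOPS = 40            # headline AI performance for GB200


def superchip_breakdown() -> dict:
    """Derive GPU totals and per-GPU shares from the quoted figures."""
    hbm_total = B200_HBM_GB * GPUS_PER_SUPERCHIP
    return {
        "hbm_total_gb": hbm_total,
        # Remainder of the headline figure not covered by GPU HBM3E.
        "non_hbm_gb": SUPERCHIP_MEMORY_GB - hbm_total,
        "nvlink_total_tbps": B200_NVLINK_TBPS * GPUS_PER_SUPERCHIP,
        "bandwidth_per_gpu_tbps": SUPERCHIP_BANDWIDTH_TBPS / GPUS_PER_SUPERCHIP,
        "pflops_per_gpu": SUPERCHIP_PFLOPS / GPUS_PER_SUPERCHIP,
    }


if __name__ == "__main__":
    for key, value in superchip_breakdown().items():
        print(f"{key}: {value:g}")
```

The derived 3.6TB/s NVLink total matches the Superchip figure, and the 8TB/s per-GPU memory bandwidth matches what the DGX B200's 64TB/sec works out to across eight GPUs.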