Artificial Intelligence News - Page 1

Runway busted stealing 100,000+ YouTube videos for AI training

Artificial intelligence-powered tools and applications are certainly impressive in what they can generate, but where AI companies get the data to train these models often remains ambiguous or completely hidden from the public.

A report published only last week revealed that Apple, NVIDIA, Anthropic, and others used a public dataset containing hundreds of thousands of YouTube video transcripts to train their AI models. While Apple, NVIDIA, and others weren't the ones to download the transcripts, the data was still used to train AI models, which violates YouTube's Terms of Service (ToS). Earlier in the year, YouTube's CEO stated that downloading any data from its platform is a violation of its ToS.

Now, a new report from 404 Media states that the popular AI video generator company Runway trained its Gen-3 Alpha model on thousands of YouTube videos without obtaining permission from the creators or YouTube. The report also states the company used pirated content for AI model training. 404 Media was sent a spreadsheet that lists how many videos were taken from each source, and judging from the list, the sources are extensive and cover a large variety of channels.

NVIDIA AI Foundry helps companies train and develop custom AI 'supermodels'

NVIDIA AI Foundry is a new enterprise service where NVIDIA will help companies build AI 'Supermodels' - custom Llama 3.1 generative AI models built with NVIDIA software and expertise. These AI 'Supermodels' are custom-trained for specific industry use cases using proprietary data as well as synthetic data generated from Llama 3.1 405B and the NVIDIA Nemotron Reward model.

Hardware-wise, it's powered by the NVIDIA DGX Cloud AI platform, which allows it to access the most advanced AI hardware, architecture, and technologies. It's an NVIDIA AI Foundry, so that's to be expected. Meta's open-source Llama 3.1 405B model was announced earlier this week, offering capabilities and power previously limited to closed-source models. This new partnership between NVIDIA and Meta is a big deal for companies everywhere.

"Meta's openly available Llama 3.1 models mark a pivotal moment for the adoption of generative AI within the world's enterprises," said Jensen Huang, founder and CEO of NVIDIA.

Apple Intelligence expected to release with coming iOS 18 update

iOS 18 is Apple's biggest overhaul to its operating system in years. It will be the first update to introduce AI-powered features to the Cupertino company's product lineup.

The new operating system update coming to the iPhone, Mac, and iPad contains Apple's version of artificial intelligence, which it's calling Apple Intelligence. Last week, Apple rolled out the public beta for iOS 18, but it doesn't include the suite of Apple Intelligence features, leaving users confused as Apple said it would be included in iOS 18. However, it will likely first go to developer beta and then to a public opt-in beta before being pushed out to the wider public.

So, when will you be able to try Apple's new AI features? I can see Apple limiting access to its AI features all the way up until the launch of the iPhone 16, as the company won't want to prematurely release its biggest selling point for its new generation of devices only to see its customers go out and buy now-discounted previous generations that are still AI compatible.

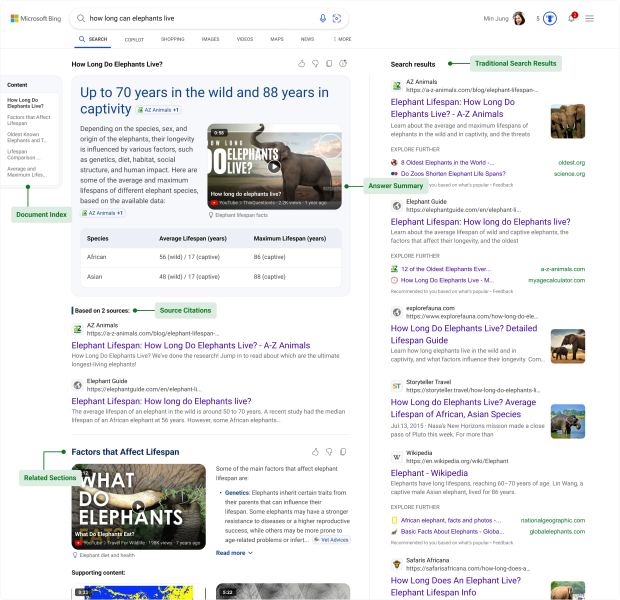

Microsoft unveils Bing Generative Search: AI enhanced, a complete overhaul of search on Bing

Microsoft has just announced Bing Generative Search, an overhaul and upgrade of Bing Search that provides AI-generated results directly on the Bing search page.

At the top of Bing search you'll soon find an AI-generated answer created by small and large language models that have reviewed millions of sources to provide what Microsoft hopes is the most accurate (AI-powered) answer.

The AI-powered Bing Generative Search will break the answer down into a document index that provides more information about what you're searching for, if you want to know more. The Bing search page will also provide a list of sources that the AI-generated text was created from, and show traditional search results in the sidebar on the right, if you don't care about the AI-powered Bing search.

SK hynix Q2 2024 financials: quarterly revenue hits all-time high, global #1 AI memory provider

SK hynix has announced its Q2 2024 financial results, with quarterly revenue hitting an all-time high and SK hynix cementing its position as the leading AI memory provider.

The South Korean memory giant recorded 16.4 trillion won in revenue, 5.46 trillion won in operating profit (with an operating margin of 33%), and 4.12 trillion won in net profit (with a net margin of 25%) in Q2 2024.

SK hynix notes that quarterly revenue reached an all-time high, "far exceeding" the previous record of 13.81 trillion won in Q2 2022. SK hynix said that the continuous rise in overall prices of DRAM and NAND products, along with strong demand for AI memory, including HBM, led to a 32% increase in revenue compared to the prior quarter.
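
The margins quoted above can be checked with a few lines of arithmetic. The following is only a rough sketch using the figures in this article; the variable names and the `pct` helper are illustrative, and rounding to whole percentages is an assumption about how the margins were reported.

```python
# Figures in trillions of won, as quoted in the article for Q2 2024.
revenue = 16.4
operating_profit = 5.46
net_profit = 4.12
previous_record = 13.81  # Q2 2022 revenue, the earlier all-time high


def pct(part, whole):
    """Return part / whole as a percentage, rounded to a whole number."""
    return round(part / whole * 100)


operating_margin = pct(operating_profit, revenue)
net_margin = pct(net_profit, revenue)
# How far the new record sits above the old one, in percent.
record_gain = pct(revenue - previous_record, previous_record)

print(f"Operating margin: {operating_margin}%")
print(f"Net margin: {net_margin}%")
print(f"Gain over previous record: {record_gain}%")
```

The results line up with the 33% operating margin and 25% net margin stated above, and put the new record roughly 19% above the Q2 2022 figure.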

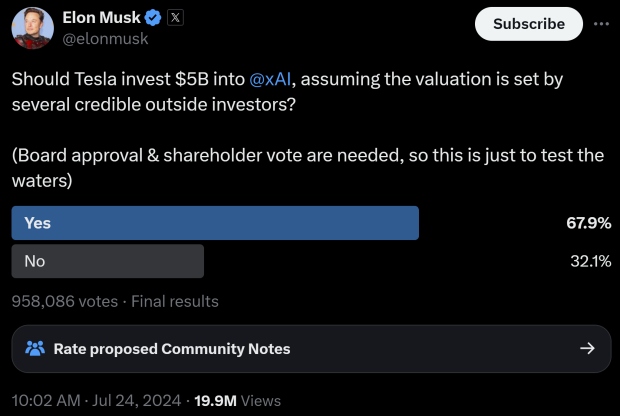

Elon Musk asks if Tesla should invest another $5 billion into xAI startup

How do you get $5 billion out of Tesla to invest into your AI startup? You poll your followers on X if you're Elon Musk, which is exactly what he did:

The Tesla and SpaceX founder asked his followers: "should Tesla invest $5 billion into xAI, assuming the valuation is set by several credible outside investors? (Board approval & shareholders vote are needed, so this is just to test the waters)."

Analyst says there's too much 'AI FOMO' for AI to stop its momentum, says AI halt is 'fiction'

Moor Insights & Strategy founder, CEO, and chief strategist Patrick Moorhead had some choice words for anyone expecting the AI market to come crashing down anytime soon. In a chat with Yahoo Finance, he said: "This whole notion of AI coming to a screeching halt anytime soon is fiction".

Moorhead's comments come as some companies worry about an AI bust and the entire market coming to a screeching halt. Among them is SK Group chairman Chey Tae-won, who recently compared the AI boom to the gold rush at the 47th KCCI Jeju Forum, saying that "without making money, the AI boom could vanish, just as the gold rush disappeared".

NVIDIA to make $210 billion revenue from selling its Blackwell GB200 AI servers in 2025 alone

Morgan Stanley has said that the global supply chain will see a huge boost in orders from the industry for AI servers, with NVIDIA continuing to hold (and clawing even more) market share to dominate the AI industry further.

NVIDIA is expected to ship between 60,000 and 70,000 units of its Blackwell GB200 AI servers, which could bring in as much as $210 billion. Each server costs $2 million to $3 million, so 70,000 GB200 AI servers at $3 million each = $210 billion. NVIDIA is making the NVL72 and NVL36 GB200 AI servers, as well as B100 and B200 AI GPUs on its own.

Morgan Stanley estimates that if NVIDIA's new NVL36 AI cabinet ends up as the biggest seller by quantity, the overall demand for GB200 in 2025 will increase to 60,000 to 70,000 units. The big US cloud companies are lining up for -- or have already purchased -- NVL36 AI cabinets, but by 2025 we could expect NVL72 AI cabinets to be shipping in higher quantities than NVL36.
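
The $210 billion headline figure is the top of the range implied by the numbers above. As a quick sketch, the full range can be worked out from the quoted unit counts and per-server prices; the variable names are illustrative, and treating every server as selling at a single price is a simplifying assumption.

```python
# Shipment and price ranges as quoted in the article.
units_low, units_high = 60_000, 70_000
price_low, price_high = 2_000_000, 3_000_000  # US dollars per GB200 server

# Simplifying assumption: every unit sells at the same price.
revenue_low = units_low * price_low
revenue_high = units_high * price_high

print(f"Low end:  ${revenue_low / 1e9:.0f} billion")
print(f"High end: ${revenue_high / 1e9:.0f} billion")
```

Under these assumptions the range runs from $120 billion to $210 billion, so the headline number corresponds to the highest shipment estimate at the highest price.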

Meta releases 'world's largest' AI model trained on $400 million worth of GPUs

Meta has announced its release of Llama 3.1 405B, which is what the company is describing as the "world's largest" open-source large language model.

Meta explains via a new blog post that Llama 3.1 405B is "in a class of its own" with "state-of-the-art capabilities" that rival the leading AI models currently on the market when it comes to general knowledge, steerability, math, multilingual translation, and tool use. Meta directly compares Llama 3.1 405B with competing AI models, such as OpenAI's various GPT models, showcasing the recently released model trained on 15 trillion tokens. A token is a small fragment of text, such as a word or part of a word.

To train this 405 billion parameter model, Meta used 16,000 NVIDIA H100 GPUs, which cost $25,000 each. This means the AI model was trained on $400 million worth of NVIDIA GPUs. Training required 30.84 million GPU hours and produced approximately 11,390 tons of CO2.
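
For a sense of scale, the figures above can be combined in a short calculation. This is only a back-of-the-envelope sketch: the variable names are illustrative, and spreading the GPU hours evenly across all 16,000 GPUs is an assumption, not something Meta has stated.

```python
# Figures as quoted in the article.
gpu_count = 16_000
price_per_gpu = 25_000        # US dollars per H100
total_gpu_hours = 30_840_000  # 30.84 million GPU hours

# Hardware cost behind the $400 million headline.
total_cost = gpu_count * price_per_gpu

# Assumption: the GPU hours are spread evenly across every GPU.
hours_per_gpu = total_gpu_hours / gpu_count
days_per_gpu = hours_per_gpu / 24

print(f"Total GPU cost: ${total_cost:,}")
print(f"Hours per GPU: {hours_per_gpu:,.1f}")
print(f"Days per GPU: {days_per_gpu:.1f}")
```

Under that even-split assumption, each GPU would have run for roughly 80 days.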

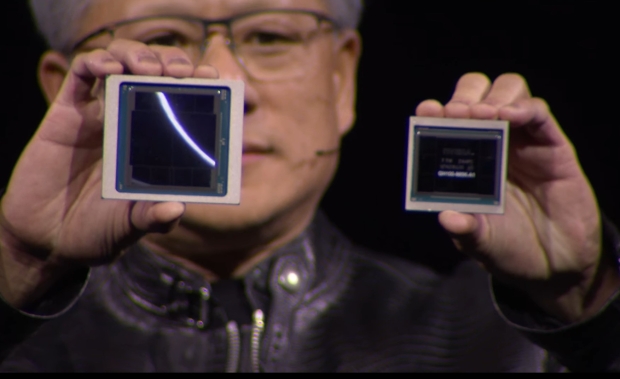

TSMC declined NVIDIA's request for a dedicated packaging manufacturing line for its GPUs

TSMC reportedly turned down a request by NVIDIA CEO Jensen Huang for the Taiwanese semiconductor giant to set up a dedicated packaging manufacturing line for NVIDIA's products.

In the world of chips, semiconductor fabrication and chip packaging have become increasingly important for pumping out as many AI GPUs as possible. Unlike fabrication capacity, packaging has struggled to keep up with the insatiable demand for chips, with TSMC having to dedicate considerable resources towards advanced packaging capacity.

NVIDIA CEO Jensen Huang visited TSMC headquarters earlier this year, meeting with TSMC founder Dr. Morris Chang and the firm's former chairman. Industry sources said Huang requested that the company set up a dedicated packaging line for NVIDIA GPUs.