Over 60 countries, including the U.S. and China, took part in the first international summit on the use of AI in the military and warfare at The Hague, where they signed a 'call to action' for the responsible use of the technology. This hopefully means that creating AI soldier bots capable of wiping out countless people isn't at the top of the list when developing new AI-based military platforms.

Unfortunately, it wasn't a formal or legally binding agreement, simply a pledge to develop and use AI in accordance with "international legal obligations and in a way that does not undermine international security, stability, and accountability." With the rise of AI platforms like ChatGPT, and with AI-assisted targeting systems and facial recognition being developed for military use, not to mention drones as a tool of warfare, it was a relatively light affair for such a hot topic.

As for attendance at REAIM (Responsible AI in the Military Domain), Russia and Ukraine did not attend the summit, while Israel was there but did not sign the statement. The U.S. put forward a framework for the responsible use of AI in the military, stating that AI warfare should include "appropriate levels of human judgment." However, that is a somewhat vague standard when talking about the potential for autonomous killer robots. China added that it opposed an AI arms race and that countries should work through the United Nations on AI and the military.

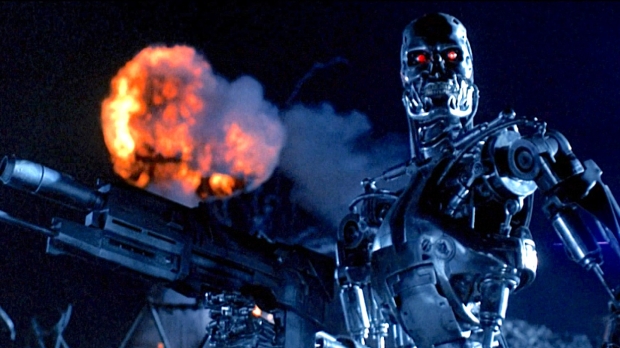

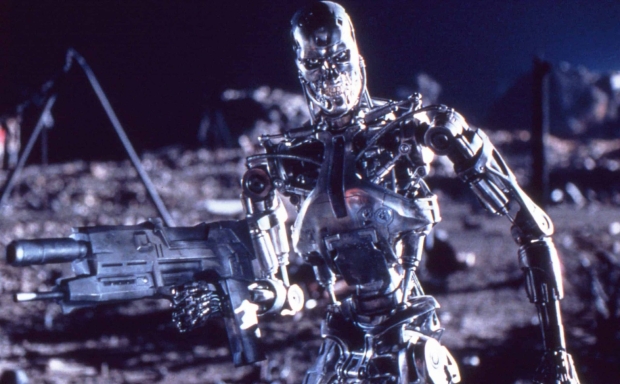

No doubt this won't be the last formal discussion or international summit relating to AI in the military, so here's hoping that steps are taken to avoid a situation where an AI system called Skynet becomes self-aware and we all have to follow some dude named John Connor into battle.