NVIDIA's next-gen Hopper GPU architecture is one of the most monstrous pieces of technology the human race has ever created... but it wasn't just humans: artificial intelligence (AI) helped in a huge way, too.

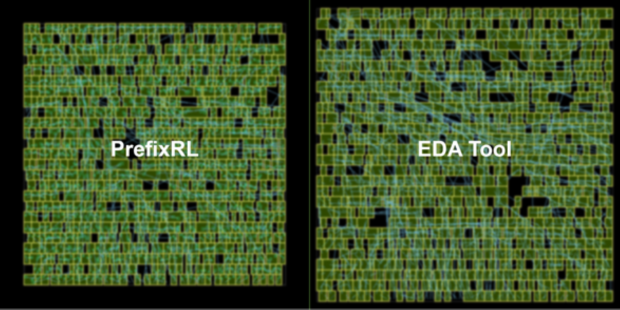

In a recent post on its own Developer website, NVIDIA explains how it used AI to help design the greatest GPU it has created so far: the new NVIDIA Hopper H100 GPU. NVIDIA normally designs most of its next-gen GPUs using Electronic Design Automation (EDA) tools, but for Hopper H100 it also used PrefixRL, a methodology that optimizes parallel prefix circuits using deep reinforcement learning. This lets NVIDIA design circuits that are smaller, faster, and more power-efficient, which in turn means more performance from the GPU.

Rajarshi Roy, who works in Applied Deep Learning Research at NVIDIA, tweeted: "Arithmetic circuits were once the craft of human experts, and are now designed by AI in NVIDIA GPUs. H100 chips have nearly 13,000 AI designed circuits! How is this possible?" He then posted a link to the blog post that he co-wrote on NVIDIA's website.

The authors explain: "Arithmetic circuits in computer chips are constructed using a network of logic gates (like NAND, NOR, and XOR) and wires. The desirable circuit should have the following characteristics:"

- Small: A lower area so that more circuits can fit on a chip.

- Fast: A lower delay to improve the performance of the chip.

- Consume less power: A lower power consumption of the chip.

NVIDIA says it used this method to design close to 13,000 circuits for H100. The AI-designed circuits offer up to a 25% area reduction compared with those produced by EDA tools, while being just as fast and functionally equivalent.
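To make the area-versus-delay trade-off a little more concrete, here is a minimal Python sketch of an adder built on a parallel prefix computation. It is not NVIDIA's code and has nothing to do with PrefixRL's actual implementation: the function names (`combine`, `ripple_prefix`, `kogge_stone_prefix`, `add`) are made up for illustration, the two prefix structures are standard textbook ones, and the node count and level count are only rough stand-ins for area and delay.

```python
def combine(hi, lo):
    """Prefix operator: merge the (generate, propagate) pair of a higher bit span with a lower one."""
    g_hi, p_hi = hi
    g_lo, p_lo = lo
    return (g_hi | (p_hi & g_lo), p_hi & p_lo)


def ripple_prefix(gp):
    """Serial prefix structure: fewest nodes, but one level per bit."""
    out = list(gp)
    for i in range(1, len(out)):
        out[i] = combine(out[i], out[i - 1])
    return out, len(gp) - 1, len(gp) - 1  # prefixes, nodes, levels


def kogge_stone_prefix(gp):
    """Kogge-Stone prefix structure: more nodes, but only about log2(width) levels."""
    cur = list(gp)
    nodes = levels = 0
    distance = 1
    while distance < len(cur):
        nxt = list(cur)
        for i in range(distance, len(cur)):
            nxt[i] = combine(cur[i], cur[i - distance])
            nodes += 1
        cur = nxt
        levels += 1
        distance *= 2
    return cur, nodes, levels


def add(a, b, width, prefix_fn):
    """Add two unsigned integers of the given bit width using the chosen prefix structure."""
    bits_a = [(a >> i) & 1 for i in range(width)]
    bits_b = [(b >> i) & 1 for i in range(width)]
    # Per-bit generate (both inputs are 1) and propagate (exactly one input is 1).
    gp = [(x & y, x ^ y) for x, y in zip(bits_a, bits_b)]
    prefixes, nodes, levels = prefix_fn(gp)
    # carries[i] is the carry into bit i; the last entry is the carry-out.
    carries = [0] + [g for g, _ in prefixes]
    total = sum((gp[i][1] ^ carries[i]) << i for i in range(width))
    total |= carries[width] << width
    return total, nodes, levels


if __name__ == "__main__":
    for name, fn in [("ripple", ripple_prefix), ("kogge-stone", kogge_stone_prefix)]:
        total, nodes, levels = add(200, 100, 8, fn)
        print(f"{name}: sum={total}, nodes={nodes}, levels={levels}")
```

Both structures compute the same sum, but the serial one uses fewer prefix nodes across many levels while Kogge-Stone uses more nodes across far fewer levels. The design space between extremes like these is what makes the smallest circuit at a given speed hard to find by hand.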

PrefixRL is a very computationally demanding task: according to NVIDIA, physical simulation required 256 CPUs for each GPU, and training the 64-bit case took over 32,000 GPU hours. To handle this, NVIDIA developed "Raptor", its new in-house distributed reinforcement learning platform that is built to take advantage of NVIDIA hardware. The blog post lists the networking features that help here:

- Raptor's ability to switch to NCCL for point-to-point transfers, sending model parameters directly from the learner GPU to an inference GPU.

- Redis for asynchronous and smaller messages such as rewards or statistics.

- A JIT-compiled RPC to handle high volume and low latency requests such as uploading experience data.
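The split described in these bullets can be pictured as a simple routing table. The Python sketch below is purely illustrative: Raptor is an in-house NVIDIA platform whose API is not public, so every name here (`Transport`, `MessageKind`, `ROUTES`, `pick_transport`) is hypothetical, and no real NCCL, Redis, or RPC call is made.

```python
from enum import Enum, auto


class Transport(Enum):
    NCCL = auto()   # point-to-point GPU transfers
    REDIS = auto()  # asynchronous, smaller messages
    RPC = auto()    # high-volume, low-latency requests


class MessageKind(Enum):
    MODEL_PARAMETERS = auto()
    REWARD = auto()
    STATISTICS = auto()
    EXPERIENCE_DATA = auto()


# Hypothetical mapping that mirrors the three bullets above.
ROUTES = {
    MessageKind.MODEL_PARAMETERS: Transport.NCCL,
    MessageKind.REWARD: Transport.REDIS,
    MessageKind.STATISTICS: Transport.REDIS,
    MessageKind.EXPERIENCE_DATA: Transport.RPC,
}


def pick_transport(kind: MessageKind) -> Transport:
    """Return the transport that the article associates with this kind of message."""
    return ROUTES[kind]


if __name__ == "__main__":
    for kind in MessageKind:
        print(f"{kind.name} -> {pick_transport(kind).name}")
```

The idea is simply that each kind of traffic goes over the channel best suited to its size and latency needs, rather than pushing everything through one pipe.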
