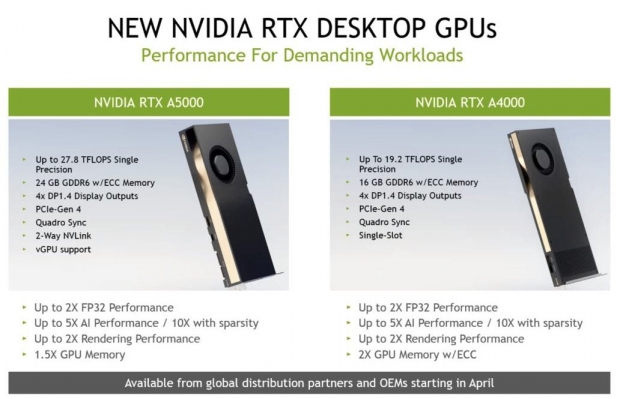

NVIDIA couldn't host a GPU Technology Conference (GTC 2021) without announcing some new GPUs, and this time it introduced the new NVIDIA RTX A5000 and RTX A4000.

The new NVIDIA RTX A5000 and A4000, like the flagship RTX A6000, pack new RT Cores, Tensor Cores, and CUDA cores that speed up AI, graphics, and real-time rendering. The RTX A5000 packs the GA102 GPU with 28 billion transistors on Samsung's custom 8nm node, with 8192 CUDA cores and up to 27.8 TFLOPS of single-precision compute performance.

The RTX A5000 has 24GB of GDDR6 memory with ECC on a 384-bit memory bus with up to 768GB/sec of memory bandwidth, which can be doubled when you pair two NVIDIA RTX A5000 GPUs with NVLink, for up to 48GB of GDDR6 across both cards. The NVIDIA RTX A4000 doesn't support NVLink, so you're limited to just 16GB of GDDR6 on the RTX A4000.
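The bus width and bandwidth figures quoted for the two cards can be sanity-checked with a bit of arithmetic. The sketch below assumes the usual relationship for GDDR memory (peak bandwidth = bus width × per-pin data rate ÷ 8) and works backwards to the per-pin data rate each card would need. The function name is made up for illustration, and the resulting data rates are derived from the article's numbers, not quoted from NVIDIA.

```python
def implied_pin_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by peak bandwidth and bus width.

    Assumes: bandwidth (GB/s) = bus width (bits) * pin rate (Gbit/s) / 8.
    """
    return bandwidth_gb_s * 8 / bus_width_bits


# (peak bandwidth in GB/s, bus width in bits), as quoted in the article
cards = {
    "RTX A5000": (768, 384),
    "RTX A4000": (448, 256),
}

for name, (bandwidth, bus_width) in cards.items():
    rate = implied_pin_rate_gbps(bandwidth, bus_width)
    print(f"{name}: {rate:.1f} Gbps per pin")
```

Under that assumption the RTX A5000's numbers work out to 16 Gbps per pin and the RTX A4000's to 14 Gbps per pin, so the bandwidth gap comes from both a wider bus and faster memory.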


(RTX A5000 on top, RTX A4000 on bottom)

Another thing the RTX A5000 has over the RTX A4000 is support for virtualization through NVIDIA RTX vWS software. This lets a single NVIDIA RTX A5000 power multiple high-performance virtual workstation instances, so remote workers can share resources and use the power of the RTX A5000s in that system.


NVIDIA's new RTX A4000 uses the GA104 GPU with 17.4 billion transistors on Samsung's custom 8nm node, with 6144 CUDA cores and up to 19.2 TFLOPS of single-precision compute performance. It has 16GB of GDDR6 memory on a 256-bit memory bus with up to 448GB/sec of memory bandwidth.

As for the TDP on each GPU, we're looking at 230W for the RTX A5000 and 140W for the RTX A4000.
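For a rough feel of how the compute and power figures relate, here is a small sketch that derives two numbers from the specs quoted in this article: the clock speed implied by each card's peak single-precision figure, and the peak TFLOPS per watt of TDP. It assumes the common convention that each CUDA core retires one fused multiply-add (2 FLOPs) per clock; the function names are illustrative, and the results are back-of-the-envelope estimates, not official clocks or measured efficiency.

```python
def implied_clock_ghz(peak_tflops: float, cuda_cores: int) -> float:
    """Clock (GHz) implied by peak FP32 throughput.

    Assumes: peak FLOPS = CUDA cores * 2 FLOPs per clock (one FMA) * clock.
    """
    flops = peak_tflops * 1e12
    return flops / (2 * cuda_cores) / 1e9


def tflops_per_watt(peak_tflops: float, tdp_watts: float) -> float:
    """Peak single-precision TFLOPS per watt of rated TDP."""
    return peak_tflops / tdp_watts


# (peak FP32 TFLOPS, CUDA cores, TDP in watts), as quoted in the article
cards = {
    "RTX A5000": (27.8, 8192, 230),
    "RTX A4000": (19.2, 6144, 140),
}

for name, (tflops, cores, tdp) in cards.items():
    clock = implied_clock_ghz(tflops, cores)
    efficiency = tflops_per_watt(tflops, tdp)
    print(f"{name}: ~{clock:.2f} GHz implied, {efficiency:.3f} TFLOPS/W")
```

On these assumptions the RTX A5000 lands at roughly 1.70 GHz and the RTX A4000 at roughly 1.56 GHz, while the RTX A4000 comes out slightly ahead on peak TFLOPS per watt (about 0.137 versus 0.121).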