Two Weeks in the Mine

Heading Down the Mine

It has now been 14 days since I started my Ethereum journey, and look how far I've come. I started out with a single 4-way GPU system, which I turned into two PCs with seven graphics cards between them, then into four systems with 12 graphics cards, and then it all went a little blurry...

I'm now running 12 systems with 40 graphics cards between them, thanks to the biggest asset of them all: PCIe x1 to PCIe x16 risers. Without these, I would be stuck at closer to 20-25 graphics cards. There's a mix of NVIDIA and AMD in there, with a bunch of NVIDIA GeForce GTX 1060s because of their great power efficiency, mixed in with GTX 1070s, GTX 1080s, and GTX 1080 Ti cards. Spread across their own dedicated systems, and mixed into others, are the cryptocurrency beasts: Radeon R9 Fury series cards and the impossible-to-find Radeon RX 400 and RX 500 series cards.

There's a heap of RX 470/480/570/580 cards, but the R9 Nano, R9 Fury, and R9 Fury X cards are still Ethereum mining behemoths. They have special places in three different systems: one dedicated to Fiji-based GPUs, and another two dedicated to Radeon RX series graphics cards. For the systems with 750W/850W PSUs, I've used the power-efficient GTX 1060s, thanks to their single 6-pin PCIe power connector.

The Last Two Weeks

I started on June 6, and by June 7 I was already hitting 300MH/s without a problem. At that point, I wrote the "I'm making $900 per month mining Ethereum, I want MORE!" article, which has since blown up. That's when the bug bit, and off I went on this adventure.

After reaching 300MH/s, I was keen to double it within a few days and slowly venture up to the peak of 1GH/s of Ethereum mining. The price was rising every day, and so was the difficulty - timing was key.

I had three more systems to use, so I filled them with the fastest hardware I had, but it was too much for my small three-bedroom house and its weak power delivery. I reached just shy of 500MH/s within 24 hours of adding the new systems, but had to swap out the 1200W/1500W PSUs for smaller, more power-efficient ones.

Another 48 hours and some PSU shuffling later, without buying any additional hardware, I hit another wall at 600MH/s once I'd assembled the last system from the parts I had on hand.

One Week Later & LOTS of Hardware Purchases

One Week Later...

After enjoying the 600MH/s milestone, I knew I needed more power efficiency. By this time, the systems were sprawled out across the house. From where I started in my main living area (which doubles as a large office), they spread into:

- Entire living area

- Main dining area

- Laundry

- Spare room 1

- Spare room 2

On top of all of this, there was mess EVERYWHERE: networking and power cables down the hallways, every single PCIe power connector on every single PSU tapped out, and a constant balancing act to spread the power load without causing blackouts or killing the 6- and 8-outlet power boards.

SO MUCH NEW HARDWARE

I went out and spent just over $10,000 on a bunch of motherboards, CPUs, SSDs, PSUs, and 22 graphics cards. Halfway through writing this (and it's only day 14), I've just ordered another $3500 worth of gear: three more CPU/motherboard combos and six EVGA GeForce GTX 1060 6GB graphics cards. Oh, and I have 20 new PCIe x1 to x16 risers on the way.

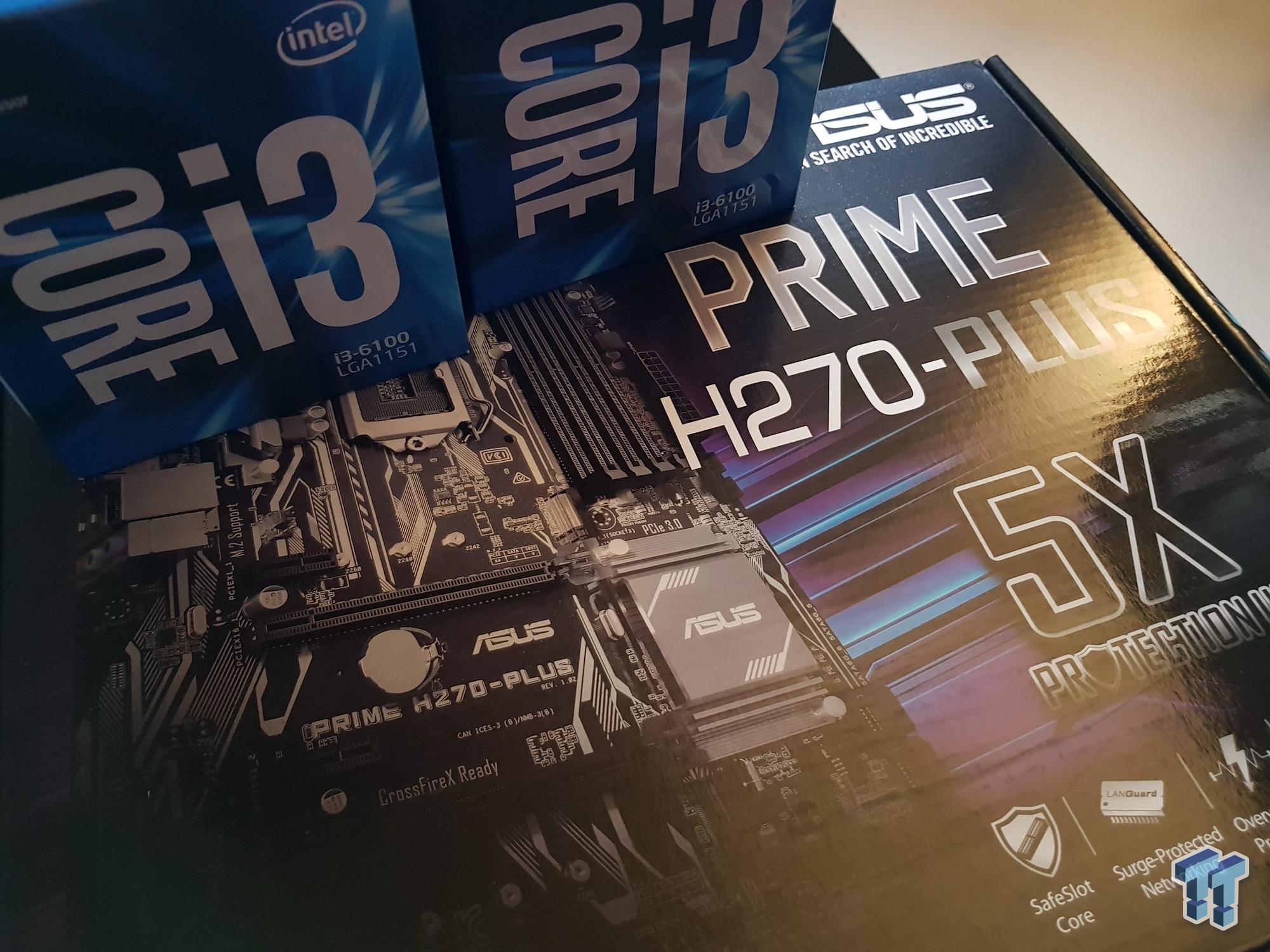

I'm using the resourceful ASUS PRIME H270-PLUS motherboard, as it has six PCIe slots, and with six risers you can get a heap of power-efficient graphics cards into a single system. I've got two of these set up with six GTX 1060s each, with each system sipping around 500W total. I chose the Core i3-6100 because I couldn't get my hands on the Celeron G3930 processor at the time, but with the order of three new CPU/motherboard combos I managed to scoop up the Celeron, which saves $80+ per CPU (around $240 in total).

Here we have the two motherboards that helped me get past 700MH/s... and below, some of the new GTX 1060s that helped me get under the power limit of the entire house.

Temporary Set Up Before the Move!

Temporary Setup

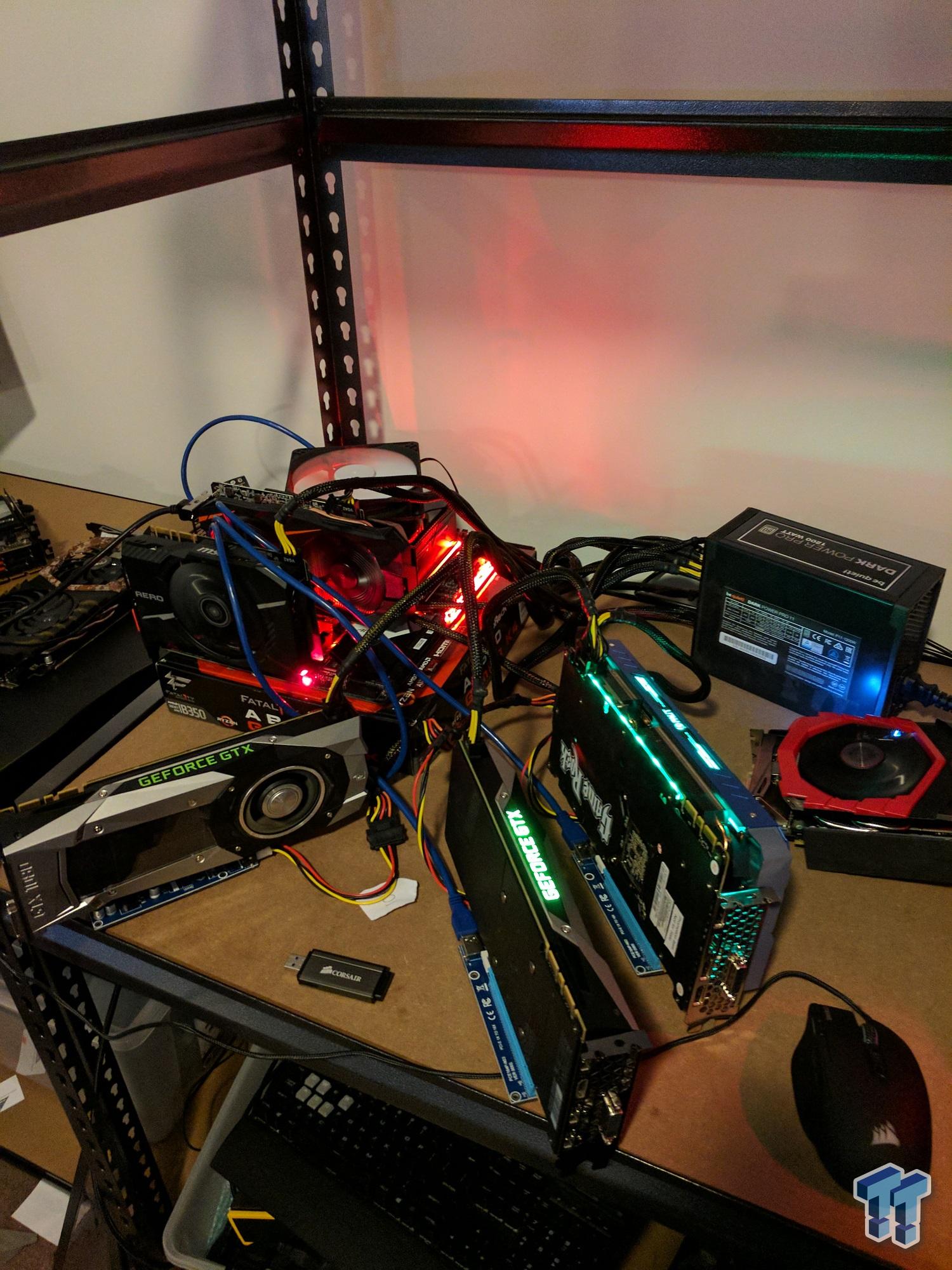

A little bit of space between the cards is good, but this layout wasn't meant for long-term use. It was only running for a few days so we could check power consumption and heat levels while we cleared out the other rooms and acquired new hardware. Oh, and lots and lots of troubleshooting. I was doing 15-hour days at this point...

Crazy, eh? Don't worry, the cards were only used like this for around 24 hours before everything was moved onto new shelving, and we also moved a bunch of them out to make way for the new GTX 1060s.

The first set of them...

The entire stack of cards that I purchased in the last five days, on top of the Radeon R9 Fury/Fury X, Radeon RX 400/500 series, and GeForce GTX 10 series, oh and TITAN X cards.

This is the haul of PSUs: some that Corsair supplied for other builds, plus Corsair PSUs I purchased over the last few days. The Corsair PSUs have been rock freakin' solid, with the AX1500i powering the 4-way GTX 10 series system that has two GTX 1080 Tis, one TITAN X(P), and one GTX 1080.

Even More Testing, But We're Nearly There

I found that some motherboards didn't like having five or six graphics cards installed, but some would take four graphics cards on PCIe risers if the main x16 slot was used. Not with a riser, though: it needed a graphics card physically installed in that slot, which sometimes meant the card covered the PCIe x1 slot next to it. Some of the motherboards had an x1 slot behind the main x16 slot, meaning I could run four or five cards in even the simplest or oldest PCs.

Don't try this at home, folks - well, do try it - just not like this.

These are some shelves that Ryan Hooper (our resident case modder) built for the Ethereum mining machines. I have four of these holding 20 graphics cards in total, and we also moved onto other shelving as the number of systems running at once went up.

And another one.

Power Efficiency is KING = Opens the Door for 1GH/s

It's almost like my Ethereum mining addiction was mocking me: as I shuffled the systems around for the big move, I hit 850MH/s... breaching 900MH/s for a brief second. It was after this that we decided to do some tweaking with the systems and the networking, and it all fell apart.

Twelve hours of troubleshooting later, split across a very long night and a straight seven-hour stint on the morning of the day I wrote this article (it's now 9PM, and I've been working solidly since 8:30AM), the systems are up and running and I've easily breached the 1GH/s barrier. It felt amazing to break through, with a new peak of 1.18GH/s, stabilizing at 1.1-1.15GH/s. Amazing stuff.

Nearly There...

Initial Setup, Testing, and Troubleshooting

As I said above, at first everything was... well, everywhere. It was set up in the most basic form, as a test to see which graphics cards would fit where, and how much power we'd be using across multiple systems. After moving onto some better shelving with more breathing room, the cards began running cooler, just in time for a new target.

Final Form Before the Big Move

This was the final form before the big move, after I shifted out a bunch of the graphics cards using heaps of power (looking at you, Radeon R9 295X2 - two of those were killing me). I swapped the two R9 295X2s (four GPUs in total) for 15 GTX 1060s at the same power draw... around 1400W total.

The shift to these stands really did help, but it was the move into the garage with additional industrial fans that changed everything. We added even more systems and used every single one of the 30 PCIe risers I had purchased, which meant we were finally at 40 graphics cards... and then came the big move, where all of the problems started and my entire setup suffered major outages.

We tore down the power system and networking setup, and moved all of the cards onto specific PSUs for specific loads, down to the very last PCIe power connector.
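Planning which cards go on which PSU is basically a packing problem: each PSU has a wattage rating and a fixed number of PCIe power connectors, and each card needs some of both. Here's a rough Python sketch of one way to approach it, a first-fit-decreasing pass that places the hungriest cards first. It's not the exact process we followed; the 80% headroom rule, the card wattages, and the `Card`/`Psu`/`assign` names are all hypothetical placeholders for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical planning sketch: every wattage and connector count here is an
# illustrative assumption, not a measurement from this build.
HEADROOM = 0.8  # assumed rule of thumb: keep each PSU at or below 80% of its rating


@dataclass
class Card:
    name: str
    watts: int       # assumed draw under mining load
    connectors: int  # PCIe power connectors the card needs


@dataclass
class Psu:
    name: str
    rating: int      # rated output in watts
    connectors: int  # PCIe power connectors available
    cards: list = field(default_factory=list)

    def load(self) -> int:
        return sum(c.watts for c in self.cards)

    def used_connectors(self) -> int:
        return sum(c.connectors for c in self.cards)

    def fits(self, card: Card) -> bool:
        # A card fits only if both the wattage budget and a free connector remain.
        return (self.load() + card.watts <= self.rating * HEADROOM
                and self.used_connectors() + card.connectors <= self.connectors)


def assign(cards, psus):
    """First-fit decreasing: place the hungriest cards first; return leftovers."""
    unplaced = []
    for card in sorted(cards, key=lambda c: c.watts, reverse=True):
        psu = next((p for p in psus if p.fits(card)), None)
        if psu is None:
            unplaced.append(card)
        else:
            psu.cards.append(card)
    return unplaced


if __name__ == "__main__":
    psus = [Psu("850W PSU", 850, 6), Psu("750W PSU", 750, 4)]
    cards = [Card("GTX 1060", 90, 1) for _ in range(8)]  # ~90W each is assumed
    leftover = assign(cards, psus)
    for psu in psus:
        print(f"{psu.name}: {psu.load()}W over {psu.used_connectors()} connectors")
    print(f"unplaced cards: {len(leftover)}")
```

With those made-up numbers, six cards land on the 850W unit (capped by its six connectors, not its wattage) and the remaining two go on the 750W unit, which is exactly the "down to the very last PCIe power connector" kind of limit we kept running into.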

Then... the move began.

Some Power & Networking Tweaks

This is the NTD box for my NBN connection (the National Broadband Network here in Australia, fiber to the home at 100/40Mbps), with lots of network cabling running out to all of my miners.

See! Also, some power cabling running overhead instead of across the floor, where I'd trip over it.

Cooling is one of THE most important parts of mining, especially at this level. Beyond this scale, you're going to need many more fans (I have two big ones), or strong AC units. We're lucky at the moment, as it's winter in Australia.

Reaching 1GH/s... and Beyond!

The New Temporary Setup, Powering 1GH/s+

This is one shelf with three systems and 17 graphics cards in total.

Another shelf with two systems and seven graphics cards in total. That's 24 graphics cards so far.

This is the six-GPU system with the very capable, budget-friendly ASUS PRIME H270-PLUS motherboard and six GTX 1060s. The power draw from this system was under 600W, and with some tweaking, it dropped to just over 500W while delivering more MH/s.

Another system, this time with four graphics cards attached.

The hottest system is the one with four GTX 10 series Founders Edition cards: the GTX 1080, TITAN X(P), and two GTX 1080 Ti cards. With some tweaking, this system is a 128MH/s monster, but the fans need to be cranked, so I put a fan in front of it, which helped immensely. Below that is another system with two GTX 1070s.

And finally, our Fiji-powered system with two AMD Radeon R9 Fury X graphics cards, and the super-small R9 Nano.

Total GPUs = 43

Now we're at 43 graphics cards, and we're cooking along with 1.1GH/s or so (1100MH/s). It fluctuates because there are so many cards - somewhere between 950MH/s and 1.2GH/s.

Step 1: Mine Ethereum, Step 2: ??? Step 3: PROFIT!!!

Disclaimer: This Isn't For Everyone

This isn't for everyone. I have purchased tens of thousands of dollars worth of hardware over the years, and have put it to good use. I should've been mining years ago, but I was so consumed by everything else around me that I never sat down and put myself through it. I'm spending considerable sums of money because this is what I love: technology. I don't go out to bars often, and I'm not into sports; I'm a hard-working, family-focused, tech-addicted guy. This is a rush for me; the profits are a nice dessert.

Well, I pulled myself up and ran into Ethereum mining. Late to the game? Yes. But I make up for it with 1GH/s of Ethereum mining power, though its returns will diminish over time as the difficulty rises and everyone else dives in. I'm riding it right now, but will be diversifying into other cryptocurrencies, including Siacoin and Zcash, in the next couple of weeks. There will be future articles covering those separately, and comparing them against Ethereum.
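Why do the returns shrink even though the hash rate doesn't? In Ethash, a block is valid when its proof-of-work hash falls below 2^256 divided by the difficulty, so on average it takes about `difficulty` hashes to find a block. That gives a simple expected-earnings estimate, sketched below in Python. The 5 ETH block reward default and the difficulty values are assumed placeholders, not live network figures, and the sketch ignores pool fees, uncle rewards, transaction fees, and luck.

```python
SECONDS_PER_DAY = 86_400


def expected_eth_per_day(hashrate_mhs: float, difficulty: float,
                         block_reward_eth: float = 5.0) -> float:
    """Rough expected ETH per day for a given hash rate.

    Assumes ~`difficulty` hashes are needed per block on average. The default
    block reward is an assumed placeholder; fees and uncles are ignored.
    """
    hashes_per_day = hashrate_mhs * 1e6 * SECONDS_PER_DAY  # MH/s -> hashes/day
    expected_blocks = hashes_per_day / difficulty
    return expected_blocks * block_reward_eth


if __name__ == "__main__":
    my_rate = 1100  # MH/s, roughly where the farm sits now
    for difficulty in (1.0e15, 2.0e15):  # hypothetical difficulty values
        eth = expected_eth_per_day(my_rate, difficulty)
        print(f"difficulty {difficulty:.1e}: ~{eth:.4f} ETH/day")
```

Same 1.1GH/s in both runs, but doubling the difficulty halves the expected ETH per day. That's the squeeze: the hardware keeps hashing at the same speed, it just earns a smaller slice as everyone else piles in.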

I will be moving to AMD Radeon RX Vega graphics cards as soon as I can, taking down a few of my GTX 10 series machines, as the new Vega GPU paired with HBM2 is said to deliver "monster cryptocurrency performance." If my sources are correct, Radeon RX Vega is going to be THE graphics card for Ethereum mining. AMD Radeon RX 580s already keep up with the GTX 1070 when neither card is tweaked, and they beat the GTX 1080 without a problem, too. They lose to the GTX 1080 Ti, but the GTX 1080 Ti is almost 2.5x the price.

Global GPU Shortage

The problem is, both sides are selling out of virtually all mid-range and high-end graphics cards. It started with a huge run on Radeon RX 500 series stock, with the RX 570 and RX 580 selling out. This sent RX 500 series prices through the roof. It had a follow-on effect on the older RX 470 and RX 480, before spreading to every other Radeon card on the market.

Older Radeon R9 290, 290X, 390, and 390X cards started increasing in price on second-hand markets globally, and the Radeon R9 Fury range (R9 Nano, R9 Fury, R9 Fury X) soon joined them. The Radeon R9 295X2 was even selling for $1000+ on Amazon and eBay last week, which is bonkers. Once the global Radeon shortage took hold, everyone started diving into GTX 1060s, which flew off shelves as their prices started climbing.

Over two weeks, I went from spending $290 AUD (around $220 USD) on a GTX 1060 3GB to over $360 AUD ($273 USD) for the same product. Radeon RX 570s and RX 580s disappeared from e-tailers' sites and flew off retailers' shelves. The GTX 1070 even started rising in price at my local retailers in South Australia, from ~$450 AUD ($342 USD) to $550+ AUD ($418 USD).
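To put those price jumps in percentage terms, here's a quick Python calculation. The 0.76 AUD-to-USD rate is an assumption back-calculated from the USD figures quoted above, so the converted numbers can differ from them by a dollar or so.

```python
AUD_TO_USD = 0.76  # assumed exchange rate, inferred from the USD figures quoted above

# (price before, price after) in AUD, as quoted above; the "after" prices are minimums
PRICES_AUD = {
    "GTX 1060 3GB": (290, 360),
    "GTX 1070": (450, 550),
}


def pct_increase(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


if __name__ == "__main__":
    for card, (before, after) in PRICES_AUD.items():
        print(f"{card}: ${before} -> ${after} AUD "
              f"(~${before * AUD_TO_USD:.0f} -> ${after * AUD_TO_USD:.0f} USD), "
              f"up {pct_increase(before, after):.1f}%")
```

That works out to at least a 24.1% jump on the GTX 1060 3GB and 22.2% on the GTX 1070.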

Coming Out For Some Light...

It literally feels like I've been down in a mine for a week. I've been doing 15-hour days trying to get this all nailed down and running at 1GH/s before writing another article on it, and here I am: roughly 1.1GH/s, which is a massive achievement. I swapped out multiple high-end graphics cards for freshly purchased GTX 1060s, for the perfect mix of lower temperatures and radically reduced power consumption.

I got to the point where I could add 12 GTX 1060s across just a couple of PCs, all in less than 1200W. Compare that with just two R9 295X2s (dual-GPU cards, so four GPUs total), which were drawing 1200W-1400W under load. Crazy stuff: after the switch, I went from ~100MH/s across the four GPUs on the R9 295X2s to ~250MH/s on the 12 GTX 1060s. Winner, winner, chicken dinner.
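Here's that comparison as hash rate per watt, in a small Python sketch. The wattages are the rough figures above: 1200-1400W for the two R9 295X2s, and 1200W as an upper bound for the GTX 1060s (they drew less), so the GTX 1060 number is, if anything, conservative.

```python
def mhs_per_watt(mhs: float, watts: float) -> float:
    """Mining efficiency in MH/s per watt."""
    return mhs / watts


# Rough figures from the comparison above
R9_295X2_PAIR_MHS = 100
R9_295X2_PAIR_WATTS = (1200, 1400)  # quoted range under load
GTX_1060_X12_MHS = 250
GTX_1060_X12_WATTS = 1200           # upper bound: "less than 1200W"


if __name__ == "__main__":
    new = mhs_per_watt(GTX_1060_X12_MHS, GTX_1060_X12_WATTS)
    for watts in R9_295X2_PAIR_WATTS:
        old = mhs_per_watt(R9_295X2_PAIR_MHS, watts)
        print(f"R9 295X2 pair @ {watts}W: {old:.3f} MH/s/W "
              f"vs GTX 1060 x12: {new:.3f} MH/s/W ({new / old:.1f}x)")
```

Depending on where the R9 295X2s sat in that range, that's roughly a 2.5x to 2.9x improvement in MH/s per watt, before even counting the GTX 1060s' lower real-world draw.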

I'm still getting the power consumption numbers together, so expect another article when it's all cleaned up. Sorry for the mess; I had to get everything working again after nearly 16 hours of downtime a couple of days ago. After that, I'll be doing some FLIR testing with my new thermal imaging camera for some more awesome Ethereum content.

For now, I'm getting some sunlight, as it has been a hard slog in the mine for a few weeks. I've been at 1GH/s+ for 24 hours now, I slept after writing all of this article apart from this final paragraph, and my systems are fine. No overheating issues, no crashes, just Ethereum mining goodness. It's getting more difficult by the day to mine ETH, but that's why I'm buying more hardware, right?!
