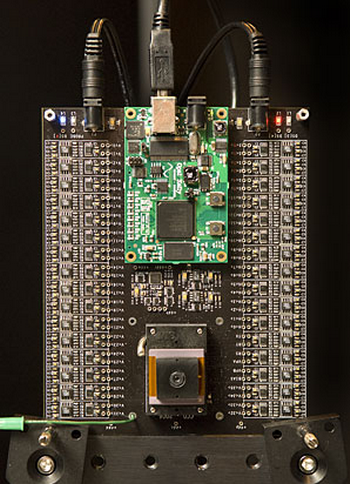

Stanford researchers have developed a 3D camera chip with 12,616 lenses. Human eyes judge depth with only two lenses; on this chip, each of the 12,616 lenses sees the scene from a slightly different perspective, and all the images are combined into a single image that carries far richer depth information.
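To see why slightly different perspectives reveal depth, here is a minimal sketch of the textbook two-view triangulation rule: the more a point shifts between two neighbouring views, the closer it is. This is not the Stanford team's algorithm. It assumes an idealised pinhole model, and the function name, focal length, lens spacing, and pixel shifts are all hypothetical values chosen only for illustration.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Distance (in metres) to a point seen by two parallel pinhole views.

    focal_length_px: focal length expressed in pixels (assumed value)
    baseline_m:      spacing between the two lenses in metres (assumed value)
    disparity_px:    how far the point shifts between the two images, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: a smaller shift between views means a more distant point.
for shift in (8.0, 4.0, 2.0):
    distance = depth_from_disparity(800.0, 0.001, shift)
    print(f"shift of {shift} px -> about {distance:.2f} m away")
```

With thousands of lenses instead of two, there are many such pairs to compare, which is what makes a dense depth estimate plausible.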

Potential uses for such a device are wide-ranging: robotic eyes for very fine-tuned work, biological imaging, 3D printing, the creation of 3D objects or people to inhabit virtual worlds, and 3D modelling of buildings.

The combined picture from this camera has almost everything in focus, both near and far. The beauty of the product, though, is that each layer, or lens perspective, can be filtered in or out, giving a "map" or contoured outline of objects that computers can use to model them in new ways.

The Stanford team comprises Keith Fife, a graduate student, and the two electrical engineering professors he works with, El Gamal and H.-S. Philip Wong. The multi-aperture camera could be as cheap as modern digital cameras and could even be built into a mobile phone.

Read more about it at the Stanford website.