SK hynix is reportedly increasing its spending on advanced chip packaging as it looks to maintain its leadership in High Bandwidth Memory, or HBM, for AI.

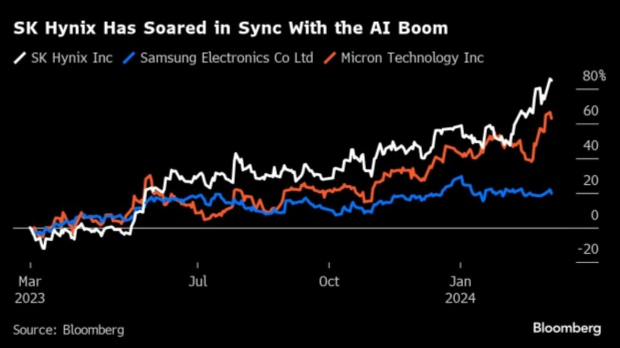

HBM market share between SK hynix, Micron, and Samsung (source: Bloomberg)

According to a new report from Bloomberg, the South Korean giant is investing more than $1 billion in South Korea this year to expand and improve the final steps of its chip manufacturing. Lee Kang-Wook, a former Samsung engineer who now leads packaging development at SK hynix, said in a recent interview: "The first 50 years of the semiconductor industry has been about the front-end. But the next 50 years is going to be all about the back-end," meaning packaging.

Lee specializes in advanced ways of combining and connecting semiconductors, techniques that have been the bedrock of the AI industry for the last few years. The executive started out in 2000, earning his PhD in 3D integration technology for micro-systems from Japan's Tohoku University under Mitsumasa Koyanagi, who invented the stacked capacitor DRAM used in smartphones.

In 2002, Lee joined Samsung's memory division as a principal engineer, where he led the development of Through-Silicon Via (TSV)-based 3D packaging technologies. This work later became the foundation for HBM, which stacks memory chips on top of one another and connects them with TSVs for faster and much more energy-efficient data processing.

NVIDIA is using SK hynix's latest HBM3 and HBM3E memory for its current and next-gen AI GPUs: the Hopper H100, the upcoming Hopper H200 (the first AI GPU with HBM3E memory), and the next-gen Blackwell B100.

SK hynix shares have risen 45% over the last 6 months and currently sit at record highs on the back of the AI GPU boom, making the memory giant the second most valuable company in South Korea, behind only Samsung.

Sanjeev Rana, an analyst at CLSA Securities Korea, said: "SK Hynix's management had better insights into where this industry is headed and they were well prepared. When the opportunity came their way, they grabbed it with both hands." As for Samsung, the analyst says "they were caught napping."

This news from SK hynix comes on the heels of its HBM competitor Samsung announcing a new 36GB HBM3E 12-Hi stack, which itself followed SK hynix's announcement that its new 24GB HBM3E 8-Hi stack is in mass production.
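
For a rough sense of how those two stacks compare, the short Python sketch below divides each quoted capacity by its layer count. It assumes every layer in a stack holds the same amount of memory, which the announcements quoted here do not state; the `per_layer_gb` helper is purely illustrative.

```python
# Stack figures quoted above: (name, number of layers, total capacity in GB).
stacks = [
    ("Samsung HBM3E 12-Hi", 12, 36),
    ("SK hynix HBM3E 8-Hi", 8, 24),
]


def per_layer_gb(layers: int, total_gb: int) -> float:
    """Capacity per stacked layer, ASSUMING all layers are the same size."""
    return total_gb / layers


for name, layers, total_gb in stacks:
    gb = per_layer_gb(layers, total_gb)
    # 1 GB = 8 Gb, so the same figure is also shown in gigabits.
    print(f"{name}: {gb:g} GB per layer ({gb * 8:g} Gb)")
```

Under that assumption both stacks work out to the same capacity per layer, so the jump from 24GB to 36GB would come from stacking more layers rather than from denser ones.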