The artificial intelligence race has already begun, and now leading technologists, along with AI experts, are calling for it to grind to a halt.

The recent launch of OpenAI's new language model, GPT-4, has made waves across the internet, with countless reports demonstrating its impressive capabilities. Perhaps most impressive is how much better GPT-4 is than its predecessors and how quickly OpenAI's developers achieved this. OpenAI released GPT-3 in 2020; it was superseded by GPT-3.5, released in November 2022 alongside ChatGPT. GPT-4, released on March 14, 2023, is OpenAI's newest model.

The improvements between each of these iterations are staggering. GPT-4 was trained on a much larger and more diverse data set than GPT-3.5 or GPT-3. OpenAI has not disclosed GPT-4's parameter count, though widely circulated but unconfirmed reports put it at 100 trillion, compared to GPT-3's 175 billion parameters. This increase in scale enables GPT-4 to provide much more accurate responses, be more reliable and creative overall, and handle more nuanced instructions. Furthermore, OpenAI added a new feature with GPT-4: the ability to accept images as input alongside text.

This brief history of OpenAI's language models demonstrates the speed of AI development and the leap in power with every iteration.

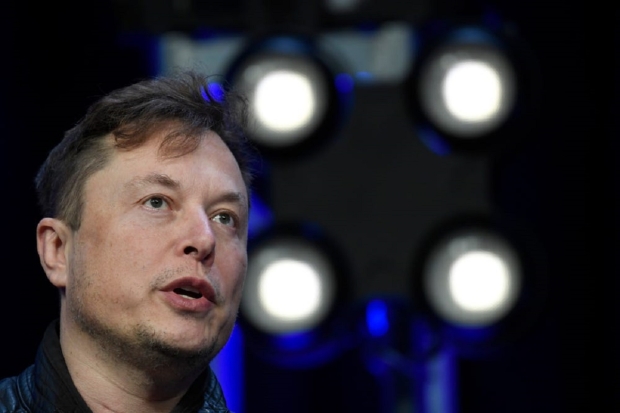

Elon Musk, Apple co-founder Steve Wozniak, Pinterest co-founder Evan Sharp, and Stability AI CEO Emad Mostaque have all added their names to an open letter published by the Future of Life Institute, a non-profit that concentrates on reducing existential risk from powerful technologies. The letter warns that AI systems such as OpenAI's GPT-4 are becoming "human-competitive at general tasks" and pose a potential threat to society and humanity as a whole.

The letter calls upon all AI developers to halt development of language models more powerful than GPT-4 for at least six months so that researchers can assess the power of the new technology and how it would impact the human race. The letter cites major concerns about AI being used to spread misinformation, its impact on the labor market through automation, and how it will influence society.

Furthermore, the letter warns that in the race to build ever more powerful AI, developers may fail to predict or reliably control these systems, putting civilization at risk simply because they did not wait for a proper assessment to be conducted.

Lastly, the letter calls upon AI developers to use the six-month pause to work with policymakers and regulators to create governance systems for AI.