NVIDIA CEO Jensen Huang told CNBC this week that the new Blackwell B200 AI GPUs will cost $30,000 to $40,000 each.

In the CNBC interview, Jensen also said that NVIDIA spent a whopping $10 billion on research and development for Blackwell, a monumental sum for what will be the best AI GPU technology the world has ever seen. At $30,000 to $40,000 each, the price is not bad, considering that NVIDIA was expected to charge $50,000 to $60,000 each.

We don't know whether Jensen meant the Blackwell B100 AI GPU or the flagship B200 AI GPU, but since B200 is the one NVIDIA showed off and detailed at GTC 2024 this week, you'd expect he meant B200. If B200 costs $6,000 to make and sells for 5x that, NVIDIA will be making some mighty profits: its AI GPU financials for later this year and into 2025 could make the recent record gains look like a tiny wave next to a B200-powered tsunami.
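To put the "5x" figure in context, here is a quick back-of-the-envelope sketch. It assumes the article's hypothetical $6,000 build cost (not a confirmed number) and Jensen's quoted $30,000 to $40,000 price range, and it ignores R&D, packaging, software and every other cost, so treat the "gross margin" here as a rough illustration only.

```python
# Rough unit economics for B200 using the article's numbers.
# ASSUMPTION: the $6,000 build cost is the article's hypothetical, not a confirmed figure.
UNIT_COST = 6_000                # assumed cost to make one B200, in USD
PRICE_RANGE = (30_000, 40_000)   # Jensen Huang's quoted price range, in USD


def price_multiple(price: float, cost: float) -> float:
    """How many times the build cost the selling price is."""
    return price / cost


def gross_margin(price: float, cost: float) -> float:
    """Margin as a fraction of the selling price (ignores R&D and all other costs)."""
    return (price - cost) / price


for price in PRICE_RANGE:
    print(f"${price:,}: {price_multiple(price, UNIT_COST):.2f}x cost, "
          f"{gross_margin(price, UNIT_COST):.0%} gross margin")
```

Running it prints `$30,000: 5.00x cost, 80% gross margin` and `$40,000: 6.67x cost, 85% gross margin`, so the "5x" in the article corresponds to the low end of the price range.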

Now, as for NVIDIA's new Blackwell B200 AI GPU itself: it packs a whopping 208 billion transistors and is made on TSMC's custom 4NP process node. It also has 192GB of ultra-fast HBM3E memory with 8TB/sec of memory bandwidth. Rather than a single GPU die, NVIDIA is using two GPU dies in one package, with a thin seam visible between them, a first for NVIDIA.

The two dies are joined by NV-HBI (NVIDIA High Bandwidth Interface), which provides 10TB/sec of bandwidth between them. As a result, the two B200 dies behave as a single chip, with no memory locality issues and no cache issues: the GPU simply does its (AI) thing at blistering speeds.

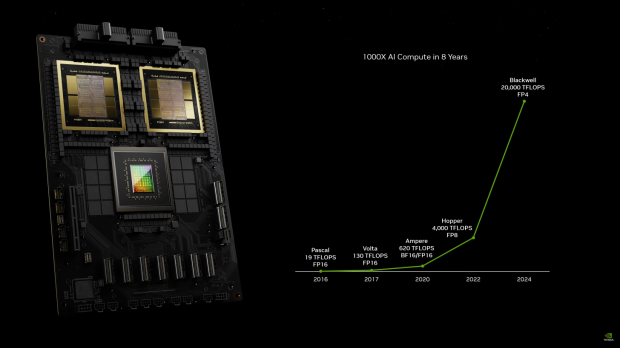

NVIDIA's new B200 AI GPU delivers 20 petaflops of AI performance from a single GPU, compared to just 4 petaflops from the current H100 AI GPU. Impressive. Note, though, that NVIDIA quotes the B200 figure in its new FP4 number format, while the H100 figure uses FP8. Because FP4 throughput is roughly double FP8 throughput on the same hardware, B200's 20 petaflops works out to about 10 petaflops of FP8, or 2.5x the theoretical FP8 compute of H100. Still, very impressive.
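The FP4-versus-FP8 caveat is easy to miss, so here is the arithmetic spelled out. This is a minimal sketch that assumes FP8 throughput is exactly half of FP4 throughput on the same chip; real FP8 figures can vary by product configuration.

```python
# Compare B200 and H100 on a like-for-like precision basis.
# ASSUMPTION: FP8 peak throughput is exactly half of FP4 peak throughput on B200.
B200_FP4_PFLOPS = 20.0   # NVIDIA's headline B200 figure (FP4)
H100_FP8_PFLOPS = 4.0    # H100 figure quoted in FP8


def fp4_to_fp8_equivalent(fp4_pflops: float) -> float:
    """Halve an FP4 figure to estimate the FP8 figure on the same hardware."""
    return fp4_pflops / 2


b200_fp8 = fp4_to_fp8_equivalent(B200_FP4_PFLOPS)
headline_ratio = B200_FP4_PFLOPS / H100_FP8_PFLOPS      # mixes FP4 with FP8
like_for_like_ratio = b200_fp8 / H100_FP8_PFLOPS        # FP8 vs FP8

print(f"Headline (FP4 vs FP8): {headline_ratio:.1f}x")
print(f"Like-for-like (FP8 vs FP8): {like_for_like_ratio:.1f}x")
```

The headline comparison suggests 5.0x, while the like-for-like FP8 comparison gives the 2.5x quoted above.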

B200 is built from two full reticle-size GPU dies, each with 4 x HBM3E stacks of 24GB, and each stack delivers 1TB/sec of memory bandwidth over a 1024-bit interface. That adds up to 192GB of HBM3E memory with 8TB/sec of memory bandwidth, a huge upgrade over the H100 AI GPU, which launched with 6 x HBM3 stacks of 16GB each (H200 later raised that to 24GB per stack).
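Here is how the per-stack numbers roll up into B200's headline memory specs. It is a simple sketch using the article's figures, assuming the 1TB/sec and 1024-bit numbers apply to each HBM3E stack (which is what makes the 8TB/sec total add up); the H100 comparison uses the article's 6 x 16GB figure.

```python
# Roll up B200's per-stack HBM3E figures into package totals.
# ASSUMPTION: 1TB/sec and a 1024-bit interface are per stack, as implied by the 8TB/sec total.
DIES = 2
STACKS_PER_DIE = 4
GB_PER_STACK = 24
TBPS_PER_STACK = 1.0
BUS_BITS_PER_STACK = 1024

stacks = DIES * STACKS_PER_DIE                 # total HBM3E stacks on the package
capacity_gb = stacks * GB_PER_STACK            # total memory capacity
bandwidth_tbps = stacks * TBPS_PER_STACK       # aggregate memory bandwidth
bus_width_bits = stacks * BUS_BITS_PER_STACK   # combined memory interface width

# H100 as described in the article: 6 x HBM3 stacks of 16GB each.
h100_capacity_gb = 6 * 16

print(f"{stacks} stacks, {capacity_gb}GB, {bandwidth_tbps:.0f}TB/sec, {bus_width_bits}-bit")
print(f"Capacity vs H100 (6 x 16GB): {capacity_gb / h100_capacity_gb:.1f}x")
```

This prints `8 stacks, 192GB, 8TB/sec, 8192-bit` and a 2.0x capacity ratio against the article's H100 configuration.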

NVIDIA is also using an all-new NVLink chip design with 1.8TB/sec of bi-directional bandwidth and support for NVLink domains of up to 576 GPUs. The NVLink chip itself packs 50 billion transistors and is manufactured by TSMC on the same process node as B200.