NVIDIA is splashing out on next-gen HBM3e memory, with the AI GPU leader securing enough stock of the new High-Bandwidth Memory for its upcoming Hopper H200 AI GPU and its next-gen Blackwell B100 AI GPU, both of which arrive in 2024.

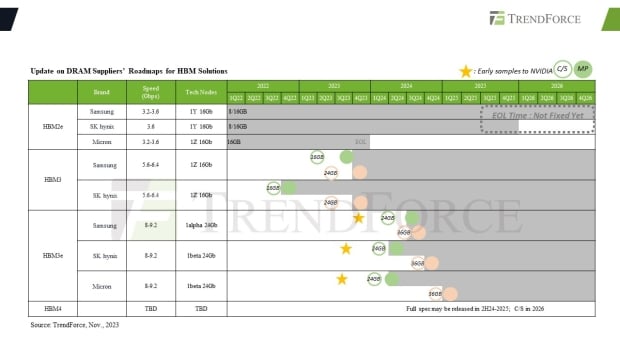

DRAM suppliers' roadmaps for HBM solutions (source: TrendForce)

According to Korean media outlet Biz.Chosun, NVIDIA has placed orders for massive quantities of new HBM3e memory from both SK hynix and Micron. It was only yesterday that SK hynix announced that development of its next-gen HBM4 memory kicks off in 2024, and in the meantime NVIDIA is scooping up as much HBM3e memory as it can.

NVIDIA has reportedly pre-paid around 700 billion to 1 trillion won for its HBM3e orders, which works out to as much as a staggering $775 million USD at the top of that range. Remember: this is just the pre-payment and not the full amount... so we're talking an easy $1 billion (probably much more) in early HBM3e memory orders from NVIDIA alone.
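As a quick sanity check on the won-to-dollar conversion, here is a minimal sketch in Python. The exchange rate of roughly 1,290 won per US dollar is an assumption on our part (it is not quoted in the report), and the helper name `krw_to_usd` is purely illustrative.

```python
# Assumed exchange rate (NOT from the report): about 1,290 won per US dollar.
KRW_PER_USD = 1_290.0


def krw_to_usd(won: float, krw_per_usd: float = KRW_PER_USD) -> float:
    """Convert an amount in Korean won to US dollars at a fixed rate."""
    return won / krw_per_usd


low_won = 700e9   # 700 billion won, the reported lower bound
high_won = 1e12   # 1 trillion won, the reported upper bound

low_usd = krw_to_usd(low_won)
high_usd = krw_to_usd(high_won)

print(f"Low end:  ${low_usd / 1e6:,.0f} million")
print(f"High end: ${high_usd / 1e6:,.0f} million")
```

At that assumed rate, the $775 million figure lines up with the 1 trillion won upper bound, while the 700 billion won lower bound comes in noticeably below it.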

NVIDIA's upcoming H200 AI GPU (source: NVIDIA)

NVIDIA is using the new HBM3e memory on its upcoming H200 AI GPU, which is a refreshed and beefed-up sibling to the H100 AI GPU that is powering most things AI right now. NVIDIA's new H200 AI GPU has 141GB of HBM3e memory with up to 4.8TB/sec of memory bandwidth, an upgrade from the 80GB of HBM3 memory (non-e) on the current H100 AI GPU.

- NVIDIA B100 AI GPU: unknown amount of HBM3e

- NVIDIA H200 AI GPU: 141GB of HBM3e @ 4.8TB/sec memory bandwidth

- NVIDIA H100 AI GPU: 80GB of HBM3 @ 3.35TB/sec memory bandwidth

- AMD Instinct MI300X AI GPU: 192GB of HBM3 @ 5.3TB/sec memory bandwidth
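To put the H200's memory upgrade in perspective, the short Python sketch below works out the capacity uplift over the H100 and how long it would take to read the H200's entire memory once at its quoted peak bandwidth. It uses only the 80GB, 141GB, and 4.8TB/sec figures quoted above. Treating 1TB as 1,000GB and assuming peak bandwidth is actually sustained are simplifications of ours, so read the result as a rough lower bound rather than a measured number.

```python
# Figures quoted in the article.
h100_capacity_gb = 80        # HBM3 on the H100
h200_capacity_gb = 141       # HBM3e on the H200
h200_bandwidth_tb_s = 4.8    # H200 peak memory bandwidth

# Fractional capacity increase of the H200 over the H100.
capacity_uplift = h200_capacity_gb / h100_capacity_gb - 1

# Time to stream the full 141GB once at peak bandwidth.
# Assumption: 1 TB = 1,000 GB, and peak bandwidth is fully sustained.
h200_bandwidth_gb_s = h200_bandwidth_tb_s * 1000
sweep_ms = h200_capacity_gb / h200_bandwidth_gb_s * 1000

print(f"H200 capacity uplift over H100: {capacity_uplift:.1%}")
print(f"Full-memory read at peak bandwidth: {sweep_ms:.1f} ms")
```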

Biz.Chosun reported: "According to the industry on the 26th, SK Hynix and Micron are known to have each received between 700 billion and 1 trillion won in advance payments from NVIDIA to supply cutting-edge memory products".

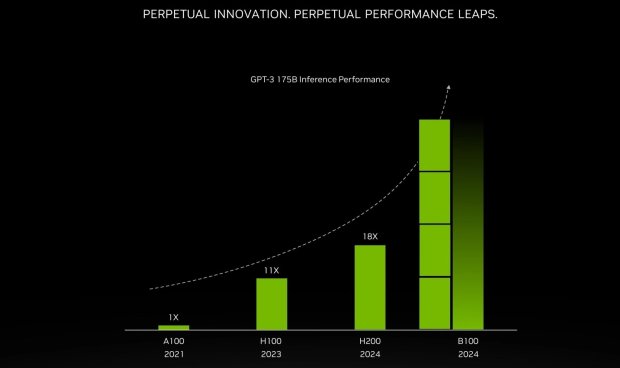

NVIDIA GPT-3 175B inference performance, including B100 AI GPU (source: NVIDIA)

"NVIDIA's large advance payment led to investment actions by memory semiconductor companies that were struggling to expand HBM production capacity. In particular, SK Hynix, the largest supplier, is known to be planning to invest the advance payment received from NVIDIA intensively in expanding TSV facilities, which are holding back HBM production capacity".

"This is evidenced by the fact that work related to the establishment of a new TSV line was carried out smoothly in the third quarter of last year. Likewise, Micron's investment in TSV facilities is expected to receive a boost. Meanwhile, Samsung Electronics is also known to have recently completed HBM3 and HBM3E product suitability tests with NVIDIA and signed a supply contract".

NVIDIA is also expecting big things from the world of AI, where the company plans on making $300 billion on AI sales alone in 2027, which is a big driving reason behind securing the latest in HBM3e memory technology for the future of its AI GPUs. SK hynix, Samsung, and Micron are all making HBM memory as fast as possible to keep up with insatiable demand from NVIDIA, and now from AMD, Intel, and other AI GPU makers.