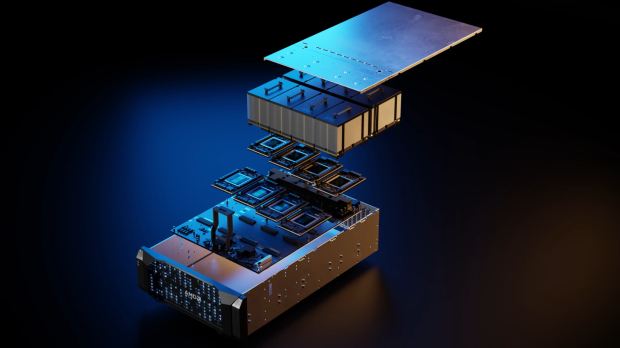

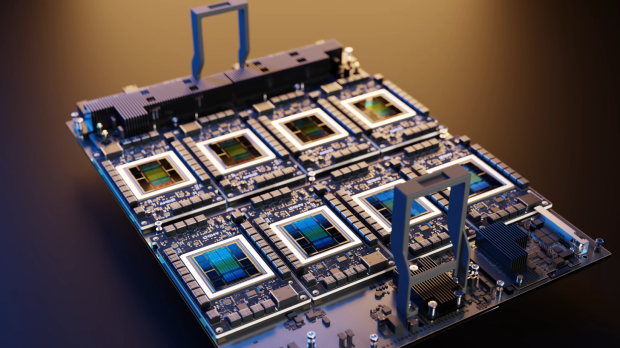

AMD has officially launched the Instinct MI300X, its new flagship AI accelerator, taking the AI GPU battle straight to NVIDIA's door with what AMD says is up to 60% faster performance than NVIDIA's H100 AI GPU.

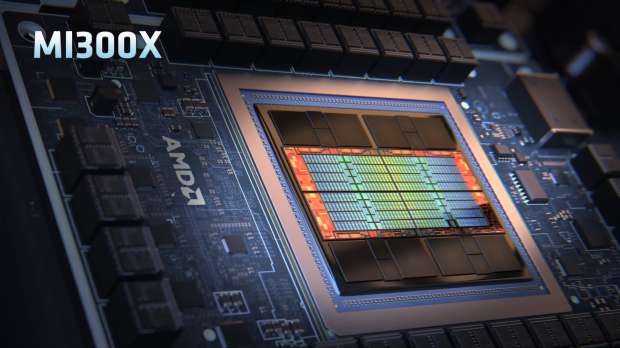

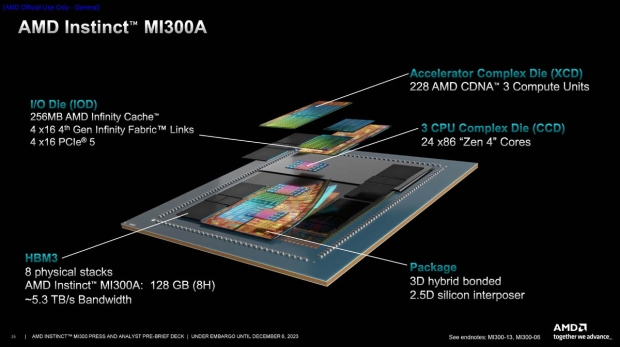

AMD's new Instinct MI300X is a technological marvel, using chiplets and TSMC's advanced packaging technologies to build the new AI GPU. It is based on the new CDNA 3 architecture and blends 5nm and 6nm IP for an insane 153 billion transistors in total.

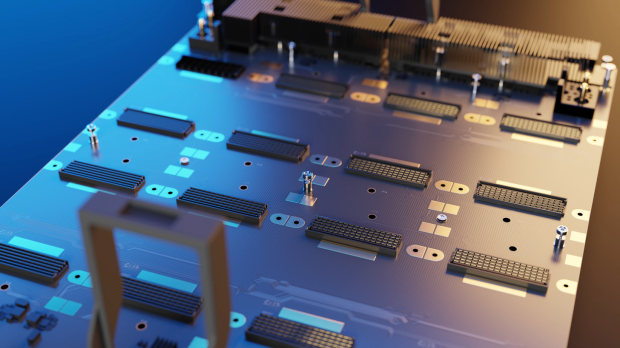

Inside, the main interposer is laid out with a passive die that houses the interconnect layer, using AMD's 4th Gen Infinity Fabric. The interposer carries a total of 28 dies: 8 x HBM3 packages, 16 dummy dies between the HBM packages, and 4 active dies, each of which features 2 compute dies.
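As a quick sanity check, the die count above can be tallied in a few lines of Python. This is only a sketch of the article's arithmetic; the dictionary labels are our own illustrative names, not AMD terminology.

```python
# Die inventory for the MI300X interposer, using the counts quoted above.
# The key names are illustrative labels, not official AMD terminology.
PACKAGE_DIES = {
    "hbm3_stacks": 8,
    "dummy_dies": 16,       # structural spacers between the HBM stacks
    "active_base_dies": 4,  # each one carries two compute dies
}
COMPUTE_DIES_PER_ACTIVE_DIE = 2

# Dies sitting on the interposer itself
total_dies = sum(PACKAGE_DIES.values())
# Compute dies carried by the active dies
compute_dies = PACKAGE_DIES["active_base_dies"] * COMPUTE_DIES_PER_ACTIVE_DIE

print(f"dies on interposer: {total_dies}")
print(f"compute dies: {compute_dies}")
```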

Each CDNA 3 compute die (AMD calls these XCDs, or Accelerator Complex Dies) features a total of 40 compute units, which works out to 2560 stream processors. With 8 compute dies in total, that's a grand total of 320 compute units and 20,480 stream processors. AMD wants the best yields possible (of course), so it is dialing things down a bit: the MI300X ships with 304 compute units enabled (38 CUs per compute die), for a total of 19,456 stream processors.
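The CU and stream processor math works out as follows. This sketch assumes 64 stream processors per CU, which is what 2560 stream processors across 40 CUs implies; the function name `shader_totals` is hypothetical.

```python
# Assumption: 64 stream processors per CU (2560 SPs / 40 CUs).
SP_PER_CU = 64
COMPUTE_DIES = 8

def shader_totals(cus_per_die: int) -> tuple[int, int]:
    """Return (total CUs, total stream processors) across all compute dies."""
    total_cus = cus_per_die * COMPUTE_DIES
    return total_cus, total_cus * SP_PER_CU

full = shader_totals(40)      # fully enabled silicon
shipping = shader_totals(38)  # MI300X as launched

print(f"full:     {full[0]} CUs, {full[1]:,} SPs")
print(f"shipping: {shipping[0]} CUs, {shipping[1]:,} SPs")
print(f"disabled: {full[0] - shipping[0]} CUs")
```

Dropping two CUs per compute die disables 16 CUs across the package, which is where the gap between 20,480 and 19,456 stream processors comes from.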

For me, it's the VRAM side of AI GPUs that I love the most... and AMD's new Instinct MI300X features a huge 50% increase in memory capacity over its predecessor, the Instinct MI250X (192GB of HBM3 versus 128GB of HBM2e). AMD is using 8 x HBM3 stacks; each stack is 12-Hi, built from 16Gb ICs with 2GB of capacity per IC, for 24GB per stack. 8 x 24GB = 192GB of HBM3 memory.
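Here's the HBM3 capacity math spelled out, using only the figures quoted above. The constant names are illustrative.

```python
GBIT_PER_IC = 16    # each DRAM IC is 16Gb
ICS_PER_STACK = 12  # 12-Hi stack
STACKS = 8          # HBM3 stacks on the MI300X
MI250X_GB = 128     # predecessor's capacity

gb_per_ic = GBIT_PER_IC // 8              # 8 bits per byte -> 2GB per IC
gb_per_stack = gb_per_ic * ICS_PER_STACK  # 24GB per stack
total_gb = gb_per_stack * STACKS          # 192GB in total
uplift = (total_gb - MI250X_GB) / MI250X_GB

print(f"{gb_per_stack}GB per stack, {total_gb}GB total, +{uplift:.0%} vs MI250X")
```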

AMD's new Instinct MI300X, with its 192GB of HBM3 memory, enjoys a huge 5.3TB/sec of memory bandwidth and 896GB/sec of Infinity Fabric bandwidth.

For comparison, NVIDIA's upcoming H200 AI GPU has 141GB of HBM3e memory with up to 4.8TB/sec of memory bandwidth, while the current H100 has only 80GB of HBM3 with up to 3.35TB/sec. AMD's new Instinct MI300X is definitely sitting very pretty against both.
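To put those numbers side by side, the sketch below divides the MI300X's quoted memory capacity and bandwidth by the H200's and H100's. These are ratios of peak spec-sheet figures only, not measured performance; `SPECS` and `ratio_vs` are hypothetical names.

```python
# (capacity in GB, peak memory bandwidth in TB/s), as quoted in the text above
SPECS = {
    "MI300X": (192, 5.3),
    "H200": (141, 4.8),
    "H100": (80, 3.35),
}

def ratio_vs(base: str, other: str) -> tuple[float, float]:
    """Capacity and bandwidth of `base` divided by those of `other`."""
    (cap_a, bw_a), (cap_b, bw_b) = SPECS[base], SPECS[other]
    return cap_a / cap_b, bw_a / bw_b

for rival in ("H200", "H100"):
    cap, bw = ratio_vs("MI300X", rival)
    print(f"MI300X vs {rival}: {cap:.2f}x capacity, {bw:.2f}x bandwidth")
```

With these inputs, the MI300X comes out at 2.4x the H100's memory capacity and roughly 1.58x its bandwidth; against the H200 the gap narrows to about 1.36x capacity and 1.10x bandwidth.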

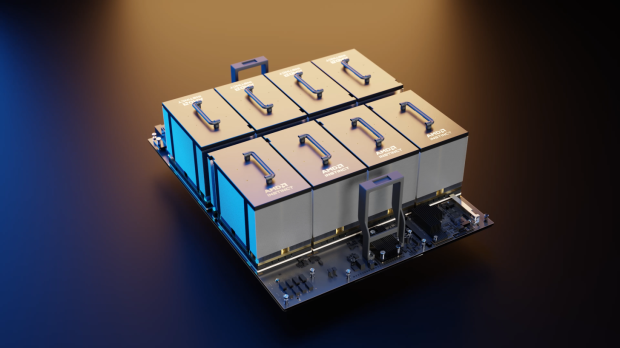

AMD Chair and CEO Dr. Lisa Su said: "AI is the future of computing and AMD is uniquely positioned to power the end-to-end infrastructure that will define this AI era, from massive cloud installations to enterprise clusters and AI-enabled intelligent embedded devices and PCs".

She continued: "We are seeing very strong demand for our new Instinct MI300 GPUs, which are the highest-performance accelerators in the world for generative AI. We are also building significant momentum for our data center AI solutions with the largest cloud companies, the industry's top server providers, and the most innovative AI startups, who we are working closely with to rapidly bring Instinct MI300 solutions to market that will dramatically accelerate the pace of innovation across the entire AI ecosystem."

AI GPU VRAM comparison:

- Instinct MI300X - 192GB HBM3 @ up to 5.3TB/sec

- Gaudi 3 - 144GB HBM3 @ up to 4.4TB/sec

- H200 - 141GB HBM3e @ up to 4.8TB/sec

- MI300A - 128GB HBM3 @ up to 5.3TB/sec

- H100 SXM - 80GB HBM3 @ up to 3.35TB/sec

- MI250X - 128GB HBM2e @ up to 3.2TB/sec

- Gaudi 2 - 96GB HBM2e @ up to 2.5TB/sec

What's next? NVIDIA has its next-gen Blackwell GPU architecture and B100 AI GPUs coming out in 2024, and they should run rings around everything available right now, as well as what's coming soon, including AMD's new Instinct MI300X and NVIDIA's H200. For now, though, AMD deserves a lot of praise for its new AI accelerators.

AMD has stepped up to the AI GPU plate with the Instinct MI300X, just as it did against Intel with EPYC versus Xeon and Ryzen versus Core. Now... if AMD could just do the same with Radeon versus NVIDIA's GeForce, we'd be laughing across the silicon board.