Details of NREL's new Kestrel supercomputer have been unveiled, with the National Renewable Energy Laboratory (NREL), a US Department of Energy (DOE) national laboratory, packing some serious horsepower into its new machine.

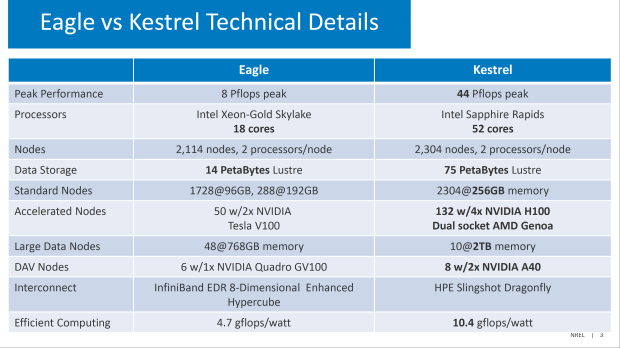

We're looking at a mix of silicon spanning Intel Sapphire Rapids Xeon CPUs, AMD EPYC "Genoa" CPUs, and NVIDIA H100 GPU accelerators, delivering 44 petaflops of peak compute performance, up from just 8 petaflops on the previous-gen Eagle supercomputer.

The CPU upgrades are big: Kestrel's Intel Sapphire Rapids Xeon CPUs have 52 cores and 104 threads each, up from the 18 cores and 36 threads of the Intel Xeon Gold (Skylake) CPUs in the Eagle supercomputer.
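
For a quick sense of the generational jump, here is a back-of-the-envelope comparison using only the figures quoted above (44 vs 8 petaflops peak, 52 vs 18 cores per CPU). The helper name `generational_uplift` is purely illustrative:

```python
def generational_uplift(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old

# Figures quoted in the article: peak petaflops and cores per CPU socket.
PEAK_PF_KESTREL, PEAK_PF_EAGLE = 44, 8
CORES_SAPPHIRE_RAPIDS, CORES_SKYLAKE = 52, 18

peak_ratio = generational_uplift(PEAK_PF_KESTREL, PEAK_PF_EAGLE)
core_ratio = generational_uplift(CORES_SAPPHIRE_RAPIDS, CORES_SKYLAKE)

print(f"Peak compute: {peak_ratio:.1f}x")   # Peak compute: 5.5x
print(f"Cores per CPU: {core_ratio:.2f}x")  # Cores per CPU: 2.89x
```

In other words, peak compute grows about twice as fast as per-socket core count, with the rest coming from more nodes and the new GPU partition.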

There are 2304 standard nodes, each with 2 processors in a dual-socket configuration, for a total of 4608 Intel Sapphire Rapids-SP CPUs, an insane 239,616 cores, and an even-more-insane 479,232 threads... yeah, you're reading those numbers right.
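
Those totals fall straight out of the node count. Here is a minimal sketch of the multiplication, assuming two hardware threads per core (two-way Hyper-Threading, which is what the quoted thread total implies):

```python
STANDARD_NODES = 2304
SOCKETS_PER_NODE = 2       # dual-socket configuration
CORES_PER_CPU = 52         # Sapphire Rapids-SP, per the article
THREADS_PER_CORE = 2       # assumption: two-way SMT (Hyper-Threading) enabled

cpus = STANDARD_NODES * SOCKETS_PER_NODE
cores = cpus * CORES_PER_CPU
threads = cores * THREADS_PER_CORE

print(f"{cpus:,} CPUs, {cores:,} cores, {threads:,} threads")
# 4,608 CPUs, 239,616 cores, 479,232 threads
```

That works out to 104 threads per CPU.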

Storage comes in at 75PB (petabytes) of Lustre, while each of the 2304 standard nodes will have 256GB of DDR5 memory, giving the Kestrel supercomputer a total of roughly 560TB of RAM.
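
Multiplying out the per-node memory gives a rough check on the system-wide figure. This sketch assumes every standard node carries the same 256GB and uses the binary 1 TB = 1,024 GB convention commonly applied to memory sizes:

```python
STANDARD_NODES = 2304
DDR5_PER_NODE_GB = 256      # per the article; assumed uniform across nodes
GB_PER_TB = 1024            # assumption: binary convention for memory sizes

total_gb = STANDARD_NODES * DDR5_PER_NODE_GB
total_tb = total_gb / GB_PER_TB

print(f"{total_gb:,} GB = {total_tb:,.0f} TB")  # 589,824 GB = 576 TB
```

The result lands in the hundreds of terabytes, the same order of magnitude as the quoted total, rather than in petabytes.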

There will also be 132 accelerated nodes, each with 4 x NVIDIA H100 GPU accelerators based on NVIDIA's latest Hopper GPU architecture, paired with a dual-socket AMD EPYC "Genoa" CPU configuration. That adds up to a total of 528 x NVIDIA H100 GPUs and 264 x AMD EPYC "Genoa" CPUs inside the accelerated nodes.
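
The accelerated-partition counts follow the same pattern. A small sketch using only the per-node figures quoted above:

```python
ACCEL_NODES = 132
GPUS_PER_NODE = 4      # NVIDIA H100 accelerators per node
CPUS_PER_NODE = 2      # dual-socket AMD EPYC "Genoa"

total_gpus = ACCEL_NODES * GPUS_PER_NODE
total_cpus = ACCEL_NODES * CPUS_PER_NODE
gpus_per_cpu = GPUS_PER_NODE // CPUS_PER_NODE   # GPUs hanging off each socket

print(total_gpus, total_cpus, gpus_per_cpu)  # 528 264 2
```

Each Genoa socket therefore feeds two H100s, a common balance for GPU-heavy HPC nodes.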

Tying it all together is HPE's Slingshot interconnect, which offers:

- Industry-leading performance and scalability

- 100GbE and 200GbE interfaces

- High-radix, 64-port switch with 12.8 Tb/s of bandwidth

- Scalability to >250,000 host ports with a maximum of 3 hops

- Innovative hardware congestion management, adaptive routing, and quality of service

- Ethernet standards and protocols, plus optimized HPC functionality

- Link level retry and low-latency forward error correction

- Standardized, open API management interfaces
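
The 12.8 Tb/s switch figure is consistent with all 64 ports running at the top 200 Gb/s rate listed above. Here is a minimal sketch of that arithmetic, assuming the figure counts each port once at 200 Gb/s (one direction):

```python
SWITCH_PORTS = 64
PORT_SPEED_GBPS = 200   # assumption: every port running at the 200GbE rate

aggregate_gbps = SWITCH_PORTS * PORT_SPEED_GBPS
aggregate_tbps = aggregate_gbps / 1000   # 1 Tb/s = 1,000 Gb/s

print(f"{aggregate_tbps} Tb/s")  # 12.8 Tb/s
```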

If you want to know the total CUDA core count, we're looking at a bonkers 8,921,088 CUDA cores (H100 SXM5), while on the CPU side the AMD EPYC "Genoa" processors contribute 25,344 Zen 4-based cores. These are joined by 42TB of HBM3 memory and 20TB of RAM. There are also 8 data analysis and visualization (DAV) nodes, each with up to 16 NVIDIA A40 GPUs, all connected over the HPE Slingshot interconnect in a Dragonfly topology.
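
Those headline numbers can be reproduced from per-device specs. Note that the per-device figures below are not stated in the article: 16,896 CUDA cores and 80GB of HBM3 per H100 SXM5, and 96 cores per Genoa CPU, are assumptions that happen to reproduce its totals:

```python
H100_GPUS = 528
GENOA_CPUS = 264
CUDA_CORES_PER_H100_SXM5 = 16_896   # assumption: NVIDIA's H100 SXM5 spec
CORES_PER_GENOA = 96                # assumption: 96-core EPYC 9004-series part
HBM3_PER_H100_GB = 80               # assumption: 80GB H100 SXM5 variant

cuda_cores = H100_GPUS * CUDA_CORES_PER_H100_SXM5
zen4_cores = GENOA_CPUS * CORES_PER_GENOA
hbm3_tb = H100_GPUS * HBM3_PER_H100_GB / 1000   # decimal TB

print(f"{cuda_cores:,} CUDA cores")   # 8,921,088 CUDA cores
print(f"{zen4_cores:,} Zen 4 cores")  # 25,344 Zen 4 cores
print(f"{hbm3_tb:.1f} TB of HBM3")    # 42.2 TB of HBM3
```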