Artificial Intelligence News - Page 1

X users will now get AI-generated news summaries

X has rolled out an update that enables its Premium users to receive AI-generated summaries of news and topics trending on the platform.

The new feature, called "Stories on X," is now available to Premium subscribers. According to a post from the company's engineering account, it appears within the Explore tab and is curated entirely by X's Grok AI tool. So, how does it work? Grok identifies the most popular content trending on X, which can be news stories or any public discussion that has gained a lot of attention.

Grok then digests the popular content and generates a summary. Users exploring the new feature have posted screenshots to X showcasing the design and layout, with some screenshots showing AI-generated summaries for stories such as Apple's earnings report, aid to Ukraine, and "Musk, Experts Debate National Debt," which was a summary of an online discussion between Musk and other prominent X users.

SK hynix expects HBM memory chip revenues of over $10 billion in 2024

SK hynix has had an absolutely stellar last 12 months riding the ever-growing AI wave, with the South Korean memory giant expecting over $10 billion in revenue from HBM alone by the end of 2024.

The news comes from South Korean outlet TheElec, which reports that SK hynix has sold out its 2024 supply of HBM memory and is already close to selling out its 2025 supply. NVIDIA's current H100 uses HBM3, while its new H200 and next-gen B200 AI GPUs both use HBM3E memory, provided by SK hynix.

SK hynix is staying ahead of its HBM competitors Samsung and Micron, with plans to provide samples of its new 12-stack HBM3E this month. Mass production of the new HBM memory chips is expected in Q3 2024, according to SK hynix CEO Kwak Noh-jung at a press conference on Thursday.

NVIDIA DGX GB200 AI servers expected to sell 40,000 servers in 2025, mass production in 2H 2024

NVIDIA's new DGX GB200 AI server is expected to enter mass production in the second half of this year, with the volume expected to hit 40,000 units in 2025.

NVIDIA and Quanta are the two suppliers of NVL72 and NVL36 cabinets, respectively, with the NVL72 packing 72 GPUs and 36 Grace CPUs. Each of the AI server cabinets costs 96 million NTD (around $3 million USD).

The new NVIDIA DGX NVL72 is the AI server with the most computing power and, thus, the highest unit price. Inside, the DGX NVL72 features 72 built-in Blackwell-based B200 AI GPUs and 36 Grace CPUs (18 servers in total, each with dual Grace CPUs and 4 x B200 AI GPUs) with 9 switches. The entire cabinet is designed in-house by NVIDIA and cannot be modified; it is 100% made, tested, and provided by NVIDIA.

SK hynix says most of its HBM for 2025 is sold out already, 16-Hi HBM4 coming in 2028

SK hynix has announced that almost all of its HBM volume for 2025 has been sold out, as demand for AI GPUs continues to skyrocket.

During a recent press conference, the South Korean memory giant announced plans to invest in its new M15X fab in Cheongju and the Yongin Semiconductor Cluster in Korea, along with advanced packaging plants in the US.

SK hynix selling out most of its 2025 HBM volume is pretty crazy, as we're not even halfway through the year. NVIDIA's beefed-up H200 AI GPU with HBM3E isn't quite here yet, and its next-gen Blackwell B200 AI GPUs with HBM3E will be launching later this year... yet SK hynix is selling HBM like hotcakes.

NVIDIA ChatRTX updated: new models, voice recognition, media search, and more with AI

NVIDIA has just updated its ChatRTX AI chatbot with support for new LLMs, new media search abilities, and speech recognition technology.

The latest version of ChatRTX supports more LLMs including Gemma, the latest open, local LLM trained by Google. Gemma was developed from the same research and technology that Google used to create its Gemini models, and is built for responsible AI development.

ChatRTX now supports ChatGLM3, an open, bilingual (English and Chinese) LLM based on the general language model framework. The updated version of ChatRTX also lets users interact with image data through Contrastive Language-Image Pre-training (CLIP) from OpenAI. CLIP is a neural network that, as NVIDIA explains, learns visual concepts from natural language supervision through training and refinement: a model that recognizes what the AI is "seeing" in image collections.

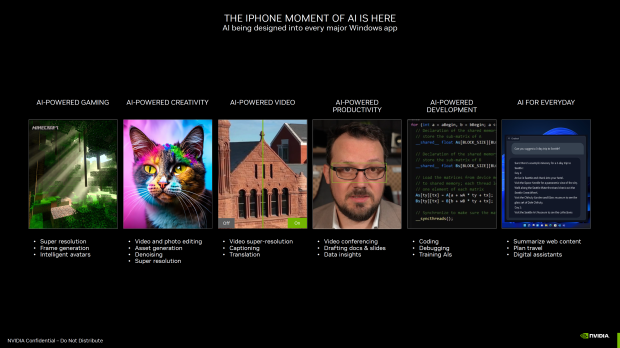

NVIDIA says RTX is the 'premium' AI PC platform, NPUs are for 'basic' AI PCs

NVIDIA isn't going to be left out of the coming AI PC revolution. With AMD and Intel already releasing NPU-powered processors for AI workloads, NVIDIA has just announced the "Premium AI PC" with RTX hardware, compared to the "Basic AI PC" with an NPU on the CPU.

NVIDIA claims that a Premium AI PC with an RTX GPU can provide gigantic performance uplifts for AI workloads, delivering between 100 and a whopping 1300+ TOPS (INT8 + FP8 workloads) compared to just 10-45 TOPS on current and next-gen CPUs with beefed-up NPUs.

Intel and AMD will combine the TOPS performance of the CPU + GPU + NPU, because if they used the NPU alone, the AI power would be nowhere near as great. The more the NPU is used, the more resources are taken away from other key parts of the chip: ya know, like the CPU and GPU.

ChatGPT AI now has a memory - for subscribers at least - but the more paranoid can turn it off

OpenAI introduced the 'memory' feature for its ChatGPT AI back in February 2024, but now that functionality is available to all users - well, paying subscribers, anyway.

To recap, this feature gives ChatGPT the ability to remember elements of your previous chats with the AI.

It can then refer back to those memories to make future queries easier or more convenient, while making chats with the AI feel more human-like.

Microsoft official says a 'new kind of digital species' has been created

The boss of Microsoft's AI division has said that people really need to change their perspective on the emergence of artificial intelligence, and that a different way of thinking about the new technology is to consider it a new "species".

DeepMind co-founder and current CEO of Microsoft AI, Mustafa Suleyman, gave a TED talk last week where he explained his vision for a world that will be mostly driven by artificial intelligence. Suleyman told the crowd that it may be useful to think of AI as "something like a new digital species", with his prediction being that humans will come to view AI as "digital companions" and "new partners in the journeys of all our lives."

Suleyman's idea is that artificial intelligence will become so ingrained in our everyday lives, much like smartphones have, that we will begin to consider AI-powered pieces of tech, such as Siri, more of a virtual being than a tool. This change in perspective will be enabled by the AI's language model, which will be designed to allow much more fluent interaction and response.

China's black market price of NVIDIA's H100 AI GPU has plummeted, H200 is coming soon

The price of NVIDIA's Hopper H100 AI GPU in the Chinese black market is coming down "rapidly," with spot prices falling more than 10% in the short term.

According to a new report from UDN, the market is watching the impact of this wave of selling on overall supply and demand, and whether the normal market for AI GPUs will be affected. NVIDIA's new H200 AI GPU, upgraded with HBM3E memory, is about to be released, and the price of AI servers with H100 AI GPUs on the black market in mainland China has plummeted in anticipation.

As the old H100 AI GPUs are replaced by newer H200 AI GPUs, scalpers who previously held lots of H100 stock are trying to get rid of it all, sometimes in large quantities, which is quickly bringing the price down on the black market.

NVIDIA rumored to build new R&D center in Taiwan, after first AI R&D center is a huge success

NVIDIA opened its first Asia-based AI R&D center in Taiwan two years ago, investing NT$24.3 billion ($715 million USD or so) and employing 400 people there. But now, the company is reportedly considering a second R&D center in Taiwan.

According to a new post by the China Times, the Technology Department of the Ministry of Economic Affairs said on April 26 that NVIDIA's current research and development (R&D) is "going smoothly" and it will "assist our manufacturers in AI application development". NVIDIA is reportedly happy with the quality of talent in Taiwan, and is considering setting up a second R&D center there.

The first R&D center that NVIDIA built in Taiwan mainly works on AI chip research and development, as well as GPUs. The R&D center also helped build Taiwan's biggest supercomputer, Taipei-1, with the Taiwan government providing a NT$6.7 billion ($205 million USD or so) subsidy for NVIDIA's first R&D center project.