The release of ChatGPT has shaken up industries around the world and kicked off a new race in the technology market, one currently dominated by OpenAI's hugely popular chatbot.

ChatGPT was quickly adopted by millions of users after its debut, and it also drew major backers such as Microsoft, which has invested more than $10 billion in OpenAI and, in return, gained access to GPT, the technology that powers the chatbot. Many other companies, including Google, Apple, Meta, Baidu, and Amazon, are developing their own language models, and all of them are expected to eventually roll out their own AI features in their products.

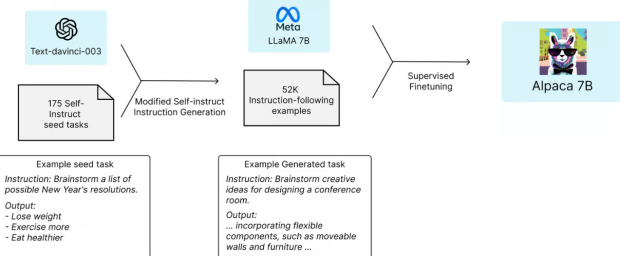

Stanford scientists set out to replicate the capabilities of OpenAI's GPT language model, starting from Meta's LLaMA 7B, the smallest of the LLaMA language models Meta made available to researchers. Although LLaMA 7B was trained on roughly a trillion "tokens" (small chunks of text), it lagged well behind GPT in usefulness. The reason is that, while the model had absorbed an enormous amount of text, it had not been trained to answer questions about that text, a stage known as 'post-training'.

As New Atlas puts it, "It's one thing to have read a billion books, but another to have chewed through large quantities of question-and-answer conversation pairs that teach these AIs what their actual job will be."

This is one of the key differences between the model the Stanford scientists started with and OpenAI's chatbot: OpenAI has spent a great deal of effort training its chatbot on which responses are correct, which makes its answers far more useful.

To close that gap, the Stanford team took 175 human-written self-instruct seed tasks and used OpenAI's API (the text-davinci-003 model) to expand them into 52,000 sample instruction-following conversations. Generating this data cost less than $500, the majority of the total cost. Fine-tuning LLaMA 7B on those conversations then took about three hours and less than $100 of cloud compute, for a total of roughly $600. The resulting model, named Alpaca 7B, was compared against OpenAI's text-davinci-003, a GPT-3.5 model, in a blind pairwise comparison covering tasks such as email writing, social media, and productivity tools. Alpaca won 90 of the comparisons, and text-davinci-003 won 89.

The researchers said they were quite surprised by the results, given the model's small size and the modest amount of instruction data it was fed. They added that further interactive testing indicated Alpaca 7B often behaves similarly to GPT-3.5 (text-davinci-003) on a diverse set of inputs. However, the team acknowledges that its evaluation may be limited in scale and diversity.

The researchers also note that Alpaca 7B exhibits "several common deficiencies of language models, including hallucination, toxicity, and stereotypes," with hallucination in particular appearing to be a common failure mode, even compared to OpenAI's model.
