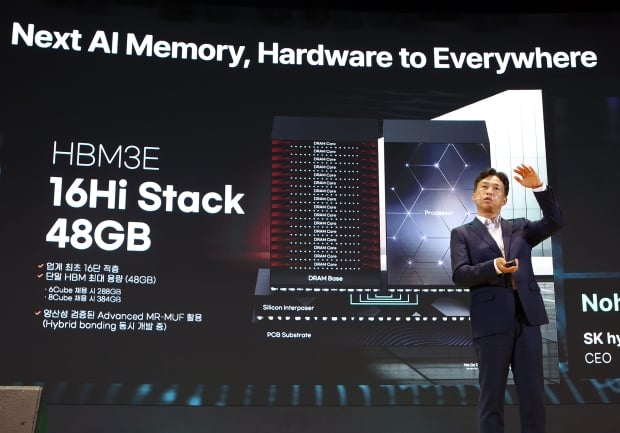

SK hynix has just unveiled the world's first 16-Hi HBM3E memory, which will arrive in up to 48GB capacities per stack.

SK hynix CEO Kwak Noh-Jung announced the new 16-Hi HBM3E memory at the SK AI Summit 2024 event, unveiling it first with samples of 48GB capacity. This is the highest capacity and the highest number of layers in the HBM industry to date.
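For a rough sense of what those numbers imply, here is a back-of-the-envelope sketch of the per-layer capacity for a 48GB, 16-layer stack. It assumes every DRAM layer has the same capacity, and the 12-layer figure at the end assumes a hypothetical stack built from the same dies; neither assumption comes from the announcement itself, and the function names are purely illustrative.

```python
# Figures taken from the announcement.
STACK_CAPACITY_GB = 48
LAYERS = 16


def per_layer_gb(stack_gb: float, layers: int) -> float:
    """Capacity of one DRAM layer, assuming all layers are identical."""
    return stack_gb / layers


def stack_capacity_gb(layer_gb: float, layers: int) -> float:
    """Total stack capacity for a given per-layer capacity and layer count."""
    return layer_gb * layers


layer_gb = per_layer_gb(STACK_CAPACITY_GB, LAYERS)   # gigabytes per layer
layer_gbit = layer_gb * 8                            # same value in gigabits

# Assumption: a 12-layer stack using the same per-layer capacity.
twelve_high_gb = stack_capacity_gb(layer_gb, 12)

print(f"Per layer: {layer_gb} GB ({layer_gbit} Gb)")
print(f"12-layer stack with the same dies: {twelve_high_gb} GB")
```

Under those assumptions, each layer works out to 3GB (24Gb), and the same dies in a 12-layer stack would total 36GB.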

This comes hot on the heels of a story from yesterday, in which NVIDIA CEO Jensen Huang reportedly called SK hynix leadership and asked them to bring forward supply of its next-gen HBM4 memory by 6 months. According to SK hynix, the market for 16-Hi HBM is "expected to open up from the HBM4 generation", but the company has been developing 48GB 16-Hi HBM3E memory in a "bid to secure technological stability", with plans to provide samples to customers in early 2025.

SK hynix produces its new 16-Hi HBM3E memory with its Advanced MR-MUF process, and is also developing hybrid bonding technology "as a backup".

Mr. Kwak's comments, as provided by SK hynix:

- The market for 16-high HBM is expected to open up from the HBM4 generation, but SK hynix has been developing 48GB 16-high HBM3E in a bid to secure technological stability and plans to provide samples to customers early next year.

- SK hynix applied the Advanced MR-MUF process, which enabled mass production of 12-high products, to produce 16-high HBM3E, while also developing hybrid bonding technology as a backup.

- 16-high products come with performance improvements of 18% in training and 32% in inference vs 12-high products. With the market for AI accelerators for inference expected to expand, 16-high products are forecast to help the company solidify its leadership in AI memory in the future.

- SK hynix is also developing an LPCAMM2 module for PCs and data centers, as well as 1cnm-based LPDDR5 and LPDDR6, taking full advantage of its competitiveness in low-power and high-performance products.

- The company is also readying a PCIe 6th generation SSD, a high-capacity QLC-based eSSD, and UFS 5.0.

- SK hynix plans to adopt a logic process on the base die from the HBM4 generation onward, through collaboration with a top global logic foundry, to provide customers with the best products.

- Customized HBM will be a product with optimized performance that reflects various customer demands for capacity, bandwidth, and function and is expected to pave the way for a new paradigm in AI memory.

- SK hynix is also developing technology that adds computational functions to memory to overcome the so-called memory wall. Technologies such as Processing Near Memory (PNM), Processing in Memory (PIM), and Computational Storage, essential to processing enormous amounts of data in the future, will be a challenge that transforms the structure of next-generation AI systems and the future of the AI industry.