TSMC chairman Mark Liu and TSMC chief scientist Philip Wong have co-written a new piece about the road towards a 1-trillion transistor GPU.

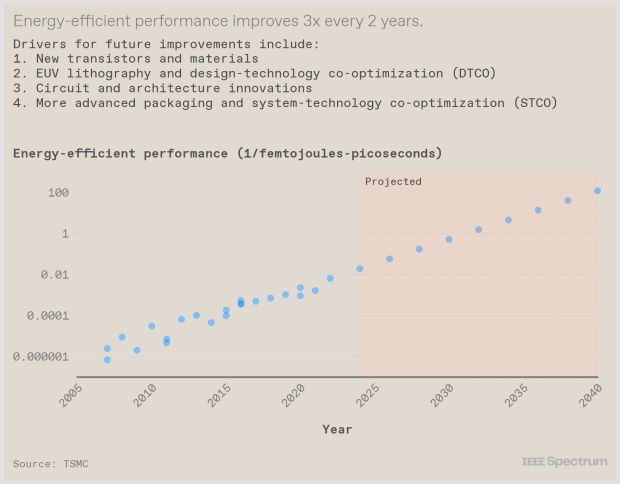

The two Taiwan Semiconductor Manufacturing Company (TSMC) executives pointed to the importance of advanced semiconductor packaging (CoWoS, SoIC, etc.) and the vital role silicon photonics will play in boosting data transfer speeds between chips. They wrote that if the AI revolution is to continue at its current pace, "it's going to need even more from the semiconductor industry."

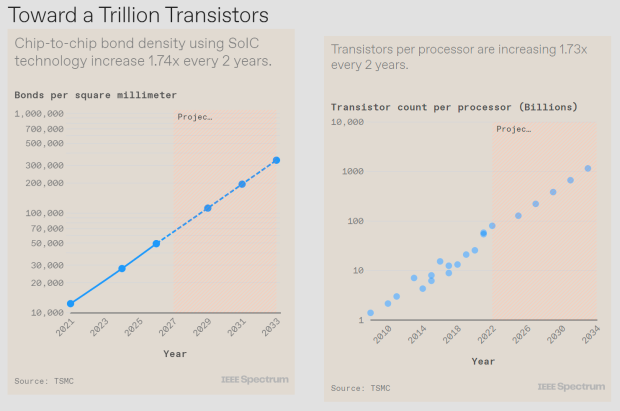

Within 10 years, the TSMC executives said we'll need a 1-trillion-transistor GPU, which is roughly 10x as many transistors as today's typical reticle-sized GPU chip at around 100 billion. NVIDIA's new Blackwell B200 AI GPU has 208 billion transistors in a chiplet design that pairs two GPU dies with 104 billion transistors each. 1 trillion transistors will be incredible to see roll out in front of our eyes over the next decade.

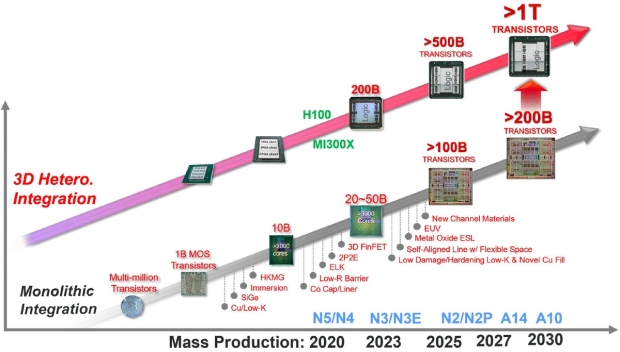

Ever since the invention of the integrated circuit, semiconductor technology has focused on scaling down feature size so that companies can cram as many transistors as possible into a thumbnail-sized chip. Today, integration hasn't stopped, and companies like TSMC have moved from 2D scaling to 3D system integration: putting as many chips as possible into a tightly integrated, massively interconnected system.

TSMC's Chip-on-Wafer-on-Substrate (CoWoS) advanced packaging technology can fit 6 reticle fields' worth of compute chips, as well as 12 HBM chips. Lithographic chipmaking tools are designed to make ICs (integrated circuits) that are no bigger than around 800 square millimeters, a limitation referred to as the "reticle limit".

The TSMC executives explain that they can now extend the size of the integrated system "beyond lithography's reticle limit" by attaching several chips onto a larger interposer, which is a piece of silicon into which interconnects are built. This allows TSMC and other advanced packaging companies to integrate a system that contains a much larger number of devices than is possible with a single chip.

NVIDIA's new Blackwell B200 AI GPU is the prime example of this, with 208 billion transistors in total -- fabbed at TSMC on a custom 4NP process node -- in a dual-chiplet design that features two reticle-sized GPU chips. NVIDIA has shifted away from a monolithic GPU towards a chiplet-based design, with 104 billion transistors on each of the B200's two dies.
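To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only figures quoted in this article and assumes, purely for illustration, that each B200 die fills one full ~800 mm² reticle field and that transistor density stays at today's level. Neither assumption comes from TSMC or NVIDIA, and the variable names are our own.

```python
import math

# Figures quoted in the article
B200_TOTAL_TRANSISTORS = 208_000_000_000  # whole B200 package
B200_DIES = 2                             # two reticle-sized GPU dies
RETICLE_LIMIT_MM2 = 800                   # approximate reticle limit
COWOS_RETICLE_FIELDS = 6                  # compute area CoWoS can hold
TARGET_TRANSISTORS = 1_000_000_000_000    # the 1-trillion-transistor goal

# Transistors on each B200 die
per_die = B200_TOTAL_TRANSISTORS // B200_DIES

# ASSUMPTION: one die occupies exactly one full reticle field
density_per_mm2 = per_die / RETICLE_LIMIT_MM2

# ASSUMPTION: density stays at today's level (no node shrink)
cowos_capacity = per_die * COWOS_RETICLE_FIELDS
dies_needed = math.ceil(TARGET_TRANSISTORS / per_die)

print(f"Transistors per die: {per_die / 1e9:.0f} billion")
print(f"Implied density: {density_per_mm2 / 1e6:.0f} million per mm^2")
print(f"Six reticle fields: {cowos_capacity / 1e9:.0f} billion transistors")
print(f"Dies needed for 1 trillion: {dies_needed}")
```

Under those assumptions, six reticle fields of B200-class silicon would hold about 624 billion transistors, and it would take 10 such dies to pass the 1 trillion mark. That gap is why the executives lean on denser process nodes and 3D stacking in addition to bigger interposers.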

GPUs aren't the only chips that matter for the future of the semiconductor industry, and especially the future of AI: HBM is just as important. HBM is a stack of vertically interconnected DRAM chips on top of a control logic IC. It uses vertical interconnects called through-silicon vias (TSVs) to get signals through each chip, and solder bumps to form the connections between the memory chips. The world's best high-performance AI GPUs all use HBM exclusively, with HBM3 and now HBM3E memory.

TSMC explains that 3D SoIC technology will provide a "bumpless alternative" to today's conventional HBM technology, delivering "far denser vertical interconnection between the stacked chips". We've recently seen 12-layer HBM3E memory teased, and HBM test structures with 12 stacked layers of chips are using hybrid bonding, a copper-to-copper connection with a higher density than solder bumps can provide. Bonded at low temperatures on top of a larger base logic chip, this memory system has a total thickness of just 600 µm.

The executives from TSMC explained how the industry gets to 1-trillion-transistor GPUs: "As noted already, typical GPU chips used for AI training have already reached the reticle field limit. And their transistor count is about 100 billion devices. The continuation of the trend of increasing transistor count will require multiple chips, interconnected with 2.5D or 3D integration, to perform the computation. The integration of multiple chips, either by CoWoS or SoIC and related advanced packaging technologies, allows for a much larger total transistor count per system than can be squeezed into a single chip. We forecast that within a decade a multichiplet GPU will have more than 1 trillion transistors."

They continued: "We'll need to link all these chiplets together in a 3D stack, but fortunately, industry has been able to rapidly scale down the pitch of vertical interconnects, increasing the density of connections. And there is plenty of room for more. We see no reason why the interconnect density can't grow by an order of magnitude, and even beyond."
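For a sense of the pace that forecast implies, the short Python sketch below works out the compound annual growth in transistor count needed to go from the B200's 208 billion to 1 trillion in 10 years. The starting point and the smooth year-over-year growth are our own simplifying assumptions, not something the TSMC executives state, and `implied_annual_growth` is a hypothetical helper written for this example.

```python
def implied_annual_growth(start: float, target: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to target."""
    return (target / start) ** (1 / years) - 1

# ASSUMPTION: start from the B200's 208 billion transistors and
# reach 1 trillion in exactly 10 years with steady annual growth.
rate = implied_annual_growth(208e9, 1e12, 10)

print(f"Implied growth in transistors per GPU: {rate:.1%} per year")
```

That works out to about 17 percent more transistors per GPU package each year, sustained for a decade.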