Introduction

16nm NAND is the smallest-lithography flash to hit the market so far. Typically, as lithography shrinks, you get some benefits and some unfortunate consequences. The benefits come in the form of lower cost to the consumer for the same capacity. The consequences typically come in the form of lower performance and reduced endurance.

We saw this scenario play out when Micron flash went from 25nm lithography to 20nm lithography. When 20nm IMFT flash first hit the market, its performance was downright abysmal; SSD prices came down dramatically, but so did performance. In its first generation, 20nm IMFT flash was easily outperformed by competing 19nm Toshiba flash.

Today, however, 20nm Micron flash is a more mature process, and has become an excellent performer, to the point of even challenging the best Toshiba flash for performance supremacy. Micron flash based SSDs are typically less expensive in comparison to their Toshiba based counterparts, but the Toshiba based drives usually have an edge in performance.

Crucial's 16nm flash based MX100 is basically their response to Samsung TLC flash based SSDs; moving to a 16nm lithography allows Crucial to compete with Samsung's EVO in terms of price. The question is: How will moving to a smaller lithography shake out performance-wise? This shrink is reminiscent of the 25nm to 20nm change that preceded it. Will we see a big performance hit like last time?

Well, there is only one way to find out; let's dive in and see!

PRICING: You can find Crucial's MX100 (512GB) for sale below. The prices listed are valid at the time of writing, but can change at any time. Click the link to see the very latest pricing for the best deal.

United States: The Crucial MX100 (512GB) retails for $209.99 at Amazon.

Canada: The Crucial MX100 (512GB) retails for CDN$224.99 at Amazon Canada.

Specifications, Drive Details, Test System Setup, Drive Properties, Pricing and Availability

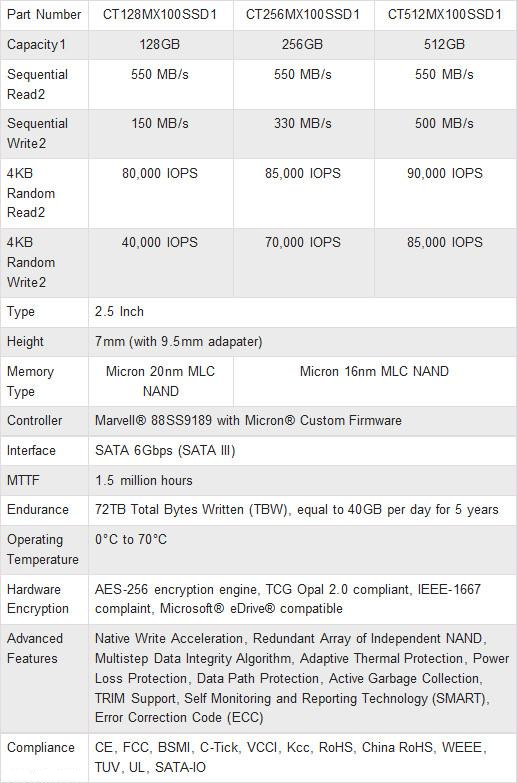

Specifications

Crucial's MX100 SATA III SSD is available in three capacities: 128GB, 256GB, and 512GB. Specifications list the 512GB MX100 SSD as capable of 550MB/s sequential reads and 500MB/s sequential writes. Random read/write speed is listed at 90,000/85,000 IOPS. Crucial's MX100 comes in a 2.5" x 7mm z-height form factor, and ships with a spacer should you need to increase the drive's thickness to 9.5mm.

The MX100 supports AES 256-bit hardware encryption, which meets TCG Opal 2.0 and IEEE-1667 standards. Microsoft's eDrive is also supported. Crucial backs the MX100 with an industry standard three-year warranty, with a 72 TBW limit.
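To put the 72 TBW limit in context, it helps to spread it across the three-year warranty. Here is a rough sketch, assuming decimal units (1TB = 1,000GB) and a 365-day year; the function name is ours, purely for illustration:

```python
def tbw_to_gb_per_day(tbw_terabytes, warranty_years):
    """Average host writes per day (in GB) that would use up the TBW
    rating exactly at the end of the warranty period."""
    total_gb = tbw_terabytes * 1000      # assumption: 1 TB = 1,000 GB
    total_days = warranty_years * 365    # assumption: leap days ignored
    return total_gb / total_days

daily_budget_gb = tbw_to_gb_per_day(72, 3)
print(f"{daily_budget_gb:.1f} GB/day")
```

Under those assumptions, you would need to write about 65.8GB every single day for three years to hit the limit.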

Because this is a RAID review, we are going to focus on performance rather than features. For a more in-depth look at the MX100 feature set, I will refer you to Chris Ramseyer's extensive review of Crucial's MX100 512GB SSD.

Drive Details - Crucial MX100 512GB SSD

Crucial packages their MX100 in an attractive blue and silver flip-top box. The drive is pictured on the top of the box.

The rear of the box lists the contents, although there is no mention of the included Acronis migration software.

The MX100 512GB ships with a download key for Acronis migration software, and a 7mm to 9.5mm black plastic spacer.

The top of the drive's enclosure is formed from a single sheet of aluminum. A manufacturer's sticker lists the drive's capacity, shipping firmware, model number, serial number, and various bits of other relevant information.

The bottom and sides of the drive's enclosure are formed from a single piece of cast aluminum. There is an attractive blue sticker centered on the bottom face of the drive's enclosure.

Here's what Crucial's MX100 512GB SSD looks like completely disassembled. There is a conductive thermal pad to wick heat from the drive's controller and DRAM package into the thick cast aluminum half of the drive's enclosure.

On this side of the PCB, there are eight Micron branded 16nm BGA NAND packages, one DRAM package, a row of small capacitors providing host power-loss protection, and Marvell's newest 88SS9189 flash processor.

There are an additional eight NAND packages on the opposite side of the PCB.

Test System Setup

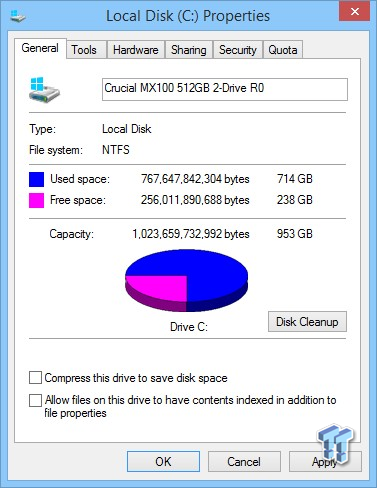

Drive Properties

The majority of our testing is performed with our test drive/array as our boot volume. Our boot volume is 75% full for all OS Disk "C" drive testing to mimic a typical consumer OS volume implementation. We are using 64k stripes for our two to three drive arrays, and 32k stripes for our four to six drive arrays. C-states and speed stepping are both disabled in our system's BIOS, the high performance power plan is enabled in Windows, write caching is enabled in the RST control panel, and Windows buffer flushing is disabled.

All of our testing includes charting the performance of a single drive, as well as RAID 0 arrays of our test subjects. We are utilizing Windows 8.1 64-bit for all of our testing.
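For readers new to RAID 0, the stripe size determines how consecutive chunks of the volume are dealt out across the member drives. The sketch below shows the textbook RAID 0 layout, not Intel RST's internal implementation; it assumes "64k" means 64 KiB, and the function name is hypothetical:

```python
def raid0_locate(logical_offset, stripe_size, num_drives):
    """Map a byte offset on a RAID 0 volume to
    (member drive index, byte offset on that drive)."""
    stripe_number = logical_offset // stripe_size
    drive_index = stripe_number % num_drives
    # Every full pass across all members advances each drive by one stripe.
    drive_offset = (stripe_number // num_drives) * stripe_size \
        + logical_offset % stripe_size
    return drive_index, drive_offset

STRIPE_64K = 64 * 1024  # assumption: 64k stripe = 65,536 bytes

# Two-drive array with 64k stripes, as used for our two-drive testing.
first = raid0_locate(0, STRIPE_64K, 2)
second = raid0_locate(STRIPE_64K, STRIPE_64K, 2)
third = raid0_locate(2 * STRIPE_64K + 4096, STRIPE_64K, 2)
print(first, second, third)
```

Any transfer larger than one stripe lands on more than one drive, which is where RAID 0 gets its sequential speed; small 4K transfers fit inside a single stripe and are serviced by one drive at a time.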

Synthetic Benchmarks - ATTO, Anvil Storage Utilities, CrystalDiskMark & AS SSD

ATTO

Version and / or Patch Used: 2.47

ATTO is a timeless benchmark used to provide manufacturers with data used for marketing storage products.

Sequential read transfers max out at 1.028 GB/s, and sequential write transfers max out at 0.968 GB/s.
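One way to read those numbers is as a scaling efficiency against the drive's rated sequential speeds. This is a rough sketch only: it assumes a two-drive array, treats 1.028 GB/s as 1,028 MB/s, and compares against the 550MB/s and 500MB/s spec-sheet figures rather than a measured single-drive ATTO run. The function name is ours:

```python
def scaling_efficiency(array_mb_s, single_drive_mb_s, num_drives):
    """Fraction of ideal RAID 0 scaling actually achieved."""
    return array_mb_s / (single_drive_mb_s * num_drives)

# Array results from ATTO, single-drive figures from the spec sheet.
read_eff = scaling_efficiency(1028, 550, 2)
write_eff = scaling_efficiency(968, 500, 2)
print(f"read: {read_eff:.1%}, write: {write_eff:.1%}")
```

By that yardstick, the array delivers roughly 93% of ideal scaling on reads and roughly 97% on writes.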

Sequential Write

Write performance is nearly identical to the M550, and second only to Samsung's 840 EVO.

Sequential Read

Read performance is not as strong as the M550, or most of the other arrays on our chart.

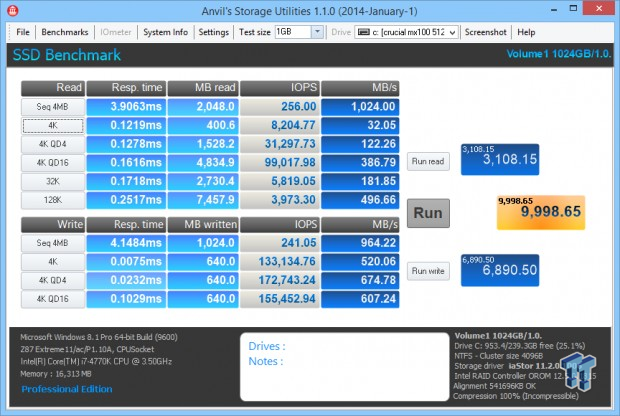

Anvil Storage Utilities

Version and / or Patch Used: RC6

Anvil's Storage Utilities is a storage benchmark designed to measure the storage performance of SSDs. The Standard Storage Benchmark performs a series of tests; you can choose to run a full test, or just the read or write test, or you can run a single test (i.e. 4k QD16).

10,000 points is a very good score for a two drive array running on Windows 8.1.

Read IOPS through Queue Depth Scale

Surprisingly, we see our MX100 array easily outperforming our M550 array. With enthusiast grade performance like this, it is hard to believe the MX100 is a value drive.

Write IOPS through Queue Depth Scale

Again, our MX100 array handily outperforms our M550 array. We give the win to Seagate's 600 Pro, which is a write juggernaut, but the MX100 is able to outperform its intended target, Samsung's 840 EVO.

CrystalDiskMark

Version and / or Patch Used: 3.0 Technical Preview

CrystalDiskMark is disk benchmark software that allows us to accurately benchmark 4K transfers, both on their own and at higher queue depths.

Note: CrystalDiskMark 3.0 Technical Preview was used for these tests since it offers the ability to measure native command queuing at queue depths of 4 and 32.

The numbers are excellent across the board; especially sequential speed. I think we are beginning to see that Crucial's 16nm flash can deliver enthusiast grade performance for an incredible bargain.

Only our Extreme II array has better sequential performance than our MX100 array. The 16nm flash appears to have a performance disadvantage at 4K QD:32 read; however, it's definitely the least important metric of any on our chart.

Our MX100 array delivers performance equivalent to that of our current RAID champion, the Intel 730 array. Seagate's 600 Pro wins this competition because it delivers massive performance at 4K QD:4, which none of our other arrays can touch.

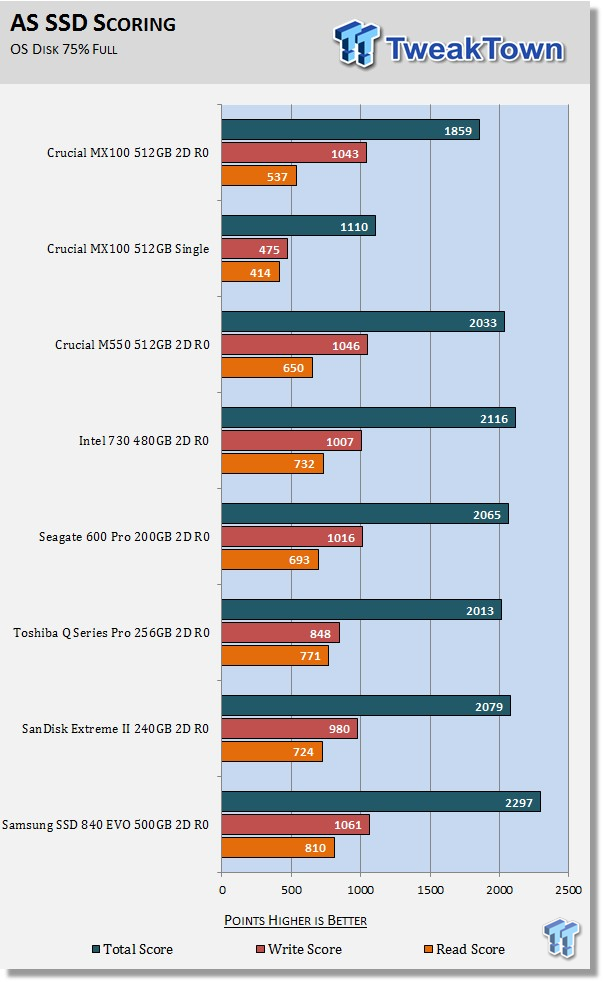

AS SSD

Version and / or Patch Used: 1.7.4739.38088

AS SSD determines the performance of Solid-State Drives (SSD). The tool contains four synthetic tests, as well as three practice tests. The synthetic tests are used to determine the sequential, and random read and write performance of the SSD.

AS SSD confirms what we saw with CDM. In 4K read performance at a very high QD, Crucial's 16nm flash shows a weakness in comparison to 20nm flash. Again, this is the least important metric of any on our chart, and you will see why as we move into real-world simulations.

Benchmarks (Trace Based OS Volume) - PCMark Vantage, PCMark 7 & PCMark 8

Light Usage Model

We are going to categorize these tests as indicative of a light workload. If you utilize your computer for light workloads like browsing the web, checking emails, light gaming, and office related tasks, then this category of results is most relevant for your needs.

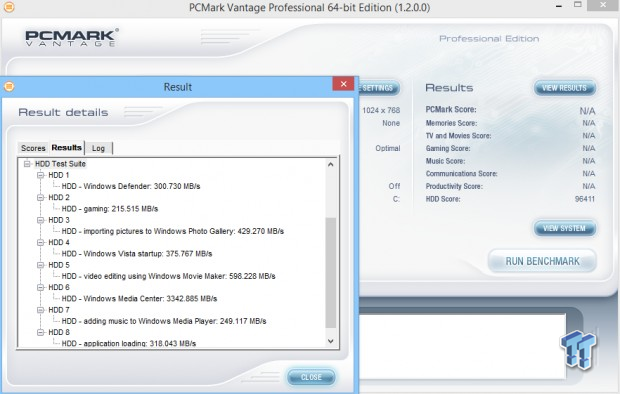

PCMark Vantage - Hard Disk Tests

Version and / or Patch Used: 1.2.0.0

We like PCMark Vantage because the recorded traces are played back without system stops. What we see is the raw performance of the drive. This allows us to see marked differences in scoring that other trace-based benchmarks do not exhibit. An example of a marked difference in scoring on the same drive would be empty vs. filled vs. steady state.

We run Vantage three ways. The first run is with the OS drive/array 75% full to simulate a lightly used OS volume filled with data to an amount we feel is common for most users. The second run is with the OS volume written into a "steady state" utilizing SNIA's guidelines (Rev 1.1). Steady state testing simulates a drive/array's performance similar to that of a drive/array that has been subjected to consumer workloads for extensive amounts of time. The third run is a Vantage HDD test with the test drive/array attached as an empty, lightly used secondary device.
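For those wondering what "steady state" means in measurable terms: as we understand SNIA's Performance Test Specification, a drive is considered settled when, over a window of test rounds, the tracked value stays within 20% of the window average and the best-fit line through it moves by no more than 10% of that average. The sketch below illustrates that kind of check; it is not the procedure used for this review, and the function name, default thresholds, and sample figures are our own assumptions:

```python
def is_steady_state(window, range_limit=0.20, slope_limit=0.10):
    """Return True if a measurement window (one value per test round) passes
    a range check and a slope check relative to the window average."""
    n = len(window)
    average = sum(window) / n

    # Range check: spread of the raw values versus the average.
    data_range = max(window) - min(window)

    # Slope check: least-squares line through (round index, value), then the
    # total rise or fall of that fitted line across the window.
    x_mean = (n - 1) / 2
    numerator = sum((x - x_mean) * (y - average) for x, y in enumerate(window))
    denominator = sum((x - x_mean) ** 2 for x in range(n))
    fit_excursion = abs(numerator / denominator) * (n - 1)

    return (data_range <= range_limit * average
            and fit_excursion <= slope_limit * average)

# Hypothetical bandwidth figures in MB/s, not measurements from this review.
settled = is_steady_state([100, 101, 99, 100, 100])
still_dropping = is_steady_state([200, 170, 140, 120, 100])
print(settled, still_dropping)
```

The first window has flattened out and passes; the second is still falling round after round, so the drive has not yet reached a steady state.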

OS Volume 75% Full - Lightly Used

OS Volume 75% Full - Steady State

Secondary Volume Empty - Lightly Used

As you can see, there's a big difference between an empty drive/array, one that's 75% full/used, and one that's in a steady state.

The important scores to pay attention to are "OS Volume Steady State" and "OS Volume 75% full." These two categories are most important because they are indicative of typical consumer usage states.

When a drive/array is in a steady state, it means garbage collection is running at the same time it's reading/writing. This is exactly why we focus on steady state performance. Our MX100 array is only slightly slower than our M550 array, delivering the fourth best steady state performance of any two drive array we have tested to date.

PCMark 7 - System Storage

Version and / or Patch Used: 1.4.00

We will look to the Raw System Storage scoring for RAID 0 evaluations because it's done without system stops, and therefore allows us to see significant scoring differences between drives/arrays.

OS Volume 75% Full - Lightly Used

Our MX100 array again shows itself to be a real-world performance powerhouse, even defeating our M550 array this time. Toshiba's Q Series Pro is the clear winner of this test.

PCMark 8 - Storage Bandwidth

Version and / or Patch Used: 1.2.157

We use the PCMark 8 Storage benchmark to test the performance of SSDs, HDDs, and hybrid drives with traces recorded from Adobe Creative Suite, Microsoft Office, and a selection of popular games. You can test the system drive, or any other recognized storage device, including local external drives. Unlike synthetic storage tests, the PCMark 8 Storage benchmark highlights real-world performance differences between storage devices.

OS Volume 75% Full - Lightly Used

IMFT flash based arrays dominate this testing. Crucial's MX100 is targeted to compete with Samsung's 840 EVO, and here we can see that our MX100 array has no problem soundly defeating our EVO array in this real-world simulation. 16nm flash is looking spectacular so far.

Benchmarks (Secondary Volume) - Disk Response & Transfer Rates

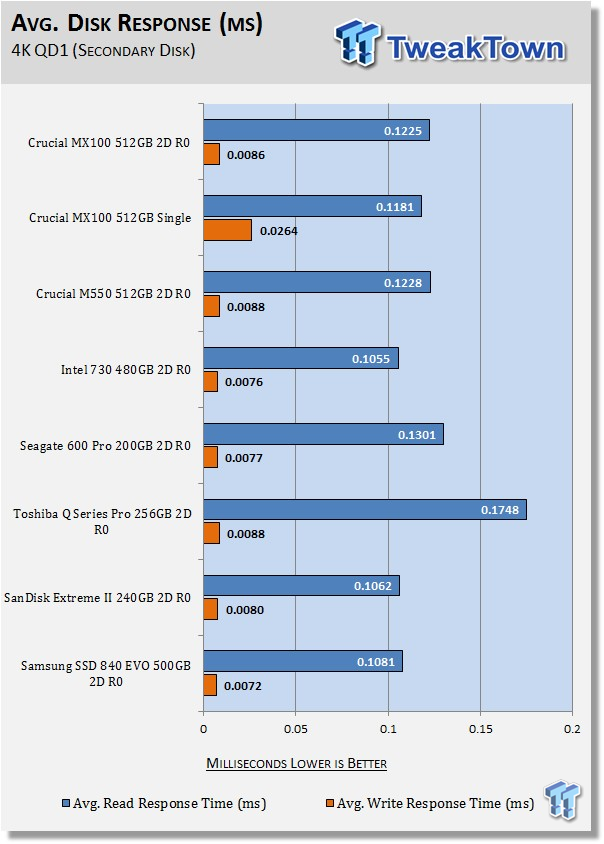

Iometer - Disk Response

Version and / or Patch Used: 1.1.0

We use Iometer to measure disk response times. Disk response times are measured at an industry accepted standard of 4k QD1 for both write and read. Each test is run twice for 30 seconds consecutively, with a five second ramp-up before each test. The drive/array is partitioned and attached as a secondary device for this testing.

Write Response

Read Response

Average Disk Response

Write response times benefit most from RAID 0 because of write caching. There is a slight latency increase in read response times for an array vs. a single drive. Response times are about average, but our MX100 array has slightly better response times than our M550 array, which is a pleasant surprise.

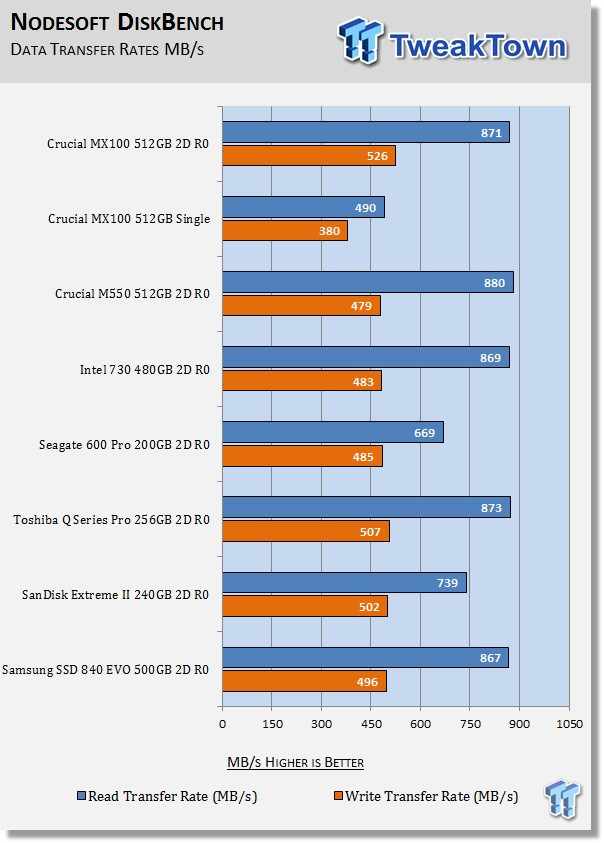

DiskBench - Directory Copy

Version and / or Patch Used: 2.6.2.0

We use DiskBench to time a 28.6GB block (9,882 files in 1,247 folders) of mostly incompressible random data as it's transferred from our OS array to our test drive/array. We then read from a 6GB zip file that's part of our 28.6GB data block to determine the test drive/array's read transfer rate. The system is restarted prior to the read test to clear any cached data, ensuring an accurate test result.

Write Transfer Rate

Read Transfer Rate

It's hard to believe that the MX100 is considered a value drive and costs less per GB than any other drive on the market today. This is bleeding edge enthusiast performance. Actually, it's hyper-class performance.

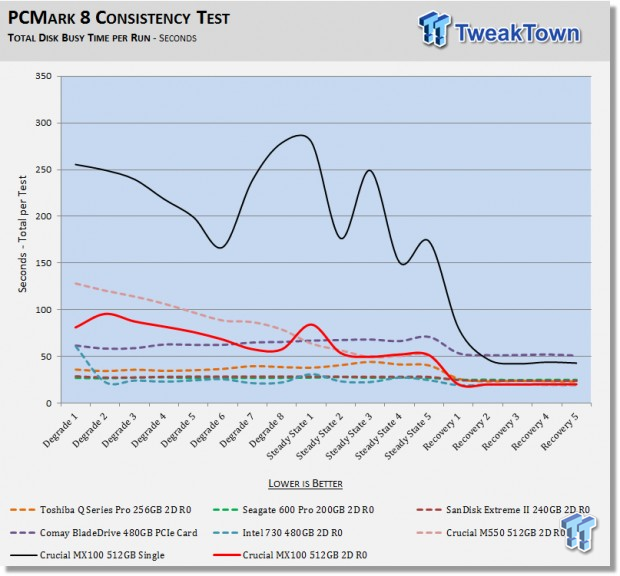

Benchmarks (Secondary Volume) - PCMark 8 Extended

Futuremark PCMark 8 Extended - Consistency Test

Heavy Usage Model

We consider PCMark 8's consistency test to be our heavy usage model test. This is the usage model most enthusiasts, heavy duty gamers, and professionals fall into. If you do a lot of gaming, audio/video processing, rendering, or have workloads of this nature, then this test will be most relevant to you.

PCMark 8 has built-in, command line executed storage testing. The PCMark 8 Consistency test measures the performance consistency and the degradation tendency of a storage system.

The Storage test workloads are repeated. Between each repetition, the storage system is bombarded with a usage that causes degraded drive performance. In the first part of the test, the cycle continues until a steady degraded level of performance has been reached (Steady State).

In the second part, the recovery of the system is tested by allowing the system to idle and measuring the performance with long intervals (TRIM).

The test reports the performance level at the start, the degraded steady state, and the recovered state, as well as the number of iterations required to reach the degraded state, and the recovered state.

We feel Futuremark's Consistency Test is the best test ever devised to show the true performance of solid state storage in a heavy usage scenario. This test takes an average of 13 to 17 hours to complete, and it writes somewhere between 450GB and 13,600GB of test data, depending on the drive(s) being tested. If you want to know what an SSD's performance is going to look like after a few months or years of heavy usage, this test will show you.

Here's a breakdown of Futuremark's Consistency Test:

Precondition phase:

1. Write to the drive sequentially through up to the reported capacity with random data.

2. Write the drive through a second time (to take care of overprovisioning).

Degradation phase:

1. Run writes of random size between 8*512 and 2048*512 bytes on random offsets for ten minutes.

2. Run performance test (one pass only).

3. Repeat steps one and two, eight times, and on each pass increase the duration of random writes by five minutes.

Steady state phase:

1. Run writes of random size between 8*512 and 2048*512 bytes on random offsets for 50 minutes.

2. Run performance test (one pass only).

3. Repeat steps one and two, five times.

Recovery phase:

1. Idle for five minutes.

2. Run performance test (one pass only).

3. Repeat steps one and two, five times.
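The "random size between 8*512 and 2048*512 bytes" wording works out to writes of 4KiB to 1MiB. The sketch below generates write requests of that shape without touching any drive. It assumes sizes are whole multiples of 512-byte sectors, offsets are sector-aligned, and both are drawn uniformly; Futuremark's actual generator may differ, and all names here are hypothetical:

```python
import random

SECTOR = 512
MIN_SECTORS, MAX_SECTORS = 8, 2048   # 8*512 = 4,096 B, 2048*512 = 1,048,576 B

def random_write_request(capacity_bytes, rng):
    """Pick an (offset, size) pair for one random write.
    This only describes the request; no I/O is issued."""
    size = rng.randint(MIN_SECTORS, MAX_SECTORS) * SECTOR  # inclusive bounds
    # Assumption: the write must fit entirely within the device.
    max_start_sector = (capacity_bytes - size) // SECTOR
    offset = rng.randint(0, max_start_sector) * SECTOR
    return offset, size

CAPACITY = 512 * 10**9               # assumption: 512GB in decimal bytes
rng = random.Random(0)               # seeded so the run is repeatable
requests = [random_write_request(CAPACITY, rng) for _ in range(1000)]
print(min(size for _, size in requests), max(size for _, size in requests))
```

A mix like this, with large and small writes scattered across the whole drive, is exactly what forces the controller's garbage collection to work hardest.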

Storage Bandwidth

PCMark 8's Consistency test provides a ton of data output that we can use to judge a drive/array's performance.

We consider steady state bandwidth (the blue bar) our test that carries the most weight in ranking a drive/array's performance. The reason we consider steady state performance more important than TRIM is that when you are running a heavy-duty workload, TRIM will not be occurring while that workload is being executed. TRIM performance (the orange and red bars) is what we consider the second most important aspect to consider when ranking a drive/array's performance. Trace based consistency testing is where true high performing SSDs are separated from the rest of the pack.

Just as we saw with Crucial's more expensive M550, the MX100 512GB shifts from mediocre in this test as a single drive to beast mode in RAID. This is what we love about IMFT flash; it scales incredibly well. This is the first we have seen of 16nm IMFT flash, and as this test, the most brutal of all tests, demonstrates, 16nm Micron flash has not lost any performance to the die shrink relative to 20nm Micron flash.

Although the Toshiba flash based arrays on our chart all edge out our MX100 array, I anticipate that if we had a three drive array of MX100 512GB SSDs, the outcome would be quite different. I anticipate this because I believe the MX100 will keep scaling better than the Toshiba based drives, with the possible exception of Toshiba's Q Series Pro. If I can talk Chris Ramseyer out of his MX100 512GB, then we will have a look for ourselves.

Notice how all of our IMFT flash based arrays have far better TRIM performance than the Toshiba flash based arrays? This has turned out to be a very good indicator as to how well our arrays will potentially scale as we add drives to the array.

We chart our test subject's storage bandwidth as reported at each of the test's 18 trace iterations. This gives us a good visual perspective of how our test subjects perform as testing progresses.

Total Access Time (Latency)

Access time is the time delay, or latency, between a request to an electronic system, and the action being completed or the requested data returned. Essentially, access time is how long it takes to get data back from the disk. We chart the total time the disk is accessed as reported at each of the test's 18 trace iterations.

This is a great visual representation of what RAID brings to the table. A quick look at Steady State One shows that when you RAID two MX100s, latency under load drops by as much as 5.5x in comparison to a single MX100. Surprisingly, we find our MX100 array outperforms our M550 array, delivering lower latency for almost the entire test.

Disk Busy Time

Disk Busy Time is how long the disk is busy working. We chart the total time the disk is working as reported at each of the test's 18 trace iterations.

When latency is low, disk busy time is low as well. In a steady state, an MX100 array spends up to 3.6x less time working than a single MX100 with the exact same workload.

Data Written

We measure the total amount of random data that the drives/arrays are capable of writing during the degradation phases of the consistency test. The total combined time that degradation data is written to the drive/array is 470 minutes. This can be very telling. The better the drive/array can process a continuous stream of random data, the more data will be written.
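The 470-minute figure follows directly from the test's write schedule: eight degradation passes that start at ten minutes and grow by five minutes each, plus five steady state passes of 50 minutes. A quick check of the arithmetic (variable names are ours):

```python
# Degradation phase: 8 passes of 10, 15, 20, ... 45 minutes of random writes.
degradation_passes = [10 + 5 * i for i in range(8)]

# Steady state phase: 5 passes of 50 minutes each.
steady_state_passes = [50] * 5

total_minutes = sum(degradation_passes) + sum(steady_state_passes)
print(sum(degradation_passes), sum(steady_state_passes), total_minutes)
# prints: 220 250 470
```

That is just under eight hours of nothing but random writes, before counting the preconditioning passes or the performance runs in between.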

Our MX100 array has lower latency than our M550 array, and as such, can write more random data in the same span of time. Drives/arrays like Seagate's awesome 600 Pro with its enterprise pedigree have the ability to write many times more data than consumer based drives/arrays in this test. This is directly attributable to lower latency.

Gratuitous Benchmarking

This is where we show you what our array's performance looks like when powered by the fastest operating system for SATA based storage ever made, Windows Server 2008. This is the exact same hardware, just an OS change.

4k QD1 Write. 168,000 IOPS is just smoking fast!

You can't get performance like this from Windows 8, or 8.1. You can get very close with Windows 7, but nothing performs quite as well as Server 2008 when it comes to SATA based storage. 4K write performance is vastly superior on Server 2008 and Windows 7 in comparison to Windows 8 or 8.1.
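To put 168,000 IOPS in perspective, it can be converted into time per I/O and into bandwidth. A minimal sketch, assuming "4k" means 4,096 bytes, decimal megabytes, and that at QD1 only one I/O is in flight so the average time per completed I/O is simply the inverse of IOPS; the function names are ours:

```python
def qd1_time_per_io_us(iops):
    """Average microseconds per completed I/O with one I/O in flight."""
    return 1_000_000 / iops

def throughput_mb_s(iops, block_bytes=4096):
    """Bandwidth in decimal MB/s for a given IOPS and block size."""
    return iops * block_bytes / 1_000_000

time_per_io = qd1_time_per_io_us(168_000)
bandwidth = throughput_mb_s(168_000)
print(f"{time_per_io:.2f} us per I/O, {bandwidth:.1f} MB/s")
```

That comes to just under 6 microseconds per write and roughly 688MB/s from 4K transfers alone. Numbers that low reflect writes being acknowledged by the write cache rather than waiting on the flash itself.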

Final Thoughts

Solid state storage is the most important performance component found in a modern system today. Without it, you do not even have a performance system.

Micron designed their 16nm flash primarily to compete with Samsung's TLC flash. Based on the monster performance we were able to get from our MX100 512GB based array, I would say Crucial/Micron came up with a winner.

I am still trying to figure out how Micron was able to shrink NAND lithography by that much with no apparent loss of performance. Throughout most of our testing, our MX100 array was not only able to keep pace with our M550 array, it outperformed it.

Let's talk for a minute about what you get for 43 cents per gigabyte when you purchase an MX100 512GB SSD. Everything on the drive is premium, including MLC flash in BGA packages (the good stuff), a full eight channel controller (Marvell's latest and greatest), and onboard host power-loss protection. You get features like DevSleep, and the MX100 supports AES 256-bit hardware encryption, which meets TCG Opal 2.0 and IEEE-1667 standards. Microsoft's eDrive is also supported.

When we look at how Crucial's MX100 512GB compares with Samsung's 840 EVO, I have to give the edge to Crucial. They both carry the same warranty, but MLC NAND is inherently superior to TLC. The MX100 is cheaper; in fact, it's the best value per GB SSD there is at the moment, and it delivers enthusiast grade performance, just like the EVO. When comparing the two drives (512GB EVO and 512GB MX100), strictly based on performance, they are very evenly matched.

Normally, I don't give a crap about cost, and it doesn't factor into what makes a particular drive my favorite flavor of the week, but this time it does. Bleeding edge, hyper-class performance at 43 cents per gigabyte is intoxicating, and is precisely the reason Crucial's MX100 512GB SSD is my new favorite SSD (at least until the next RAID Report).

RAIDing two or more drives together provides you with storage that takes performance to the next level, and is something I recommend you try. Think of it as the SLI of storage. Once you go RAID, there's no going back!

