Artificial Intelligence News - Page 1

Sony sends strict warning letter to 700+ companies over AI training

Sony has officially staked out its position on AI models using the company's IP for training, or at least Sony Music has.

According to a letter seen by the Financial Times, Sony Music has warned more than 700 companies developing AI products, along with music streaming platforms, that using any of Sony's music is strictly prohibited, and that the company, along with all of its signed artists, opts out of any text and data mining. It should be noted that the letter states Sony has opted out of data mining by individuals and companies that use its content for commercial purposes, in other words, to make money.

Companies that have already received the letter include OpenAI, Microsoft, Google, and many more. Additionally, music streaming services such as Apple Music and Spotify have received a different letter recommending they implement measures that will align with Sony's new policy. This move by Sony Music is the latest from the Sony arm that has signed artists such as Harry Styles, Adele, and Beyoncé to protect its IP from being used to train an AI model that can then produce its own unique music.

Google unveils monster processing unit designed to power next-gen AI models

Google recently announced its sixth-generation tensor processing unit (TPU), called Trillium, at its I/O event, and according to the company, the new processor is designed for powerful next-generation AI models.

The company initially created the TPUs for its own internal products such as Gmail, Google Maps and YouTube, which leverage machine learning workloads. Now, Google has created six generations of this technology and according to the company Trillium will arrive with a 4.7x increase in peak compute performance, along with double the high bandwidth memory capacity, compared to its TPU v5e design.

More specifically, Google's claim of a 4.7x increase in peak compute performance means the new TPU is capable of pushing 926 teraFLOPS at BF16 and 1,847 teraFLOPS at INT8, making it about twice as fast as the TPU v5p accelerators that Google announced less than six months ago. How did Google do this? The company said the performance increase can be traced back to its decision to enlarge the TPU's matrix multiply units (MXUs) and boost the clock speed.
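
As a rough sanity check on those figures, the short Python sketch below divides the quoted Trillium throughput by the claimed 4.7x factor to see what TPU v5e baseline the numbers imply. The function name `implied_baseline` is hypothetical, and the results are only what the article's figures imply, not an official Google specification.

```python
def implied_baseline(new_tflops: float, speedup: float) -> float:
    """Return the baseline throughput implied by a new figure and a speedup factor."""
    return new_tflops / speedup


SPEEDUP = 4.7  # Google's claimed peak-compute gain over TPU v5e
TRILLIUM = {"BF16": 926.0, "INT8": 1847.0}  # teraFLOPS, as quoted in the article

for precision, tflops in TRILLIUM.items():
    baseline = implied_baseline(tflops, SPEEDUP)
    print(f"{precision}: implied TPU v5e peak of about {baseline:.0f} teraFLOPS")

# The INT8 figure is roughly double the BF16 figure.
print(f"INT8 / BF16 ratio: {TRILLIUM['INT8'] / TRILLIUM['BF16']:.2f}")
```

Under these assumptions the implied TPU v5e peak works out to roughly 197 teraFLOPS at BF16.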

NVIDIA to help Japan's sovereign AI efforts with generative AI infrastructure build-out

NVIDIA will play a central role in developing Japan's generative AI infrastructure, as the country seeks to capitalize on the technology's economic potential, and further develop its workforce.

The announcement from NVIDIA comes after Japan's Ministry of Economy, Trade and Industry announced plans for the future of AI in the country. NVIDIA is collaborating with key digital infrastructure providers, including GMO Internet Group, Highreso, KDDI Corporation, RUTILEA, SAKURA internet Inc. and SoftBank Corp., which the ministry has certified to spearhead the development of cloud infrastructure crucial for AI applications.

The ministry announced plans to provide $740 million in funding to help six local firms in this new AI initiative, making Japan the latest nation to embrace the concept of sovereign AI by fortifying Japanese startups, enterprises, and research with advanced AI technologies.

SK hynix unveils new tech for 'dream memory chip' to store data, perform calculations for AI

SK hynix unveiled a new technology that will be used to create a "dream memory chip" that is capable of storing data and performing calculations for AI.

The South Korean memory giant unveiled its new technology during the International Memory Workshop (IMW 2024) held from May 12-15 at the Walkerhill Hotel in the Gwangjin district of Seoul, South Korea. The new technology enhances the accuracy of Multiply Accumulate (MAC) operations in Analog Computing in Memory (A-CIM) semiconductors using oxygen diffusion barrier technology.

MAC operations are critical for the high-speed multiplication and accumulation processes required in artificial intelligence (AI) inference and learning. SK hynix's recent development is a significant step for the company in the competitive race to create a "dream memory semiconductor" that can both store information and perform calculations, surpassing the traditional limitations of memory-only semiconductors.
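
For readers unfamiliar with the term, a multiply-accumulate step multiplies two values and adds the product to a running total. The Python sketch below shows that pattern as an ordinary software dot product. It is purely illustrative: the function name `mac_dot` and the sample values are hypothetical, and it says nothing about how SK hynix's analog in-memory hardware performs the operation.

```python
def mac_dot(weights: list[float], inputs: list[float]) -> float:
    """Compute a dot product as a chain of multiply-accumulate (MAC) steps."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    acc = 0.0
    for w, x in zip(weights, inputs):
        acc += w * x  # one MAC: multiply, then add into the accumulator
    return acc


# Sample values chosen only for illustration: 2.0 - 3.0 + 2.0 = 1.0
print(mac_dot([0.5, -1.0, 2.0], [4.0, 3.0, 1.0]))
```

A neural-network layer repeats this loop for every output value, which is why MAC throughput and accuracy matter so much for AI workloads.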

OpenAI unveils new AI model that's a step towards natural human-computer interaction

OpenAI has unveiled a new AI model that is designed to analyze audio, visual, and text input, and provide answers based on what it "sees/hears".

The company behind the immensely popular AI tool ChatGPT announced its latest flagship model called GPT-4o (omni), which OpenAI describes as being a step towards a "much more natural human-computer interaction". The new AI model is expected to match the performance of GPT-4 Turbo at processing text and code input, while simultaneously being faster and 50% cheaper with its API, making it a more affordable choice for third-party app integration.

More specifically, users will be able to submit a query by voice about what the AI agent is able to "see" through the device's camera. For example, OpenAI demonstrated this with two people who verbally asked the AI "what game can we play". The AI used the smartphone camera to "see" the two people sitting in front of it and suggested playing rock, paper, scissors. The quick demonstration showed the AI model interacting fluently with the individuals while remaining extremely responsive to interruptions and new commands.

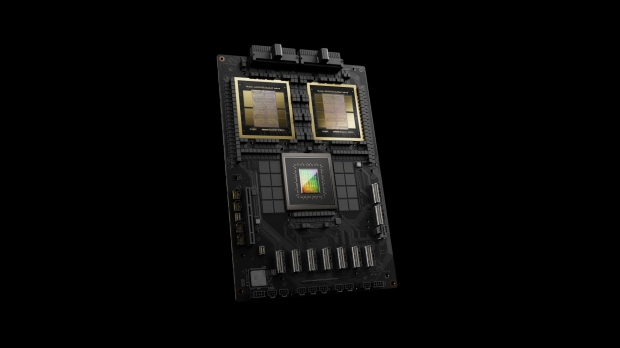

NVIDIA's new GB200 Superchip costs up to $70,000: full B200 NVL72 AI server costs $3 million

NVIDIA's new Blackwell GPU architecture is going to make the company a lot of money. We already know the B200 AI GPUs will cost $30,000 to $40,000 each (CEO Jensen Huang said so just after the GPUs were announced), but the GB200 Superchip (CPU + GPU) will cost up to $70,000.

According to a post on X by a senior writer at Barron's, the new NVIDIA GB200 Superchip will cost between $60,000 and $70,000. We already know that an NVIDIA DGX NVL72 AI server cabinet, which is filled with 72 x B200 GPUs and 36 x Grace CPUs, will cost $3 million.

The new NVIDIA DGX NVL72 is the AI server with the most computing power, and thus, the highest unit price. Inside, the DGX NVL72 features 72 built-in Blackwell-based B200 AI GPUs and 36 Grace CPUs (18 servers in total, each with dual Grace CPUs and 4 x B200 AI GPUs) with 9 switches. The entire cabinet is designed by NVIDIA in-house and cannot be modified; it is 100% made, tested, and provided by NVIDIA.
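
The cabinet totals can be cross-checked with a little arithmetic. The Python sketch below spreads the stated 72 GPUs and 36 CPUs across the 18 servers and compares the quoted Superchip price range with the $3 million cabinet price. It assumes one GB200 Superchip pairs one Grace CPU with two B200 GPUs, which is consistent with the 36 CPU and 72 GPU totals but is not stated in the text; the variable names are illustrative.

```python
SERVERS = 18
TOTAL_GPUS = 72
TOTAL_CPUS = 36
CABINET_PRICE = 3_000_000           # USD, as reported
SUPERCHIP_PRICE = (60_000, 70_000)  # USD range, as reported
GPUS_PER_SUPERCHIP = 2              # assumption: 1 Grace CPU + 2 B200 GPUs

# Per-server counts implied by the cabinet totals.
gpus_per_server = TOTAL_GPUS // SERVERS
cpus_per_server = TOTAL_CPUS // SERVERS

# Number of Superchips and their combined price range.
superchips = TOTAL_GPUS // GPUS_PER_SUPERCHIP
low, high = (superchips * price for price in SUPERCHIP_PRICE)

print(f"Per server: {cpus_per_server} Grace CPUs, {gpus_per_server} B200 GPUs")
print(f"{superchips} Superchips: ${low:,} to ${high:,}")
print(f"Share of cabinet price: {low / CABINET_PRICE:.0%} to {high / CABINET_PRICE:.0%}")
```

On these assumptions, the Superchips alone would account for roughly $2.16 million to $2.52 million of the cabinet price, with the rest covering switches, networking, cooling, and integration.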

Samsung reportedly FAILS to pass HBM3E memory qualification tests by NVIDIA for its AI GPUs

Samsung has reportedly failed to pass specific stages of HBM3E memory verification standards from NVIDIA, which is surely going to cause a headache for the South Korean memory giant.

SK hynix has been pumping out HBM3 and now HBM3E while preparing not just next-generation HBM4 memory but even HBM4E memory. Meanwhile, its South Korean HBM rival Samsung can't get its act together with HBM3E memory for NVIDIA, according to Korean news outlet AlphaBiz.

Samsung has reportedly failed qualification tests for its 8-layer HBM3E memory, a serious setback considering how bleeding-edge HBM is and how urgently Samsung has been working to get its HBM business flourishing. The roadblock comes as NVIDIA prepares its beefed-up Hopper H200 AI GPU and its next-generation Blackwell B100, B200, and GB200 AI GPUs, all of which use HBM3E memory.

Arm plans to develop an AI chip division, will have AI chips released in 2025

Arm is developing its own artificial intelligence (AI) chips, with the first AI chips made by the company expected to launch in 2025.

The UK-based company will spin up an AI chip division that will deliver a prototype AI chip by spring 2025, according to a report from Reuters. Mass production of Arm's new AI chip will be handled by contract manufacturers such as TSMC and is expected to start in autumn 2025.

Arm Holdings is a SoftBank Group subsidiary (SoftBank owns a 90% share in Arm), and SoftBank CEO Masayoshi Son is preparing a huge $64 billion strategy to transform SoftBank into a powerhouse AI company. Negotiations are reportedly already happening with TSMC and others to secure production capacity.

SK hynix says its ultra-next-gen HBM4E is coming in 2026, ready for the world of next-gen AI GPUs

SK hynix has announced it plans to complete the development of its next-gen HBM4E memory by as early as 2026, preparing for the next-gen AI GPUs of the future.

SK hynix's head of the HBM advanced technology team, Kim Gwi-wook, outlined the direction of next-generation HBM development this week at the International Memory Workshop (IMW 2024). HBM was developed by SK hynix in 2014, followed by HBM2 (2nd generation) in 2018, HBM2E (3rd generation) in 2020, HBM3 (4th generation) in 2022, and HBM3E (5th generation) this year.

Recent HBM generations have arrived on a two-year cadence. With HBM3E launched this year, SK hynix expects HBM4 (6th generation) to drop in 2025 and HBM4E (7th generation) in 2026. That is faster than the usual two years, because SK hynix predicts that HBM performance advancements will come more quickly than in previous generations.
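
The shift in cadence is easy to see by computing the gap between each generation. The Python sketch below uses the years given above and assumes "this year" means 2024, based on the IMW 2024 reference; the 2025 and 2026 entries are SK hynix's projections, not shipped products.

```python
# Launch years as listed in the article; 2024 for HBM3E is an assumption
# drawn from the IMW 2024 reference, and 2025/2026 are projections.
timeline = {
    "HBM": 2014,
    "HBM2": 2018,
    "HBM2E": 2020,
    "HBM3": 2022,
    "HBM3E": 2024,
    "HBM4": 2025,
    "HBM4E": 2026,
}

names = list(timeline)
# Years elapsed between each generation and the one before it.
gaps = [timeline[new] - timeline[old] for old, new in zip(names, names[1:])]

for (old, new), gap in zip(zip(names, names[1:]), gaps):
    print(f"{old} -> {new}: {gap} year(s)")
```

By these dates, the gap between generations falls from two years to one starting with HBM4.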

This portable AI supercomputer fits in a carry-on suitcase: 4 x GPUs, 246TB storage, 2500W PSU

GigaIO and SourceCode have just unveiled Gryf, an ultra-portable AI supercomputer-class system that weighs less than 55 pounds, and fits inside of a TSA-friendly carry-on suitcase. Impressive.

Gryf can handle data collection and processing on a scale that would usually see the data sent off-site, which means super-fast processing and analysis can happen on location. Gryf supports disaggregating and reaggregating its GPUs, so owners can customize the system's hardware configuration in the field and on the fly.

You can create the optimal hardware configuration for one assigned workload, and then give the next workload its own optimized hardware configuration. Each Gryf has multiple slots filled with compute, storage, accelerator, and network sleds suited to their respective workloads. There are six sled slots in total, where you can insert and remove the modules as required.