Artificial Intelligence News - Page 2

Amazon allegedly broke its own copyright rules to keep itself in the AI race

A newly filed lawsuit against Amazon alleges the company broke its own copyright policy to avoid falling behind in the global generative artificial intelligence race that numerous big tech companies are running.

The lawsuit was filed last week in a Los Angeles state court by Dr Viviane Ghaderi, an AI researcher who says she worked in Amazon's Alexa and Large Language Model (LLM) departments. Ghaderi claims she was promoted several times across both departments but was demoted and eventually fired after returning from maternity leave.

Ghaderi has made numerous allegations against Amazon, including discrimination, sexism, retaliation, and wrongful termination. More specifically, Ghaderi says that when she returned to work, she was assigned to an LLM project (LLMs being the underlying technology powering impressive tools such as OpenAI's ChatGPT), and her role was to flag instances of the LLM violating Amazon's own copyright policy.

Google releases AI that can predict future natural catastrophes

Google is set to shake up the weather prediction industry with the release of SEEDS, or the Scalable Ensemble Envelope Diffusion Sampler AI model.

The new generative AI model is designed to provide accurate weather predictions far more cheaply and quickly than traditional weather prediction tools, particularly for extreme weather events such as hurricanes or heat waves, which can have a devastating impact on regions. So, how does it work? Predicting the weather is inherently difficult because of the multitude of variables at play. Current forecasting is good enough for conditions such as local temperature, but it gets progressively harder the further into the future the predictions reach.

Predicting an extreme weather event is harder still, since it depends on all the variables in a typical forecast plus the random factors that can tip conditions into an extreme. The accuracy of such predictions is currently very low, with Google explaining that reliably estimating the odds of an event with a 1% likelihood of occurrence requires an ensemble of around 10,000 weather predictions.
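Google's 10,000 figure is consistent with basic sampling statistics. The sketch below is a simplified illustration, not Google's method: it assumes each ensemble member is an independent yes/no draw of whether the event happens, and treats roughly 10% relative error as the accuracy target (our assumption). Under that model, the relative standard error of an estimated probability p from N members is sqrt((1 - p) / (p * N)).

```python
import math
import random

# Why rare events need large ensembles: a minimal Monte Carlo sketch.
# Assumption (ours, not Google's): each ensemble member is an independent draw
# in which the extreme event either occurs or not, i.e. a Bernoulli(p) trial.


def relative_std_error(p: float, n: int) -> float:
    """Relative standard error of the estimate k/n of a Bernoulli(p) probability."""
    return math.sqrt((1 - p) / (p * n))


def members_needed(p: float, target_rel_error: float) -> float:
    """Ensemble size at which the relative standard error equals the target."""
    return (1 - p) / (p * target_rel_error**2)


def simulate_estimates(p: float, n: int, trials: int, seed: int = 0) -> list[float]:
    """Estimate p from `trials` independent ensembles of `n` members each."""
    rng = random.Random(seed)
    return [sum(rng.random() < p for _ in range(n)) / n for _ in range(trials)]


if __name__ == "__main__":
    p = 0.01  # a 1-in-100 event
    for n in (100, 1_000, 10_000):
        print(f"n={n:>6}: relative error ~ {relative_std_error(p, n):.1%}")
    print(f"members for ~10% error: {members_needed(p, 0.10):,.0f}")
    estimates = simulate_estimates(p, 10_000, trials=20)
    print(f"simulated estimates of p: {min(estimates):.4f} to {max(estimates):.4f}")
```

Under this simplified model, the relative error for a 1% event is about 99.5% with 100 members, 31.5% with 1,000, and 9.9% with 10,000, so reaching roughly 10% takes about 9,900 members, in line with the order of magnitude Google quotes.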


LG's kitchen of the future includes a Gourmet AI oven that will take care of the cooking

Last week, LG was in Milan, Italy, showcasing its latest built-in kitchen appliances, including a Signature Kitchen Suite with a built-in oven, a 'free zone induction hob,' and a downdraft hood. Now, you might be wondering why we're suddenly bringing you news about a new all-in-one oven doodad: it's powered by AI.

LG calls it Gourmet AI. The oven can identify what is being cooked through video recognition and automatically select a suitable cooking mode from 130 recipes. Gourmet AI also keeps tabs on the cooking process, watching how a pizza or steak is 'browning,' and then alerts users via LG's ThinQ app when their food is done.

There's also real-time video monitoring so you can watch those cookies bake, plus time-lapse recordings, which is a pretty cool feature for an oven.

AI discovers famous painting wasn't entirely painted by the credited artist

Humans are only so good at detecting details in art, which is why researchers decided to train an AI algorithm to detect what humans can only suspect.

A team of researchers from the UK and US created a custom algorithm using a pre-trained architecture called ResNet50, which was developed by Microsoft. The team used transfer learning, a common machine learning technique, to adapt the algorithm, feeding it authenticated paintings by the famous artist Raphael. The goal was to teach the AI to identify, with a high degree of accuracy, brushstrokes created by Raphael's own hand, as well as the faces of the people within his paintings.

One Raphael painting in particular has long been a point of debate among scholars. The Madonna della Rosa, or Madonna of the Rose, was painted on canvas between 1518 and 1520. For many years, scholars have debated whether the painting is entirely Raphael's work or whether another hand was involved, specifically in the face of St Joseph. Some scholars have argued that St Joseph's face isn't as meticulously crafted as the rest of Raphael's work, leading them to believe it was painted by someone else.

NVIDIA AI GPUs trained Meta's new Llama 3 model, now optimized for the cloud, edge, and RTX PCs

NVIDIA has just announced optimizations across all of its platforms to accelerate Meta Llama 3, Meta's latest-generation large language model (LLM).

Combined with NVIDIA accelerated computing, the new Llama 3 model gives developers, researchers, and businesses a foundation to innovate across a wide range of applications. Meta engineers trained Llama 3 on a computing cluster featuring 24,576 NVIDIA H100 AI GPUs linked through the NVIDIA Quantum-2 InfiniBand network. With support from NVIDIA, Meta tuned its network, software, and model architectures for its flagship Llama 3 LLM.

To further advance state-of-the-art generative AI, Meta recently described plans to scale its AI GPU infrastructure to an astonishing 350,000 NVIDIA H100 AI GPUs. That's a lot of AI computing power, a ton of silicon, probably a city's worth of power, and an incredible sum of money spent by Meta on NVIDIA's AI GPUs.
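For a rough sense of scale on the power claim, here is a back-of-the-envelope sketch. It assumes every GPU is an H100 SXM drawing its 700 W maximum TDP around the clock and ignores CPUs, networking, and cooling, so real facility demand would be higher. None of these assumptions come from Meta.

```python
# Back-of-the-envelope estimate of the GPU power draw for Meta's planned fleet.
# Assumptions (not from the article): every GPU is an H100 SXM running at its
# 700 W maximum TDP around the clock; CPUs, networking, and cooling are ignored.

GPU_COUNT = 350_000        # planned H100 count, per the article
TDP_WATTS = 700            # assumed per-GPU power (H100 SXM maximum TDP)
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def fleet_power_mw(gpus: int, watts_per_gpu: float) -> float:
    """Total GPU power in megawatts."""
    return gpus * watts_per_gpu / 1_000_000


def annual_energy_twh(power_mw: float, hours: int = HOURS_PER_YEAR) -> float:
    """Energy over a year of continuous operation, in terawatt-hours."""
    return power_mw * hours / 1_000_000


if __name__ == "__main__":
    power = fleet_power_mw(GPU_COUNT, TDP_WATTS)
    energy = annual_energy_twh(power)
    print(f"GPU power: {power:.0f} MW")
    print(f"Annual energy: {energy:.2f} TWh")
```

Under those assumptions, the GPUs alone would draw about 245 MW, or roughly 2.15 TWh over a year of continuous operation.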

Meta launches early versions of Llama 3 to fight with OpenAI and Google in the AI battle

Meta has a nice surprise today: its latest large language model (LLM), Llama 3. The company explains its new Llama 3 8B contains 8 billion parameters, while its Llama 3 70B features 70 billion parameters.

Meta promises a gigantic increase in performance over the previous Llama 2 7B and Llama 2 70B models, with the company claiming that Llama 3 8B and Llama 3 70B are among the best-performing generative AI models available today. Both were trained on two custom-built 24,000-GPU clusters.

The company trained Llama 3 on over 15 trillion tokens, all collected from "publicly available sources." Meta says this training dataset is 7x larger than the one used for Llama 2 and includes 4x more code.

AI startup: AMD Instinct MI300X is 'far superior' option to NVIDIA Hopper H100 AI GPU

AI startup TensorWave is one of the first with a publicly deployed setup powered by AMD Instinct MI300X AI accelerators, and its CEO says they're a far better option than NVIDIA's dominant Hopper H100 AI GPU.

TensorWave has started racking AI systems powered by AMD's just-released Instinct MI300X AI accelerator, which it plans to lease at a fraction of the cost of NVIDIA's Hopper H100 AI GPU. TensorWave plans to have 20,000 of AMD's new Instinct MI300X AI accelerators deployed across two facilities before the end of the year, and to bring liquid-cooled systems online in 2025.

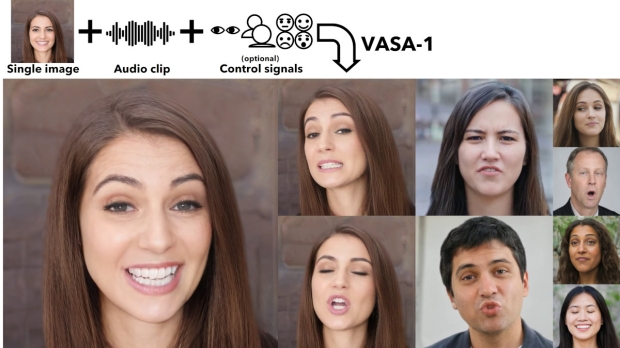

Meet Microsoft's VASA-1: AI can now take a photo and turn it into a 'talking head' video clip

A fresh jaw-dropping AI initiative, this one from Microsoft, works like this: give the AI a photo of someone and an audio clip of their voice, and it'll mock up a video clip of that person chatting away.

So, from just an image and a minute-long chunk of audio of the person's voice (which is what Microsoft used to create the example videos it shares), you get fully realized talking faces, complete with lifelike facial expressions, head and eye movements, full lip-syncing, and so on.

In short, it has everything needed to look like a totally realistic video of that person espousing their thoughts on any given topic, all generated in real time by the AI.

NVIDIA and Foxconn expect results this year for AI factories, smart manufacturing, AI smart EVs

Foxconn and NVIDIA are teaming up to build the future of AI, with Foxconn chairman Liu Yangwei saying yesterday that the results of what the companies have been working on will be visible later this year.

The two companies have teamed up on three major platforms: AI factories, AI smart manufacturing, and AI smart electric vehicles. Foxconn is also expected to ramp up its work with NVIDIA on building next-gen Blackwell GB200 AI servers, as announced at the 2024 Taipei International Automotive Electronics Show.

The Foxconn executive flew to the United States to personally attend NVIDIA's recent GTC (GPU Technology Conference), where he said that the two companies would be working on AI factories and that "everyone will soon see some construction on NVIDIA's AI factories".

NVIDIA's new Blackwell AI GPUs drive TSMC to increase CoWoS output by over 150% in 2024

NVIDIA's new wave of Blackwell AI GPUs will boost demand for TSMC's advanced CoWoS packaging capacity by over 150% in 2024.

According to a new report from TrendForce, the next-gen Blackwell AI GPU platform, which includes the B200 and B100 AI GPUs as well as the GB200 (which also features NVIDIA's in-house Arm-based Grace CPU), uses CoWoS packaging from TSMC. Projections suggest shipments of high-end B200 AI GPUs will reach the millions in 2025, making up close to 50% of NVIDIA's high-end GPU market.

NVIDIA will launch its new GB200 and B200 in the second half of 2024. Upstream wafer packaging will need to adopt the more complex, high-precision CoWoS-L technology, which will make validation and testing take longer. On top of that, more time is needed to optimize the B-series for AI server systems in areas such as network communication and cooling performance.