NVIDIA has been making cut-down AI GPUs for the Chinese market for months now to work around US export restrictions, but it appears modified Ampere A100 AI GPUs are also making the rounds there.

NVIDIA A100 7936SP 96GB + 40GB AI GPUs (source: Jiacheng Liu)

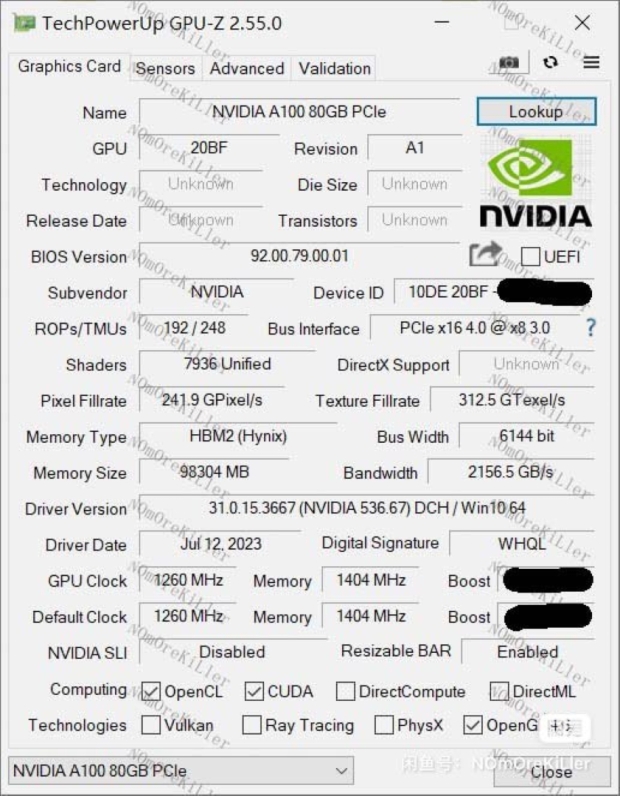

A new NVIDIA A100 7936SP (not an official name; it comes from the card's CUDA core count) uses the same GA100 GPU as the regular A100, but with 15% more CUDA cores. The regular A100 has 108 SMs (Streaming Multiprocessors) enabled, while the A100 7936SP enables 124 of the full GA100 die's 128 SMs.

The top A100 7936SP model also has more HBM2e memory: 96GB, compared with 80GB on the standard A100 AI GPU. With its 16 additional SMs, the A100 7936SP has about 15% more SMs, CUDA cores, and Tensor Cores, which should translate to as much as 15% more AI performance at the same clocks.
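As a quick sanity check on the 15% figure, here is a minimal Python sketch that derives unit counts from SM counts. It assumes the GA100 per-SM layout described in NVIDIA's A100 whitepaper (64 FP32 CUDA cores and 4 Tensor Cores per SM); the SM counts are worked backwards from the 6912 and 7936 CUDA core figures quoted in this article, and the helper names are purely illustrative.

```python
# Assumed GA100 per-SM resources (NVIDIA A100 whitepaper):
# 64 FP32 CUDA cores and 4 third-generation Tensor Cores per SM.
CUDA_CORES_PER_SM = 64
TENSOR_CORES_PER_SM = 4

# SM counts derived from the quoted CUDA core counts (cores / 64).
STANDARD_A100_SMS = 108  # 6912 CUDA cores
A100_7936SP_SMS = 124    # 7936 CUDA cores
FULL_GA100_SMS = 128     # complete GA100 die


def unit_counts(sm_count: int) -> tuple[int, int]:
    """Return (CUDA cores, Tensor Cores) for a GA100 part with sm_count SMs enabled."""
    return sm_count * CUDA_CORES_PER_SM, sm_count * TENSOR_CORES_PER_SM


def uplift_pct(new: float, old: float) -> float:
    """Percentage increase of new over old."""
    return (new / old - 1.0) * 100.0


std_cuda, std_tensor = unit_counts(STANDARD_A100_SMS)
new_cuda, new_tensor = unit_counts(A100_7936SP_SMS)

print(f"standard A100: {std_cuda} CUDA cores, {std_tensor} Tensor Cores")
print(f"A100 7936SP:   {new_cuda} CUDA cores, {new_tensor} Tensor Cores")
print(f"extra SMs: {A100_7936SP_SMS - STANDARD_A100_SMS}")
print(f"uplift: {uplift_pct(new_cuda, std_cuda):.1f}%")
print(f"SMs still disabled vs. full GA100: {FULL_GA100_SMS - A100_7936SP_SMS}")
```

The standard A100's 6912 CUDA cores work out to 108 SMs and the A100 7936SP's 7936 cores to 124 SMs, a gap of 16 SMs and an uplift of about 14.8%, which rounds to the 15% quoted above.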

NVIDIA's previous-gen Ampere A100 is offered in 40GB and 80GB configurations, while the A100 7936SP is being sold in China in 40GB and 96GB variants.

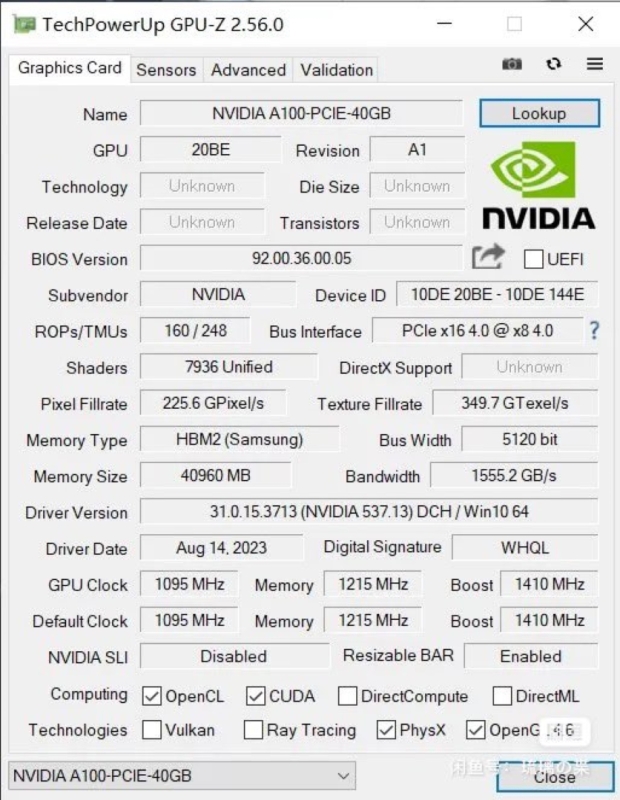

The new A100 7936SP 40GB variant gets a huge upgrade in base GPU clock, from 765MHz on the standard A100 40GB to a whopping 1215MHz (a 59% increase). Boost clocks on the two 40GB cards are the same, at 1410MHz.

As for the GPU itself, the A100 7936SP 40GB has 124 SMs enabled, with 7936 CUDA cores (hence the name) and 496 Tensor/AI cores. The standard A100 40GB, by comparison, has 6912 CUDA cores and 432 Tensor/AI cores, a chunky cut-down next to the A100 7936SP 40GB.
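To put the shared boost clock and the higher base clock in context, the sketch below estimates theoretical dense FP16 Tensor Core throughput for the two 40GB cards. It assumes 256 FP16 fused multiply-adds per Tensor Core per clock (the figure NVIDIA gives for Ampere's third-generation Tensor Cores), counts each FMA as two operations, and ignores sparsity, so real workloads will land below these peaks. The function and dictionary names are illustrative only.

```python
# Assumption: each Ampere (third-gen) Tensor Core performs 256 dense FP16
# fused multiply-adds per clock; one FMA counts as 2 floating-point operations.
FP16_FMA_PER_TENSOR_CORE_PER_CLK = 256
FLOPS_PER_FMA = 2


def peak_fp16_tflops(tensor_cores: int, clock_mhz: float) -> float:
    """Theoretical dense FP16 Tensor Core throughput in TFLOPS (no sparsity)."""
    flops_per_clk = tensor_cores * FP16_FMA_PER_TENSOR_CORE_PER_CLK * FLOPS_PER_FMA
    return flops_per_clk * clock_mhz * 1e6 / 1e12


# Tensor Core counts and clocks as quoted in this article for the 40GB cards.
cards = {
    "A100 40GB": {"tensor_cores": 432, "base_mhz": 765, "boost_mhz": 1410},
    "A100 7936SP 40GB": {"tensor_cores": 496, "base_mhz": 1215, "boost_mhz": 1410},
}

for name, spec in cards.items():
    base = peak_fp16_tflops(spec["tensor_cores"], spec["base_mhz"])
    boost = peak_fp16_tflops(spec["tensor_cores"], spec["boost_mhz"])
    print(f"{name}: ~{base:.0f} TFLOPS at base clock, ~{boost:.0f} TFLOPS at boost clock")
```

At the 1410MHz boost clock this gives roughly 312 TFLOPS for the standard A100, matching NVIDIA's published dense FP16 figure, versus roughly 358 TFLOPS for the A100 7936SP 40GB, the same ~15% gap as the core counts. The higher base clock mainly matters when a card can't hold its boost clock: at base clock the estimate is about 309 TFLOPS for the A100 7936SP 40GB, versus about 169 TFLOPS for the standard A100 40GB.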

Now, let's talk VRAM. The higher-end A100 7936SP 96GB AI GPU has more VRAM than the standard A100 (96GB of HBM2e versus 80GB), and it also sits on a wider bus: 6144-bit with up to 2.16TB/sec of memory bandwidth, up from the 5120-bit bus and 1.94TB/sec of memory bandwidth on the regular A100 80GB AI GPU.

The VRAM subsystem on the new A100 7936SP 40GB is identical to the A100 40GB: 40GB of HBM2e on a 5120-bit memory bus with up to 1.56TB/sec of memory bandwidth.
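The memory figures can be cross-checked with simple arithmetic. The sketch below assumes each HBM2e stack has a 1024-bit interface (so a 6144-bit bus means six stacks and a 5120-bit bus means five) and uses peak bandwidth = bus width × per-pin data rate / 8 to back out the per-pin rate implied by the bandwidth numbers quoted in this article. Those quoted bandwidths are rounded, so treat the results as approximate; `hbm_breakdown` is a hypothetical helper.

```python
# Assumption: one HBM2e stack = 1024-bit interface.
# Peak bandwidth (bytes/s) = bus width (bits) x per-pin rate (bits/s) / 8,
# so the implied per-pin rate is bandwidth x 8 / bus width.
HBM_STACK_WIDTH_BITS = 1024


def hbm_breakdown(bus_bits: int, bandwidth_tb_s: float, capacity_gb: int) -> tuple[int, float, float]:
    """Return (active stacks, implied per-pin rate in Gbps, GB per stack)."""
    stacks = bus_bits // HBM_STACK_WIDTH_BITS
    pin_rate_gbps = bandwidth_tb_s * 1e12 * 8 / bus_bits / 1e9
    gb_per_stack = capacity_gb / stacks
    return stacks, pin_rate_gbps, gb_per_stack


# (name, bus width in bits, bandwidth in TB/s, capacity in GB) as quoted above.
configs = [
    ("A100 7936SP 96GB", 6144, 2.16, 96),
    ("A100 80GB", 5120, 1.94, 80),
    ("A100 / A100 7936SP 40GB", 5120, 1.56, 40),
]

for name, bus, bw, cap in configs:
    stacks, rate, per_stack = hbm_breakdown(bus, bw, cap)
    print(f"{name}: {stacks} stacks x {per_stack:.0f}GB, ~{rate:.2f}Gbps per pin")
```

On these numbers, both the 80GB and 96GB cards use 16GB stacks, so the 96GB card's extra capacity and bandwidth come from a sixth active stack; its implied per-pin rate (about 2.81Gbps) is actually a little lower than the A100 80GB's (about 3.03Gbps).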

NVIDIA's standard A100 80GB and A100 40GB PCIe cards have TDPs of 300W and 250W, respectively, so we'd expect the beefed-up A100 7936SP 96GB to have a somewhat higher TDP, perhaps around 350W.

Chinese sellers are listing the A100 7936SP 96GB model for between $18,000 and $19,800, and we don't know whether these AI accelerators are engineering samples leaked from NVIDIA's own labs or custom models the chipmaker developed for a particular client. Whatever they are, these cards are real, and they are more powerful than US export regulations allow.