A Princeton neuroscientist has warned that artificial intelligence-powered chatbots such as ChatGPT are sociopaths without the one thing that makes humans special.

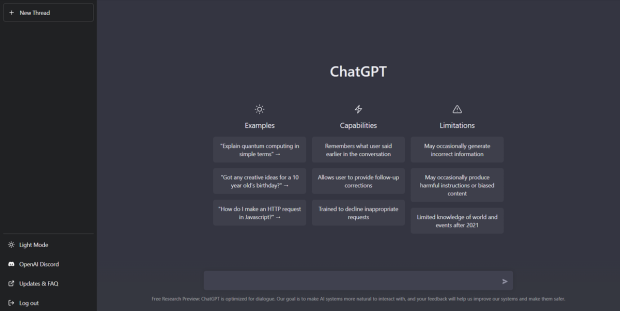

In a new essay detailed by The Wall Street Journal, Princeton neuroscientist Michael Graziano argues that AI-powered chatbots are effectively sociopaths because they lack consciousness, and that until developers can build consciousness into them, they will pose a real danger to humans. For those who don't know, AI chatbots such as ChatGPT are designed to hold human-like conversations: they remember what the human wrote earlier in the conversation and provide thorough answers to questions almost in real time.

While the dangers of AI may be limited now, that could very well change as these sophisticated tools are further upgraded and developed. To make them more human-like, Graziano proposes that they be taught human traits such as empathy and prosocial behavior. Notably, the neuroscientist says these systems will need some form of built-in consciousness to understand these traits and, in turn, adjust their responses to align more closely with human values.

However, there is a big problem. Consciousness is very difficult to measure, and philosophically speaking, it's hard to know whether some people or machines are conscious at all. Graziano proposes testing an AI with a "reverse Turing test." The standard Turing test assesses a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. Instead of a human testing a machine to see whether it performs as a human would, Graziano says the reverse test should determine whether the computer can tell if it's talking to a human or to another computer.

Furthermore, Graziano says that if these problems aren't solved, humans will have built powerful sociopathic machines capable of making consequential decisions. For now, ChatGPT and other language models are mere "toys," according to Graziano. However, that may well change within one to five years as development continues and machine consciousness is explored.

"A sociopathic machine that can make consequential decisions would be powerfully dangerous. For now, chatbots are still limited in their abilities; they're essentially toys. But if we don't think more deeply about machine consciousness, in a year or five years we may face a crisis," said Graziano.
