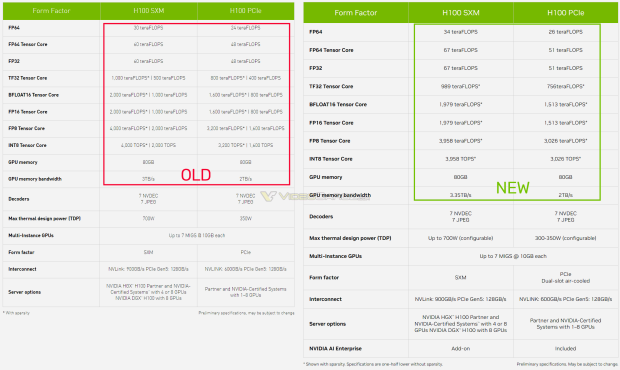

NVIDIA has updated the specifications for its new Hopper H100 datacenter GPU, just two weeks after the company first detailed the huge chip.

The updated NVIDIA Hopper H100 GPU has higher single-precision and double-precision compute performance numbers: during the announcement we heard it would have 30 TFLOPS of FP64 compute performance, but Hopper H100 is now rated at 34 TFLOPS of FP64 compute performance.

NVIDIA has up to 16896 CUDA cores inside its Hopper H100 GPU, which will run at a GPU clock of at least 1982MHz, not the 1775MHz that was previously reported. NVIDIA is tapping the same custom TSMC N4 process node that it is using for the Ada Lovelace GPU architecture in its upcoming GeForce RTX 40 series graphics cards.
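The jump in FP64 throughput lines up with the higher clock. The sketch below is a back-of-the-envelope check, not NVIDIA's own method. It assumes each CUDA core retires one fused multiply-add (2 FLOPs) per clock and that FP64 runs at half the FP32 rate; neither assumption comes from the article. The helper name `peak_tflops` and the constant `FP64_RATIO` are made up for this illustration.

```python
def peak_tflops(cuda_cores: int, clock_mhz: float,
                flops_per_core_per_clock: float = 2.0) -> float:
    """Theoretical peak throughput in TFLOPS.

    Assumes one fused multiply-add (2 FLOPs) per core per clock by default.
    """
    return cuda_cores * flops_per_core_per_clock * clock_mhz * 1e6 / 1e12


CUDA_CORES = 16896   # core count quoted in the article
FP64_RATIO = 0.5     # assumption: FP64 runs at half the FP32 rate

for label, clock_mhz in (("previously reported", 1775), ("updated", 1982)):
    fp32 = peak_tflops(CUDA_CORES, clock_mhz)
    fp64 = fp32 * FP64_RATIO
    print(f"{label} ({clock_mhz}MHz): "
          f"FP32 ~{fp32:.1f} TFLOPS, FP64 ~{fp64:.1f} TFLOPS")
```

Under these assumptions the 1775MHz clock gives roughly 30 TFLOPS of FP64 and the 1982MHz clock roughly 33.5 TFLOPS, which is close to the 30 and 34 TFLOPS figures quoted above.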

The company has also updated the INT8, FP8, FP16, and BFLOAT16 numbers for the Hopper H100, not just FP64, with slightly lower performance across the board there.

As for the NVIDIA Hopper H100 itself, the company will be making it in both SXM (mezzanine connector) and PCIe 5.0 form. The SXM version of the Hopper H100 gets the full 700W TDP, while the PCIe-based GPU will have just a 350W TDP. NVIDIA will be shipping its new Hopper H100 GPUs in Q1 2023 with NVIDIA DGX workstation systems, though the GPU itself is already in production.