Facebook already knows the smallest nitty-gritty details about our lives, but anyone who follows the company closely knows it is also working on some freaky Black Mirror-level stuff in its R&D labs.

In a major update on its brain-computer interface work, the social networking giant described the strides it has made towards being able to "decode silent speech" without implanting electrodes in the brain. Facebook first unveiled its brain-computer interface research initiative at its F8 conference in 2017, and has since worked with researchers at the University of California, San Francisco.

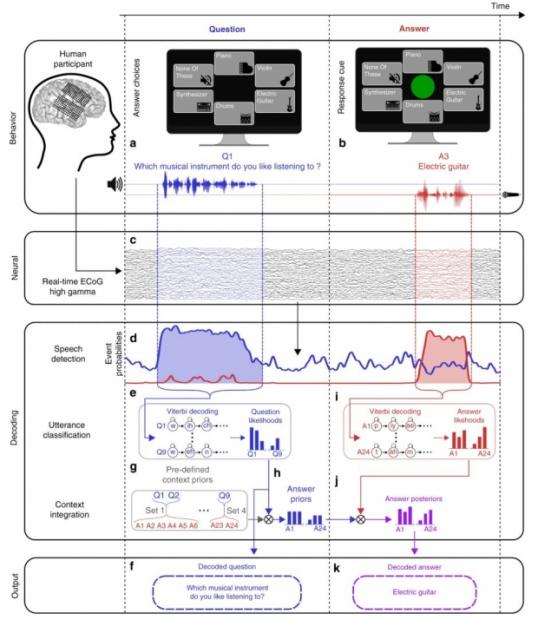

The researchers have published a new paper in Nature Communications detailing their work with people who have had brain surgery for epilepsy, in which they created an algorithm that can "decode a small set of full, spoken words and phrases from brain activity in real-time".

Obviously, Facebook isn't going to go around drilling into users' heads (well, maybe not yet), and it notes that working with brain surgery patients is definitely not the "non-invasive" approach it wants.

Facebook's own AR and VR research arm, Reality Labs, has been looking into non-invasive alternatives. One of its current methods detects brain activity by monitoring oxygen levels in the brain with "a portable, wearable device made from consumer-grade parts". Right now it is "bulky, slow, and unreliable". But future models? Who knows.

Facebook said in a statement: "While measuring oxygenation may never allow us to decode imagined sentences, being able to recognize even a handful of imagined commands, like 'home,' 'select,' and 'delete,' would provide entirely new ways of interacting with today's VR systems - and tomorrow's AR glasses".

"Thanks to the commercialization of optical technologies for smartphones and LiDAR, we think we can create small, convenient BCI devices that will let us measure neural signals closer to those we currently record with implanted electrodes - and maybe even decode silent speech one day", it added.