DeepSeek AI is making headlines for doing more with less: building complex, powerful open-source large language models (LLMs) with a fraction of the hardware used by some of the most prominent AI players. KIOXIA, a pioneer and leader in flash memory technology, is making a similar case on the storage side, showcasing how its all-in-storage AiSAQ technology lets AI data retrieval scale without the need for expensive DRAM.

At CES, we got to check out some cool new technology, but one AI-based breakthrough blew us away with its potential to shake up the industry. KIOXIA's open-source AiSAQ (All-in-Storage ANNS with Product Quantization) is all about leveraging the cost-effectiveness of SSDs over DRAM for data retrieval. And when it comes to AI, we're talking about a lot of data.

Today marks the open-source release of AiSAQ, which delivers exponentially scalable performance for retrieval-augmented generation (RAG) without placing index

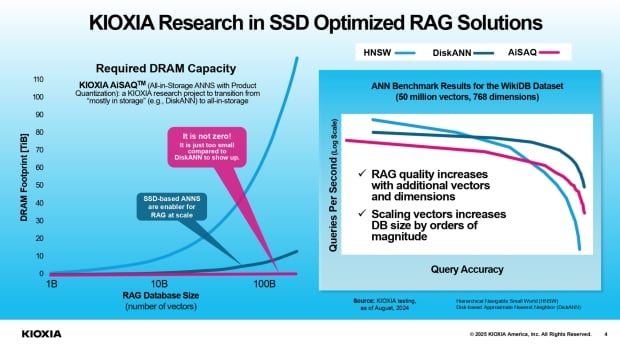

data in DRAM. Searching directly on SSDs is a groundbreaking solution for generative AI systems, and performance and accuracy remain impressive whether AiSAQ is dealing with 10 billion vectors or 100 billion.
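
To get a feel for why those vector counts matter, here is a rough back-of-the-envelope sketch of how much space the data alone could occupy. The 128-dimension float32 vectors and 32-byte compressed codes are our own illustrative assumptions, not figures published by KIOXIA, and the helper names are hypothetical.

```python
# Back-of-the-envelope footprint estimate. All sizes below are illustrative
# assumptions, not AiSAQ specifications.

GIB = 1024 ** 3


def raw_vector_bytes(num_vectors: int, dim: int, bytes_per_value: int) -> int:
    """Bytes needed to hold every full-precision vector."""
    return num_vectors * dim * bytes_per_value


def compressed_code_bytes(num_vectors: int, bytes_per_code: int) -> int:
    """Bytes needed to hold one fixed-size compressed code per vector."""
    return num_vectors * bytes_per_code


ASSUMED_DIM = 128            # assumption: 128-dimensional embeddings
ASSUMED_VALUE_BYTES = 4      # assumption: float32 components
ASSUMED_CODE_BYTES = 32      # assumption: 32-byte product-quantization codes

for n in (10_000_000_000, 100_000_000_000):
    raw = raw_vector_bytes(n, ASSUMED_DIM, ASSUMED_VALUE_BYTES)
    codes = compressed_code_bytes(n, ASSUMED_CODE_BYTES)
    print(f"{n:>15,} vectors: raw {raw / GIB:,.0f} GiB, codes {codes / GIB:,.0f} GiB")
```

Under these assumptions, even the compressed codes run to hundreds of gigabytes at 10 billion vectors, which is the kind of footprint that is far cheaper to hold on SSDs than in DRAM.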

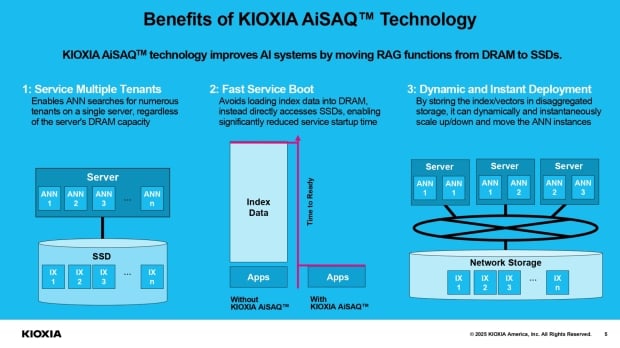

Once data has been converted into vectors and stored in a database, the "approximate nearest neighbor search" (ANNS) algorithms that query it have traditionally run in DRAM for speed. KIOXIA AiSAQ instead keeps the index on SSDs, an approach that also supports seamless switching between user-specific or application-specific databases for greater efficiency, and the disaggregated storage can be shared across multiple servers.
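
For readers curious about the "Product Quantization" in AiSAQ's name, the following is a minimal, generic sketch of the idea in plain Python: split each vector into sub-vectors, learn a small codebook per sub-space with k-means, and store only the centroid indices. It is a toy illustration under our own assumptions (tiny random data, hypothetical function names) and is not AiSAQ's actual implementation or API.

```python
import random


def split(vec, m):
    """Split a vector into m equal-length sub-vectors."""
    step = len(vec) // m
    return [vec[i * step:(i + 1) * step] for i in range(m)]


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_codebook(subvectors, k, iters=10, seed=0):
    """Plain k-means (Lloyd's algorithm) over a single sub-space."""
    rng = random.Random(seed)
    centroids = rng.sample(subvectors, k)
    for _ in range(iters):
        buckets = [[] for _ in range(k)]
        for v in subvectors:
            nearest = min(range(k), key=lambda c: sq_dist(v, centroids[c]))
            buckets[nearest].append(v)
        for c, bucket in enumerate(buckets):
            if bucket:  # keep the old centroid if nothing was assigned to it
                centroids[c] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids


def encode(vec, codebooks):
    """Replace each sub-vector with the index of its nearest centroid."""
    subs = split(vec, len(codebooks))
    return [min(range(len(cb)), key=lambda c: sq_dist(sub, cb[c]))
            for sub, cb in zip(subs, codebooks)]


def approx_sq_dist(query, code, codebooks):
    """Approximate distance from a full query to a compressed vector."""
    subs = split(query, len(codebooks))
    return sum(sq_dist(sub, cb[c]) for sub, cb, c in zip(subs, codebooks, code))


# Toy data: 200 random 8-dimensional vectors, 4 sub-spaces, 4 centroids each.
DIM, M, K = 8, 4, 4
rng = random.Random(42)
data = [[rng.random() for _ in range(DIM)] for _ in range(200)]

codebooks = [train_codebook([split(v, M)[j] for v in data], K) for j in range(M)]
codes = [encode(v, codebooks) for v in data]

# Rank the compressed vectors against a query and keep the five closest.
query = data[0]
ranked = sorted(range(len(data)),
                key=lambda i: approx_sq_dist(query, codes[i], codebooks))
print("approximate nearest neighbours:", ranked[:5])
```

Each vector shrinks from eight floats to four small integers here, at the cost of some accuracy, which is why the search is "approximate." Production systems pair codes like these with a graph or other index structure rather than the brute-force ranking shown above.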

"Our AiSAQ solution unlocks the potential for RAG applications to scale nearly without limits, with flash-based SSDs at the heart of it all," said Neville Ichhaporia, senior vice president and general manager of the SSD business unit at KIOXIA America, Inc. "By leveraging SSD-based ANNS, we deliver performance comparable to leading in-memory solutions, but using a fraction of expensive DRAM - making large-scale RAG applications more accessible than ever."

KIOXIA AiSAQ is available to download via GitHub.