
Introduction

SSDs have continued to mature as manufacturers search for refined methods to exploit the high performance and low latency of flash. What began as standard 2.5" drives with SAS/SATA connections morphed into PCIe-connected SSDs and M.2 designs that communicate via the PCIe bus to achieve lower latency. New standards, such as NVMe, have even come to fruition (covered in our Defining NVMe article). Each step on the path to creating the perfect storage device has resulted in tangible benefits, but the ULLtraDIMM has taken what could be the final step, short of fusing a storage medium onto the CPU itself. The SanDisk ULLtraDIMM DDR3 SSD brings latency as low as five microseconds by sidestepping the traditional storage stack and communicating via the DDR3 bus.

The first time I heard of ULLtraDIMM was during a press briefing with John Scaramuzzo, then the CEO of SMART Technologies, and now Senior Vice President and GM of SanDisk's Enterprise Storage Solutions team. SMART was a scrappy upstart spinning its way to becoming a behemoth of the enterprise SSD world, and John described several of the challenges of bringing such a revolutionary technology to market. Not only did they need to develop the product, but they also had to create an ecosystem of test, validation, and field support tools. They also needed to forge partnerships with OEMs to bring the product to market.

The initial key partnership was with Diablo Technologies, a Canadian company with ten years of experience in the memory subsystem space. Diablo developed the Memory Channel Storage (MCS) architecture (covered in more detail on the following pages), which delivers end-to-end parallelism by leveraging the memory subsystem. MCS's current incarnation works with NAND flash, but the forward-thinking architecture can also support future non-volatile memory technologies. Diablo provides the reference architecture, DDR3-to-SSD ASIC and firmware, kernel and application-level software development, OEM system integration, and ISV partnerships.

Another big step came when SanDisk acquired SMART in July of 2013. The move to SanDisk enabled direct access to NAND manufacturing and engineering, along with a wide range of OEM partnerships in the global market. SanDisk provides the SSD ASIC and FTL firmware development and testing, the Guardian Technology Platform, supply chain, manufacturing, and system validation.

Eventually, ULLtraDIMMs (Ultra Low Latency DIMM) will be plug-and-play with in-box drivers, but initial revisions will require UEFI BIOS modifications. OEM integration is important to provide compatible platforms. IBM (now Lenovo) was the first OEM to deploy ULLtraDIMMs, up to 12.8 TB per system, rebranded as eXFlash in their System x3850 and x3950 X6 servers. Supermicro followed suit, and is integrating ULLtraDIMMs into seven different models in their Green SuperServer and SuperStorage platforms. Huawei recently announced RH8100 V3 servers will feature an ULLtraDIMM option, and Diablo has mentioned that other OEM partnerships are in the works.

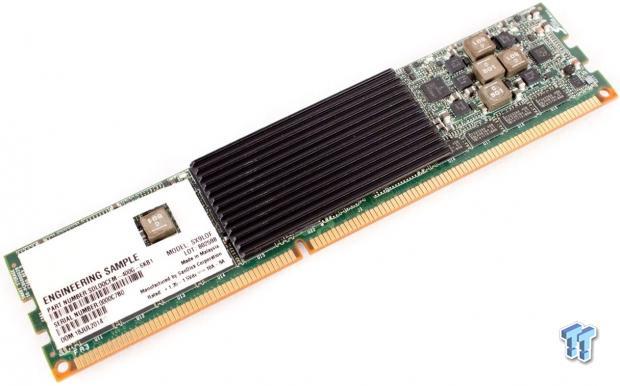

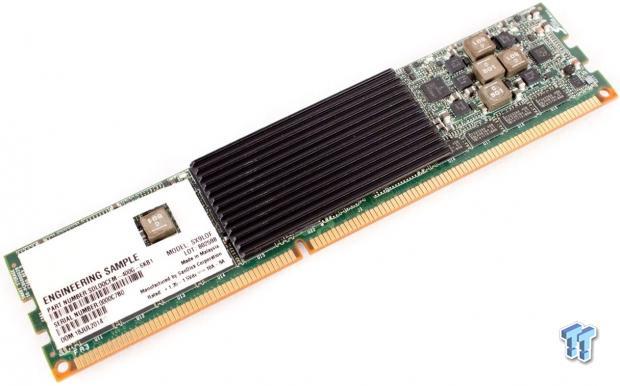

The hardware consists of a JEDEC-compliant ULLtraDIMM that presents itself as a block storage device with 200 or 400GB of capacity. The ULLtraDIMM utilizes two Marvell 88SS9187 controllers running the Guardian Technology Platform to increase endurance and reliability. This tandem delivers random read/write performance of 140,000/40,000 IOPS, and sequential read/write speeds up to 880/600 MB/s. Ten DWPD (Drive Writes Per Day) of endurance, and a five-year warranty (or TBW) are provided by SanDisk 19nm eMLC NAND. The ULLtraDIMM also sports a 2.5 million hour MTBF, and host power-loss protection. The most important attribute is latency as low as five microseconds. This even undercuts new NVMe PCIe SSDs, which hover around 20 microseconds.
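To put the endurance rating in perspective, the DWPD figure can be converted into total lifetime writes. The sketch below is simple arithmetic, not a SanDisk-published TBW figure; it assumes decimal gigabytes, 365-day years, and that the ten DWPD rating applies over the full five-year warranty period. The function name is ours.

```python
def lifetime_writes_tb(capacity_gb: float, dwpd: float, years: float) -> float:
    """Convert a DWPD rating into total terabytes written over the rated period.

    Assumes decimal units (1 TB = 1000 GB) and 365-day years.
    """
    days = years * 365
    return capacity_gb * dwpd * days / 1000  # GB -> TB


for capacity_gb in (200, 400):
    tbw = lifetime_writes_tb(capacity_gb, dwpd=10, years=5)
    print(f"{capacity_gb} GB at 10 DWPD for 5 years: {tbw:,.0f} TB written")
```

Under those assumptions, the 400GB model works out to 7,300 TB of writes over five years, and the 200GB model to half of that.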

Robust ten DWPD endurance enables the ULLtraDIMM to satisfy the requirements of write-intensive and mixed-workload applications. It is well suited for HPC, HFT, transactional workloads, big data, analytics, VDI, and cloud computing architectures. The ULLtraDIMM's distributed architecture exploits parallelism for performance advantages, and scales to meet the capacity and performance requirements of applications. The ideal configuration for an ULLtraDIMM deployment is four to eight DIMMs at a minimum, but the platform scales up to 32 DIMMs and 12.8 TB of storage. It is important to note that our evaluation only utilizes two ULLtraDIMMs for testing.

With the players in position, SanDisk and Diablo are poised to radically alter server-side flash utilization. ULLtraDIMM will enable new designs for blade and microservers by eliminating HBA/RAID controllers, cabling, and complexity. SanDisk has displayed incredibly small servers with impressive storage density at several trade shows. Let's take a closer look at the technical aspects of the ULLtraDIMM.

Internals and Specifications

SanDisk ULLtraDIMM Internals and Specifications

The ULLtraDIMM plugs into an industry-standard 240-pin DIMM slot, and features a low-profile (LP) 30mm x 133.35mm RDIMM form factor. The DIMM is compatible with the JEDEC DDR3 protocol, and operates at the full DDR bus rate, from DDR3-800 through DDR3-1600. The device follows the same motherboard population rules that apply to standard DRAM sticks, and all communication with the host occurs on the memory bus. The device is interoperable with standard RDIMMs in the same channel, and appears as a single rank to the system. The ULLtraDIMM is compatible with Linux (RHEL, SLES, CentOS), Windows, and VMware ESXi.

Many servers already have spare DRAM slots, especially in comparison to the number of available PCIe slots. There are up to 96 slots available on the memory bus. SanDisk and Diablo are also developing new DDR4 ULLtraDIMMs. With new high-density DDR4 128GB DRAM sticks, there is flexibility to deploy plenty of DRAM and ULLtraDIMMs to satiate even the most demanding workload requirements.

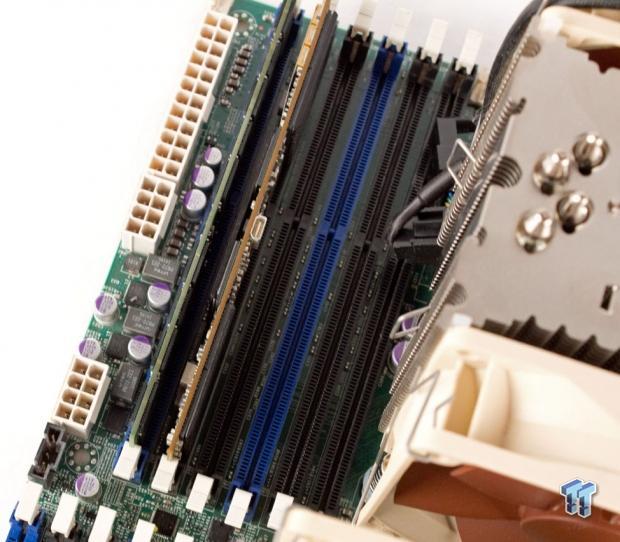

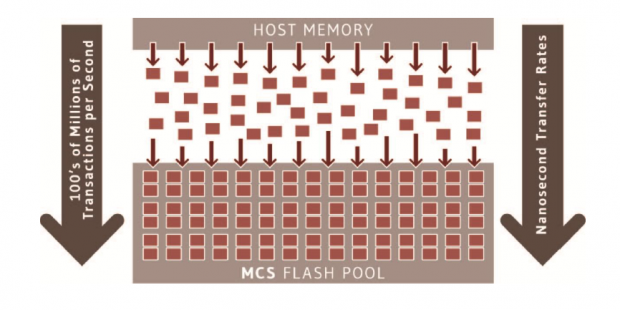

The close orientation of the devices illustrates the limited real estate on a typical motherboard. The memory slots have such limited space due to the high-speed parallel bus, which must be in close proximity to the CPU because it cannot transmit over long distances. PCIe is a serial bus, allowing it to be further from the CPU, but has an overall bandwidth limitation of 16 GB/s. PCIe bandwidth is carved up into an allocation for each lane, meaning a 16-lane device effectively has a top speed of 8 GB/s. A single DRAM slot, in contrast, can transfer 12.8 GB/s at nanosecond latencies.
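The 12.8 GB/s figure for a single DRAM slot follows directly from the DDR3 transfer rate and the width of the data bus. The snippet below is a back-of-the-envelope sketch, assuming a 64-bit (8-byte) data path per channel with ECC bits excluded, and using nominal JEDEC speed-grade names. It gives theoretical peak rates, not what the ULLtraDIMM itself sustains.

```python
BUS_WIDTH_BYTES = 8  # 64-bit DDR3 data path; ECC bits are not counted


def ddr3_peak_gb_per_s(mega_transfers_per_s: int) -> float:
    """Theoretical peak bandwidth of one DDR3 channel in decimal GB/s."""
    return mega_transfers_per_s * BUS_WIDTH_BYTES / 1000


for speed_grade in (800, 1066, 1333, 1600):
    peak = ddr3_peak_gb_per_s(speed_grade)
    print(f"DDR3-{speed_grade}: {peak:.1f} GB/s peak")
```

At DDR3-1600 this yields the 12.8 GB/s quoted above; at DDR3-800 the peak is halved.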

There are no physical modifications of existing hardware for ULLtraDIMM compatibility, but UEFI BIOS changes are required. The BIOS modifications disable memtest, reserve memory space for the device, and create an entry for the ULLtraDIMM in the ACPI table. ULLtraDIMMs are designed to fit within the power and thermal envelopes of the existing memory subsystem. The 12.5W power draw and heat dissipation are well within the tolerance of a standard server. The ULLtraDIMMs' vertically aligned heat sinks take advantage of the server's native linear airflow pattern.

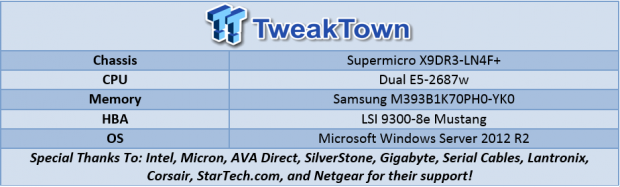

We tested the ULLtraDIMMs on a Supermicro X9DR3-LN4F+ development system. The X9DR3-LN4F+ features the C606 chipset, supports the E5-2600 v2 family, and up to 1.5TB of ECC DDR3 DRAM. The system also features six PCIe slots and 4x Gigabit Ethernet ports via an Intel i350 controller. 2x SATA 6Gb/s ports, 4x SATA 3Gb/s ports, and 6x SAS ports round out an impressive stable of connectivity accentuated with ULLtraDIMM compatibility.

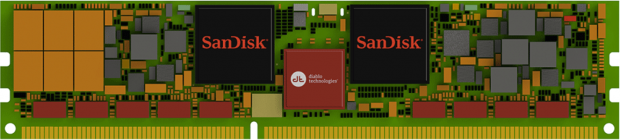

Many of the surface-mounted components of the actual device are covered by heat sinks. The large SanDisk blocks in the illustration are dual Marvell 88SS9187 controllers that control the two banks of NAND on the other side of the device. There is a 1GB Micron DRAM package for each respective SSD ASIC next to the NAND. Each configuration is built from CloudSpeed 1000E components (covered here).

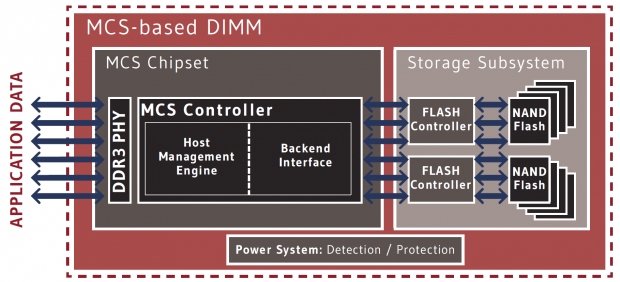

Each module features 16 independent channels into the DIMM. The nine red boxes along the bottom of the ULLtraDIMM, and the large chip in the center, are Diablo components. The DDR3 interface layer provides the electrical and timing interface. The Diablo chipset is responsible for capturing data from the host management engine by decoding host commands, and deciphering packets. Utilizing on-board ASICs keeps host overhead low by removing the lion's share of processing burden from the host.

The six PolyTantalum capacitors, which are an integral part of the third-generation EverGuard design, provide power-loss protection for the entire device, including the MCS chipset. This guarantees all data will be persisted to the underlying media in the event of a power-loss event. A debug port (not used in deployment) is located on the top of the ULLtraDIMM.

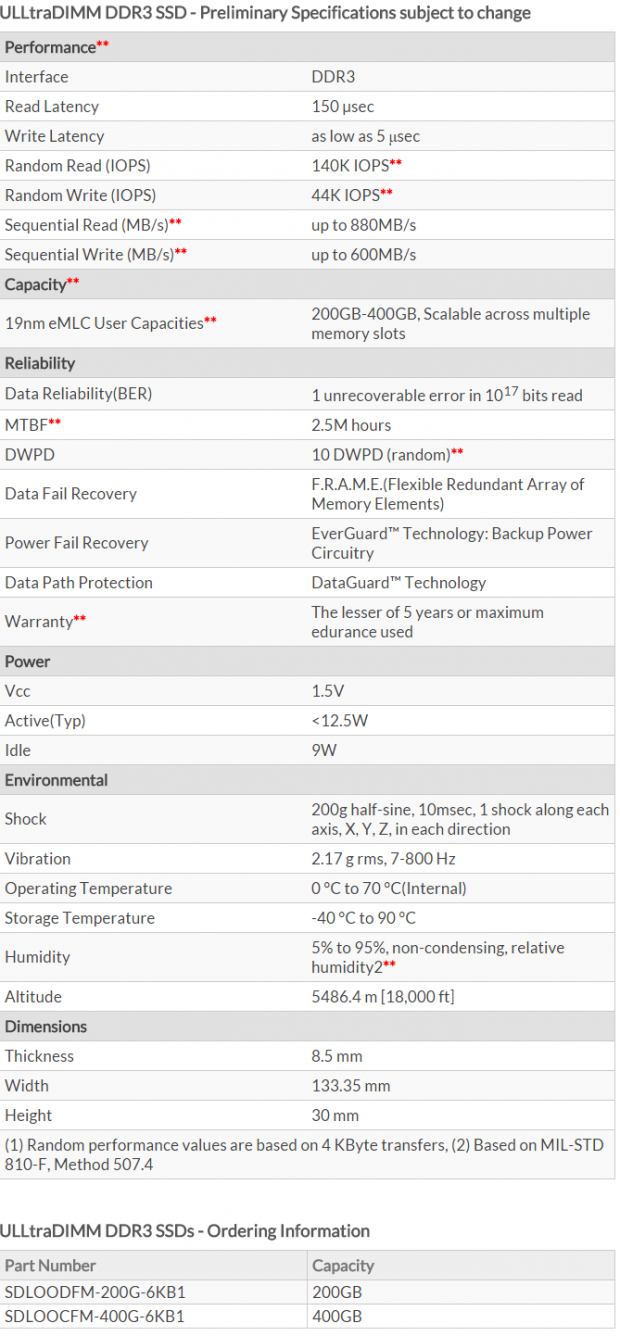

Specifications

The ULLtraDIMM is JEDEC-compliant with JC-45-2065.45 and JESD218 standards.

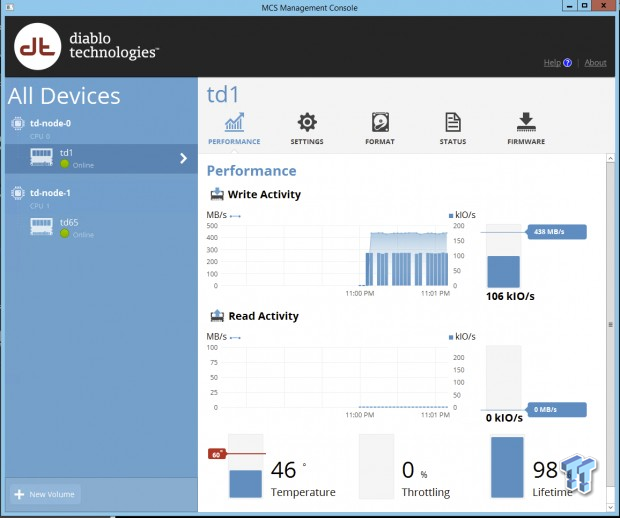

MCS Management Console

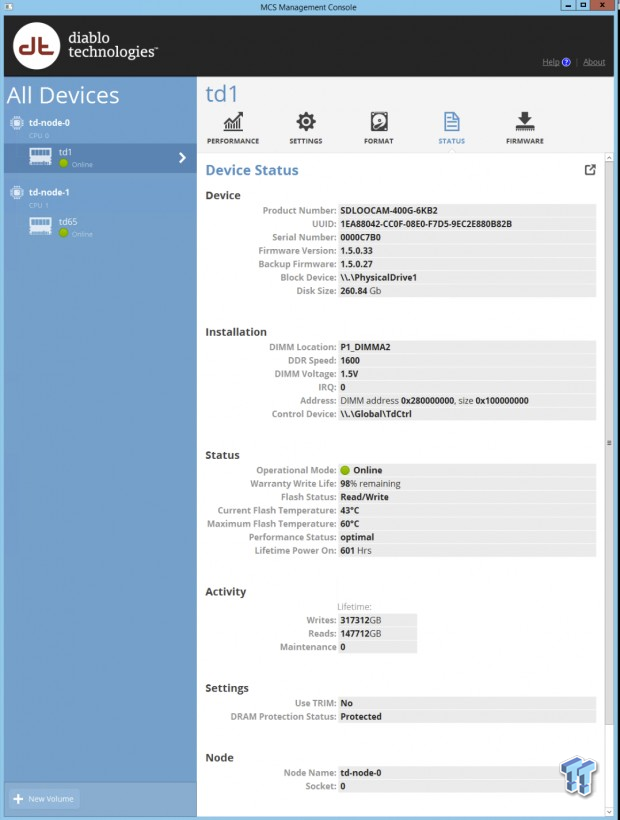

The MCS Management Console is simple and intuitive. It provides granular control of the device, and real-time performance, endurance, and thermal monitoring.

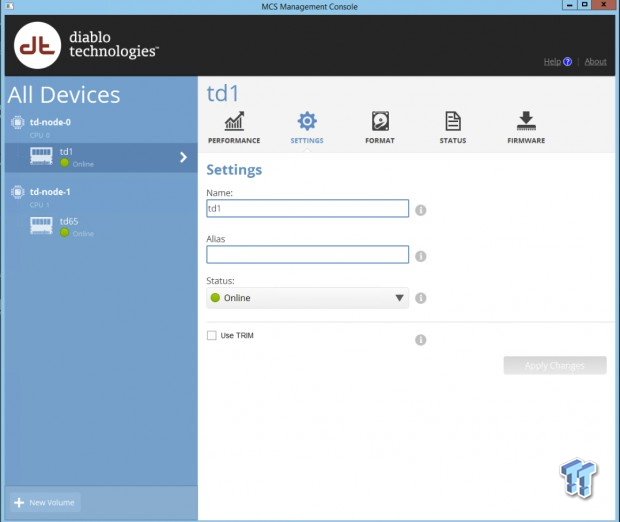

In the settings pane, the device can be placed offline for maintenance purposes. Maintenance mode allows the creation of new arrays with the selection to the bottom left, and ULLtraDIMMs can be configured in striped or mirrored arrays. Users can also indicate whether they would like to utilize TRIM functionality, which is a great inclusion for an enterprise SSD.

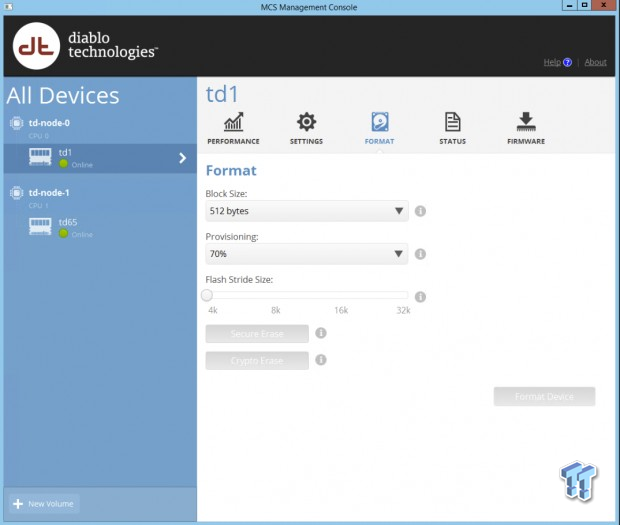

The ULLtraDIMM is configurable with 512B or 4K alignments. Each ULLtraDIMM has 28% of transparent overprovisioning. Extra overprovisioning can be added in 10% increments, up to 90% of the addressable capacity. Extra overprovisioning provides better performance, consistency, and endurance.

This incredible granularity allows for easy tuning of the SSD to varying workloads. There are also Format, Secure Erase, and Crypto Erase options.
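As a rough illustration of the overprovisioning settings, the sketch below computes the capacity left to the host for a given amount of extra overprovisioning. It assumes the 28% transparent overprovisioning is already excluded from the addressable capacity and that the extra percentage is taken from that addressable capacity; the function name and validation rules are ours, not part of the MCS Management Console.

```python
def user_capacity_gb(addressable_gb: float, extra_op_percent: int) -> float:
    """Capacity visible to the host after reserving extra overprovisioning.

    The console offers extra overprovisioning in 10% steps up to 90%.
    """
    if extra_op_percent % 10 != 0 or not 0 <= extra_op_percent <= 90:
        raise ValueError("extra overprovisioning must be 0-90% in 10% steps")
    return addressable_gb * (100 - extra_op_percent) / 100


for extra in (0, 30, 90):
    print(f"400 GB with {extra}% extra OP -> {user_capacity_gb(400, extra):.0f} GB usable")
```

With 30% extra overprovisioning, a 400GB ULLtraDIMM would expose 280GB to the host, trading capacity for write performance and endurance.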

The status area allows us to observe just how much pain we put on the ULLtraDIMM during initial testing. The lifetime read and write activity is on display, along with power on hours, and other relevant information. We thoroughly tested every parameter with no issues.

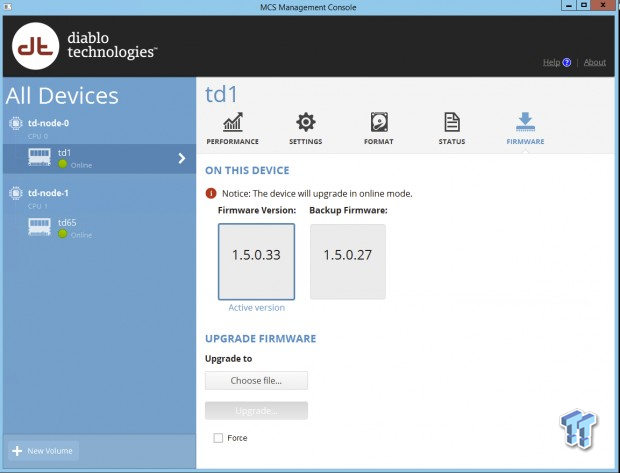

The MCS Management Console allows for in-system firmware updates, and upgrades occur while the device is in online mode.

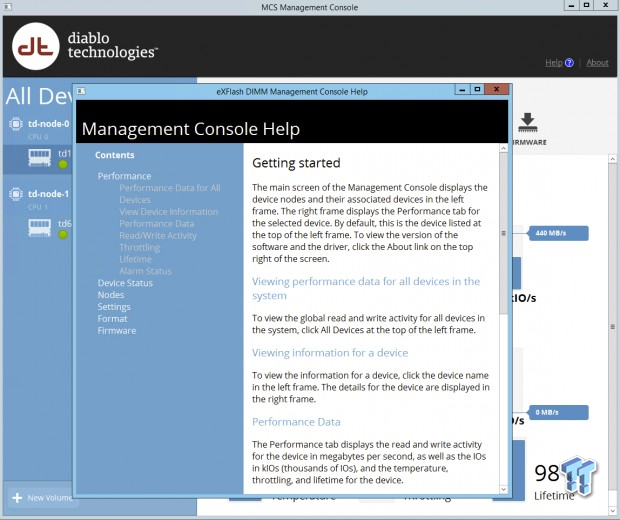

An exhaustive help section explains the functions and features.

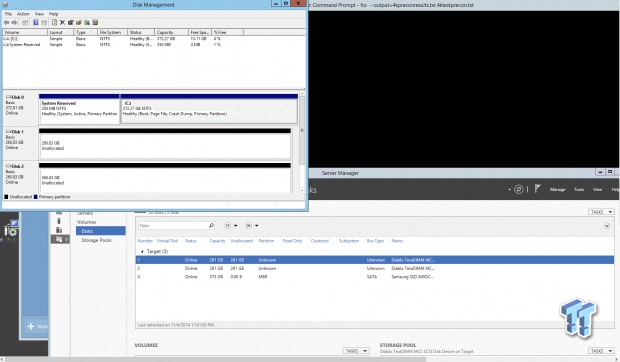

Once the device is brought online in the MCS Management Console, it immediately appears in the Disk Management and Server Manager utilities. The ULLtraDIMM is configurable, and operates as a normal block storage device.

MCS Architecture and VSAN

MCS Architecture

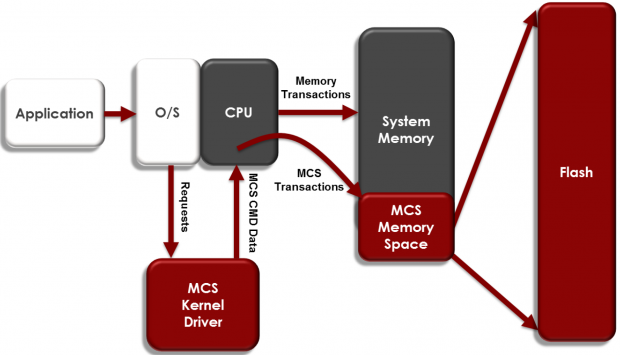

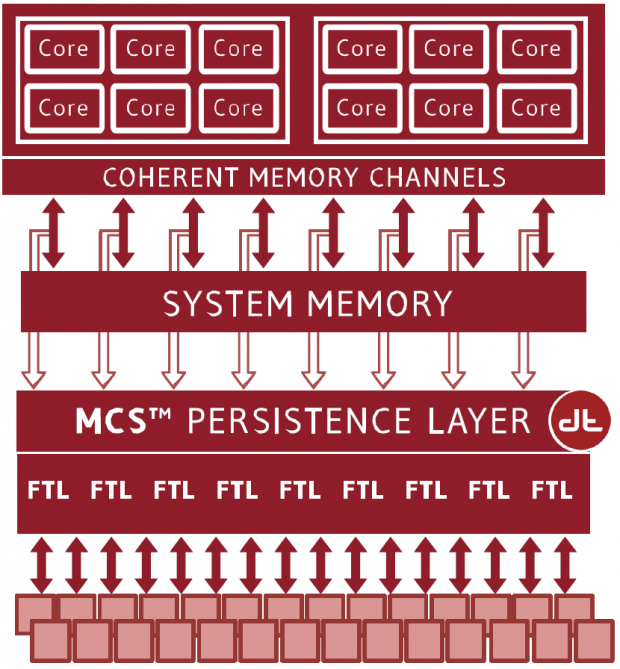

The benefits of deploying flash within the parallel memory subsystem, and keeping I/O in the CPU where processing and applications reside, are apparent in this graphic. Typically, data is DMA'd back and forth between the memory and storage subsystems, but with the MCS Carbon1 architecture, all I/O requests and completions are confined within the NUMA architecture, and can operate at the speed of the memory bus. Leveraging the memory subsystem of the processor gives MCS access to a scalable architecture that avoids the software/hardware I/O stack, and does not suffer degradation or diminishing returns as it scales.

In contrast, the multi-purpose PCIe bus wasn't specifically designed for storage traffic. I/O incurs latency as it traverses the gap between the memory subsystem and the I/O Hub. The I/O Hub is restricted to one processor that handles all PCIe traffic, creating device contention with high performance add-in cards. MCS circumvents the HBA/RAID controller layer for routine data processing, and operations are simply a copy from one address to another within the memory subsystem.

The low-latency MCS kernel driver routes requests through the CPU to the MCS Memory Space. The driver emulates SCSI in Windows and VMware environments, and bypasses SCSI/SATA in Linux. The driver thread handles remapping, generates commands, posts them to the device, monitors the status, and copies data. MCS processes requests asynchronously, and the driver handles SMART logs, thermal data, statistics, and events.

The MCS persistence layer is responsible for early-commit write operations. When the software layer passes data to the persistence layer, the driver can immediately signal operation completion, even if the I/O has not entirely filtered through the FTL (Flash Translation Layer) to the NAND. EverGuard power-loss protection is the key to early-commit. Any data in the MCS Persistence Layer will be persisted to the media, even in the event of host power loss.
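The early-commit idea can be illustrated with a toy model. This is only a conceptual sketch and does not reflect the actual MCS driver or firmware; the class and method names are hypothetical, and the buffer simply stands in for a power-protected persistence layer.

```python
from collections import deque


class EarlyCommitDevice:
    """Toy model: a write is acknowledged once it sits in a protected buffer."""

    def __init__(self):
        # Stand-in for the persistence layer, assumed to be power-loss protected.
        self.persistence_layer = deque()
        self.nand = {}

    def write(self, lba: int, data: bytes) -> str:
        self.persistence_layer.append((lba, data))
        return "complete"  # acknowledged before the data reaches NAND

    def drain(self) -> None:
        """Background path (or power-loss flush): move buffered writes to NAND."""
        while self.persistence_layer:
            lba, data = self.persistence_layer.popleft()
            self.nand[lba] = data

    def read(self, lba: int):
        # The newest buffered copy takes priority over what is already on NAND.
        for buffered_lba, data in reversed(self.persistence_layer):
            if buffered_lba == lba:
                return data
        return self.nand.get(lba)


device = EarlyCommitDevice()
status = device.write(7, b"payload")
print(status, 7 in device.nand)  # acknowledged, but not yet on NAND
device.drain()
print(device.nand[7])
```

The point of the model is the ordering: completion is signaled before the flash program finishes, which is only safe because the buffered data is guaranteed to be flushed on power loss.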

The internal block diagram of the ULLtraDIMM reveals device-based internal parallelism as well. The two flash controllers respond to data from the MCS controller, and handle each pool of NAND in an aggregated fashion.

Diablo and SanDisk aren't content to rest on their laurels. The MCS architecture is media agnostic and can work with future non-volatile memories such as 3D NAND, resistive RAM, magnetoresistive RAM, and phase change memory. The current implementation communicates via SCSI in Windows and VMware, but the modular design can allow other protocols, such as NVMe, in the future.

Millions of DRAM products have shipped into the datacenter, but not one of them has actually stored one bit of data persistently. Volatility is a major challenge, and Diablo and SanDisk are developing memory-mapped units that emulate main memory to provide a large in-memory processing pool. DRAM has a much higher cost structure than flash, not to mention power consumption, so the prospect of utilizing NAND with DRAM-like response times is alluring. In-memory processing is systematically backed up or checkpointed, but a persistent memory layer lifts that requirement. DRAM is often used to accelerate I/O, and by alleviating that burden, it can be dedicated back to application processing.

The new Carbon2 architecture is currently in development. Carbon2 features DDR4 support, lower latency from a souped-up processing engine, and NanoCommit Technology. NanoCommit is an API that allows DRAM persistence at nanosecond latencies to NAND flash, and enables mirroring of DRAM to persistent storage.

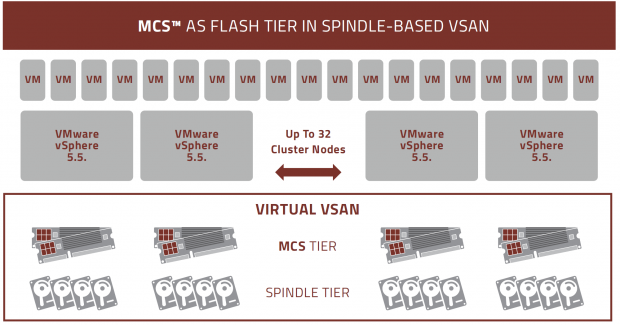

VSAN

MCS doesn't require any operating system or application modifications, but Diablo is working with several ISVs, including Percona and VMware, to enhance interoperability. Partnerships with these leading vendors will provide enhanced performance in key applications, such as VSAN and database environments.

VSAN features both flash and spindle tiers for data storage. Each disk group has one flash drive, and up to seven spindles. A key advantage of the ULLtraDIMM is that multiple devices appear as one flash volume to the system. This allows for easy scaling of capacity and endurance in the flash tier; administrators can simply add more ULLtraDIMMs to address application performance, endurance, and capacity requirements. This desirable attribute avoids adding more drives to the spindle tier. Diablo provides in-depth VSAN performance comparisons against PCIe SSDs in their VSAN whitepaper.

Guardian Technology Platform

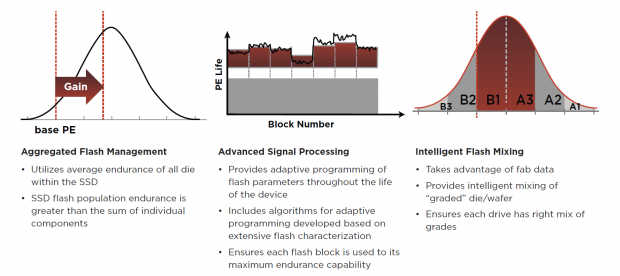

SanDisk's award-winning Guardian Technology Platform is a proprietary approach that manages the physics of the NAND, and not just the errors. It consists of three integral components.

- FlashGuard

SanDisk subjects all NAND to an extensive characterization process before assembly. Aggregated flash management ensures there is an even distribution of different classes of NAND to benefit the whole. Once assembled, the Guardian Technology Platform takes over, and NAND settings are monitored and adjusted constantly. Retention and wear metrics are typically programmed during NAND processing, and never altered. With Guardian, adaptive programming tailors the NAND settings at the base level by dynamically adjusting flash parameters over the course of the SSD's life. SanDisk credits this approach with a substantial increase in NAND endurance.

As NAND geometries shrink, endurance also shrinks, and data errors multiply. SMART utilizes adaptive ASP (Advanced Signal Processing) in concert with advanced two-level BCH ECC algorithms to combat data errors. Typical SSDs can only achieve wear leveling on each NAND package, but Aggregated Flash Processing treats all of the flash elements as a whole, and provides global cross-die wear leveling. The wear leveling algorithms also monitor the health of individual flash cells, and dynamically focus the heaviest workloads on the healthiest flash. The result is impressive endurance metrics. The ULLtraDIMM provides ten DWPD of random write endurance, and 25 DWPD for sequential write workloads.

- DataGuard

DataGuard provides full data path protection, and the F.R.A.M.E. (Flexible Redundant Array of Memory Elements) functionality. F.R.A.M.E. is a cross-die data redundancy feature, similar to parity, which allows for data reconstruction in the event of a catastrophic event, such as a flash page or block failure (some incarnations can even recover from an entire die failure). This internal XOR provides embedded fault tolerance, and an unrecoverable bit error rate of one error per 10^17 bits read.

- EverGuard

EverGuard is a third generation host power-loss protection design that protects against unexpected power interruptions. An array of discrete PolyTantalum capacitors provides power to flush all data in transit to the NAND during power loss. These Tantalum capacitors are rated for high temperature environments, and do not experience degradation over time. There are additional architectural features, such as advanced controller power management and lower pages reserved for power-down writes, that reduce the power hold up time requirements. The entire MCS chipset falls under the EverGuard umbrella, providing power backup for early-commit operations.
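The cross-die XOR redundancy described under DataGuard works on the same principle as parity in a RAID array. The sketch below shows generic XOR parity reconstruction only; it is not SanDisk's F.R.A.M.E. implementation, and the page contents and die layout are made up for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte strings together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical pages striped across three dies, plus one parity page.
data_pages = [b"die0data", b"die1data", b"die2data"]
parity_page = xor_blocks(data_pages)

# Simulate losing the page on die 1, then rebuild it from the survivors.
survivors = [data_pages[0], data_pages[2], parity_page]
rebuilt_page = xor_blocks(survivors)

print(rebuilt_page == data_pages[1])
```

Because XOR is its own inverse, any single missing page in the stripe can be recovered from the remaining pages and the parity page.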

Test System and Methodology

We designed our approach to storage testing to target long-term performance with a high level of granularity. Many testing methods record peak and average measurements during the test period. These average values give a basic understanding of performance, but fall short in providing the clearest view possible of I/O QoS (Quality of Service).

While under load, all storage solutions deliver variable levels of performance. 'Average' results do little to indicate performance variability experienced during actual deployment. The degree of variability is especially pertinent, as many applications can hang or lag as they wait for I/O requests to complete. While this fluctuation is normal, the degree of variability is what separates enterprise storage solutions from typical client-side hardware.

Providing ongoing measurements from our workloads with one-second reporting intervals illustrates product differentiation in relation to I/O QoS. Scatter charts give readers a basic understanding of I/O latency distribution, without directly observing numerous graphs. This testing methodology illustrates performance variability, and includes average measurements during the measurement window.

IOPS data that ignores latency is useless. Consistent latency is the goal of every storage solution, and measurements such as Maximum Latency only illuminate the single longest I/O received during testing. This can be misleading, as a single 'outlying I/O' can skew the view of an otherwise superb solution. Standard Deviation measurements consider latency distribution, but do not always effectively illustrate I/O distribution with enough granularity to provide a clear picture of system performance. We utilize high-granularity I/O latency charts to illuminate performance during our test runs.
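To make the point about summary statistics concrete, the sketch below computes the mean, standard deviation, 99th percentile, and maximum for a hypothetical set of latency samples containing a single outlier. The sample values are invented for illustration and are not taken from our test results; the percentile uses a simple nearest-rank method.

```python
import statistics


def summarize_latency(latencies_ms):
    """Return common summary statistics for a list of latency samples."""
    ordered = sorted(latencies_ms)
    # Nearest-rank 99th percentile: ceil(0.99 * n), computed with integers.
    rank = (99 * len(ordered) + 99) // 100
    return {
        "mean": statistics.fmean(ordered),
        "stdev": statistics.pstdev(ordered),
        "p99": ordered[rank - 1],
        "max": ordered[-1],
    }


# Hypothetical run: 999 fast I/Os and one slow outlier.
samples = [0.1] * 999 + [50.0]
summary = summarize_latency(samples)
for name, value in summary.items():
    print(f"{name}: {value:.4f} ms")
```

Here the maximum is 500 times the typical latency even though 99.9% of the I/Os were identical, which is why a single maximum value, or a standard deviation inflated by one sample, says little on its own about consistency.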

Our testing regimen follows SNIA principles to ensure consistent, repeatable testing, and utilizes multi-threaded workloads found in typical production environments. We tested two SanDisk ULLtraDIMMs, but it is important to note that typical deployments will consist of larger arrays, typically between four and eight devices. The MCS Management Console provides incredible overprovisioning granularity. Users can select from 10% to 90% of additional overprovisioning, and for this evaluation, we test single and striped RAID configurations with 100% (full-span) utilization, and 70% capacity utilization (30% additional overprovisioning).

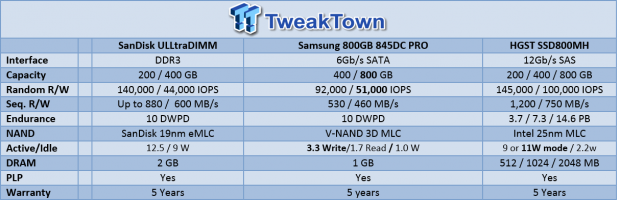

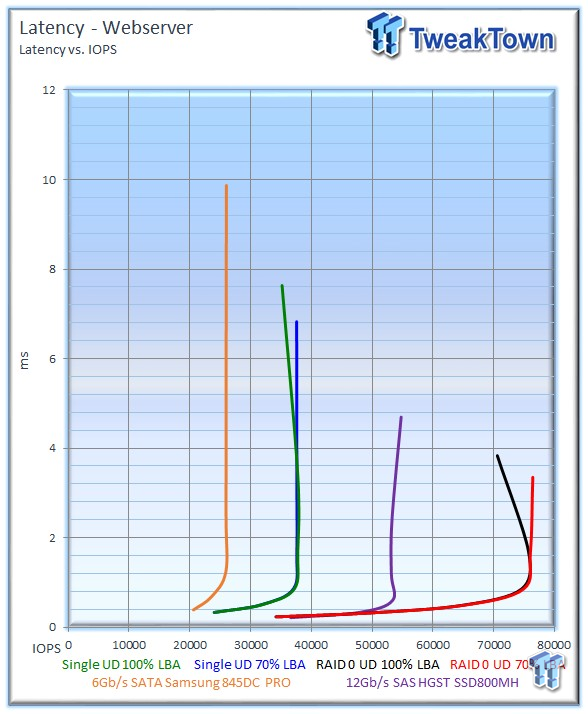

We didn't pull any punches with the competing devices. The 6Gb/s SATA Samsung 845DC PRO and the 12Gb/s SAS HGST SSD800MH both deliver leading performance in their respective categories. Both competing drives were attached via a 12Gb/s LSI 9300-8i HBA during testing. We will circle back with PCIe competitors when we receive additional ULLtraDIMM samples.

The first page of results provides the 'key' to understanding and interpreting our test methodology.

Benchmarks - 4k Random Read/Write

4k Random Read/Write

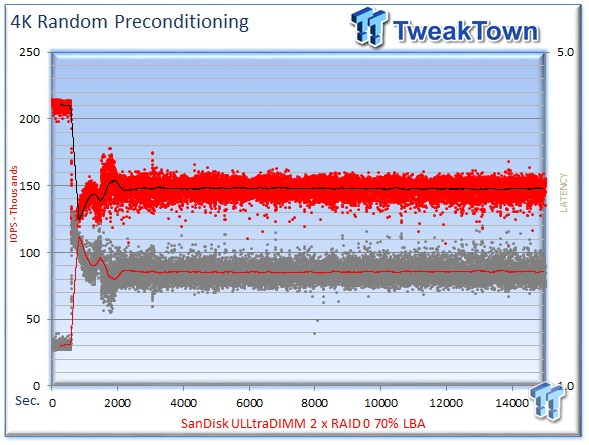

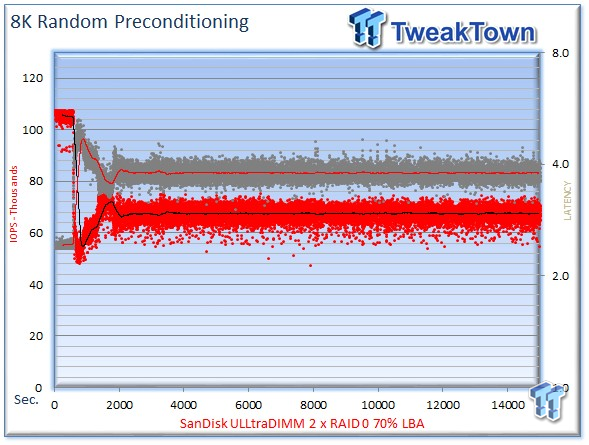

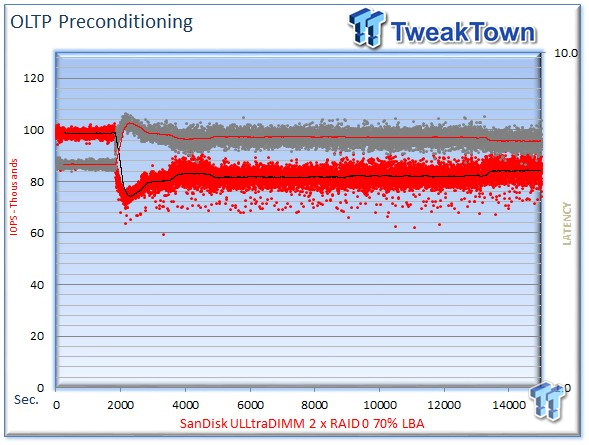

We precondition the 400GB SanDisk ULLtraDIMM DDR3 SSD for 15,000 seconds, or just over four hours, receiving performance reports every second. We plot this data to illustrate the drive's descent into steady state.

This dual-axis chart consists of 30,000 data points, with the IOPS on the left, and the latency on the right. The red dots signify IOPS, and the grey dots are latency measurements during the test. We place latency data in a logarithmic scale to bring it into comparison range. The lines through the data scatter are the average during the test. This type of testing presents standard deviation and maximum/minimum I/O in a visual manner.

Note that the IOPS and Latency figures are nearly mirror images of each other. This illustrates that high-granularity testing gives our readers a good feel for latency distribution by viewing IOPS at one-second intervals. Keep this in mind when viewing our test results below. This downward slope of performance only happens during the first few hours of use, and we present precondition results only to confirm steady state convergence.
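The mirror-image relationship between IOPS and latency is what Little's Law predicts: with a fixed number of outstanding I/Os, average latency is the outstanding count divided by throughput. The sketch below uses hypothetical numbers, not figures from our charts, and the function name is ours.

```python
def average_latency_ms(outstanding_io: int, iops: float) -> float:
    """Little's Law: average latency = outstanding I/Os / throughput."""
    return outstanding_io / iops * 1000  # seconds -> milliseconds


# Hypothetical: the same queue depth at two different throughput levels.
for iops in (80_000, 40_000):
    latency = average_latency_ms(outstanding_io=8, iops=iops)
    print(f"8 OIO at {iops:,} IOPS -> {latency:.2f} ms average latency")
```

Halving the IOPS at a constant OIO count doubles the average latency, so on a logarithmic latency axis the two traces move in opposite directions by the same proportion.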

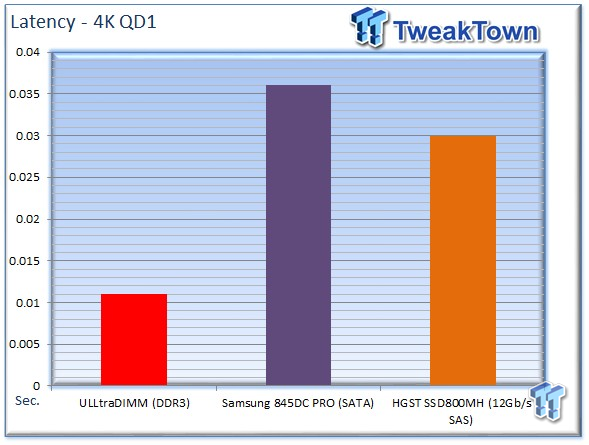

One of the most impressive benefits of the ULLtraDIMM architecture is reduced latency due to the efficiency of the memory bus. Our normal test suite is multi-threaded, and begins with an OIO (Outstanding I/O) count of eight. As with any normal storage device, once subjected to sustained multi-threaded workloads, the latency will increase. This prevents us from observing the base latency of the ULLtraDIMM. To measure the lowest attainable latency, we conducted a latency test utilizing an industry-standard measurement of 4k random transfers with a single thread at a queue depth of one. Both competing drives, the SATA 6Gb/s Samsung 845DC PRO and the 12Gb/s SAS HGST SSD800MH, were tested while attached via an LSI 9300-8i HBA.

A single ULLtraDIMM posted a much lower latency than the competing devices, at 0.011ms. The SATA Samsung 845DC PRO had the highest latency measurement at 0.036ms, and the 12Gb/s SAS HGST SSD800MH weighed in at 0.03ms. The ULLtraDIMM easily blew away the competition, and SanDisk advises they routinely measure latency as low as 0.005ms. There is a distribution of I/O requests that lands below the average results presented during the measurement window. Unfortunately, many test utilities are not well equipped to measure the ULLtraDIMM's extremely low latency. Most utilities simply merge the lowest latency measurements into 00-20ms or 00-50ms buckets. SanDisk specs according to the lowest achievable latency, and IBM has also recorded results as low as 0.005ms with the eXFlash solution (rebranded ULLtraDIMM).

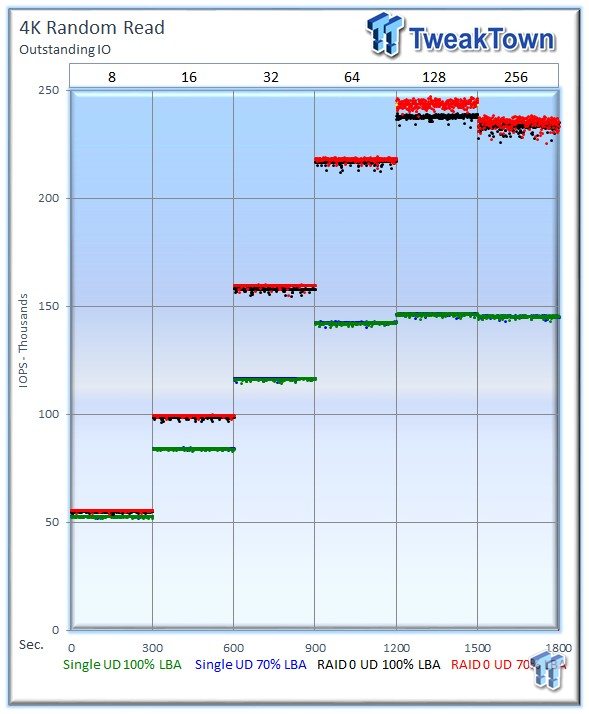

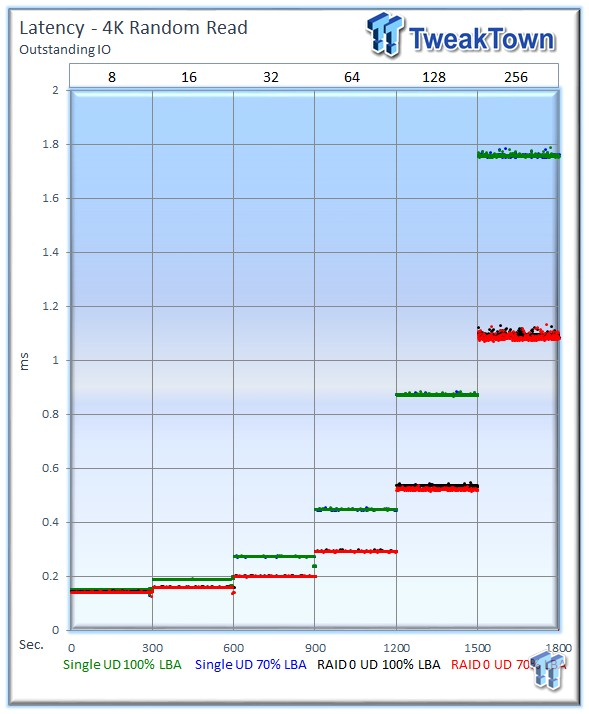

Each level tested includes 300 data points (five minutes of one second reports) to illustrate performance variability. The line for each OIO (Outstanding I/O) count represents the average speed reported during the five-minute interval. 4k random speed measurements are an important metric when comparing drive performance, as the hardest type of file access for any storage solution to master is small-file random. 4k random performance is one of the most sought-after performance specifications, and it's a heavily marketed figure.

With two 400GB ULLtraDIMMs in a RAID 0 configuration at 70% utilization, we averaged 235,254 IOPS at 256 OIO. We also attained similar results at 100% utilization with an average of 233,263 IOPS. With a single ULLtraDIMM at 100% utilization, we received 145,386 IOPS, and with extra overprovisioning, we attained 145,442 IOPS. The benefits of extra overprovisioning typically kick in during random write workloads, as examined below.
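For readers who think in bandwidth rather than IOPS, the random read results can be converted to throughput. The sketch below assumes a 4k transfer is 4,096 bytes and reports decimal megabytes per second; the function name is ours.

```python
def throughput_mb_per_s(iops: float, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed block size into decimal MB/s."""
    return iops * block_bytes / 1_000_000


results = {
    "two ULLtraDIMMs, 70% utilization": 235_254,
    "one ULLtraDIMM, 100% utilization": 145_386,
}
for label, iops in results.items():
    print(f"{label}: {throughput_mb_per_s(iops, 4096):.1f} MB/s")
```

Under those assumptions, the two-device array moves roughly 964 MB/s of 4k random reads, and the single device roughly 596 MB/s.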

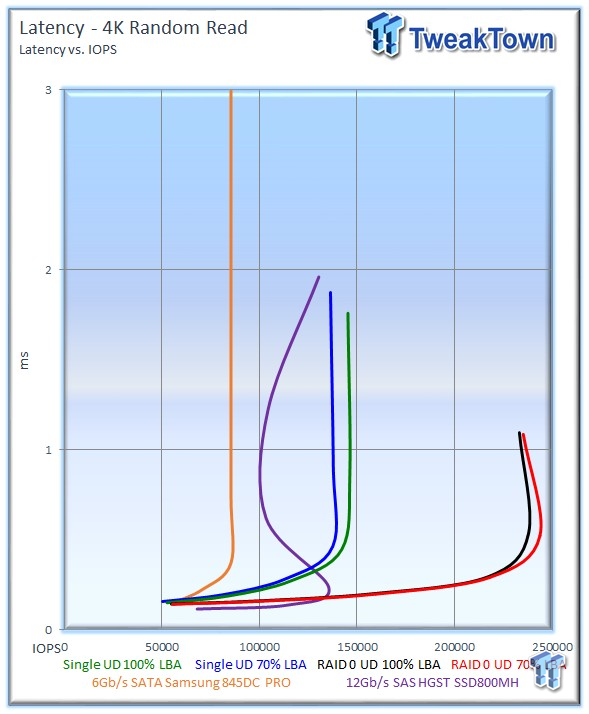

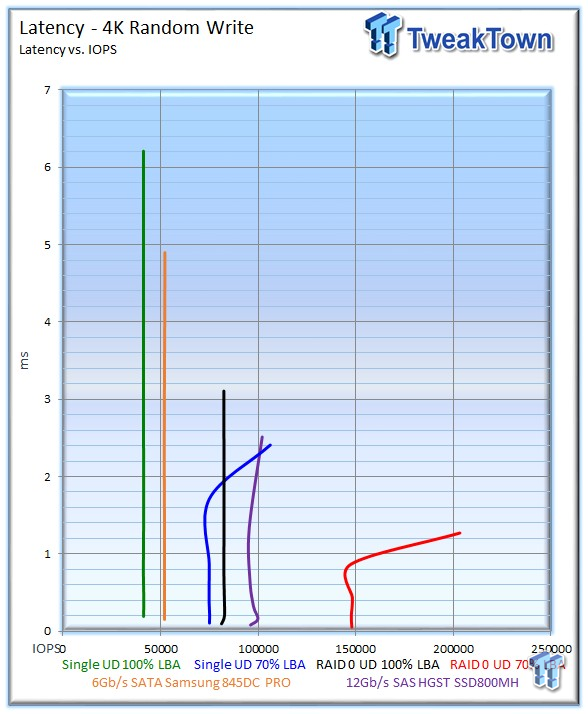

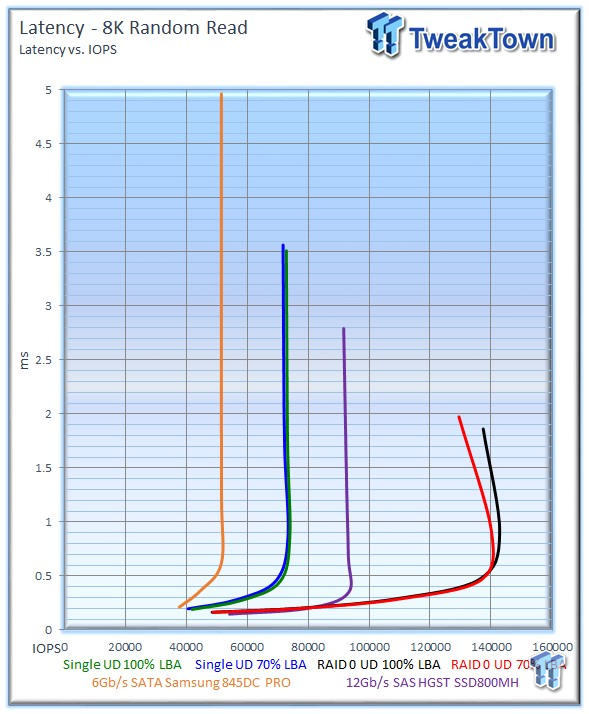

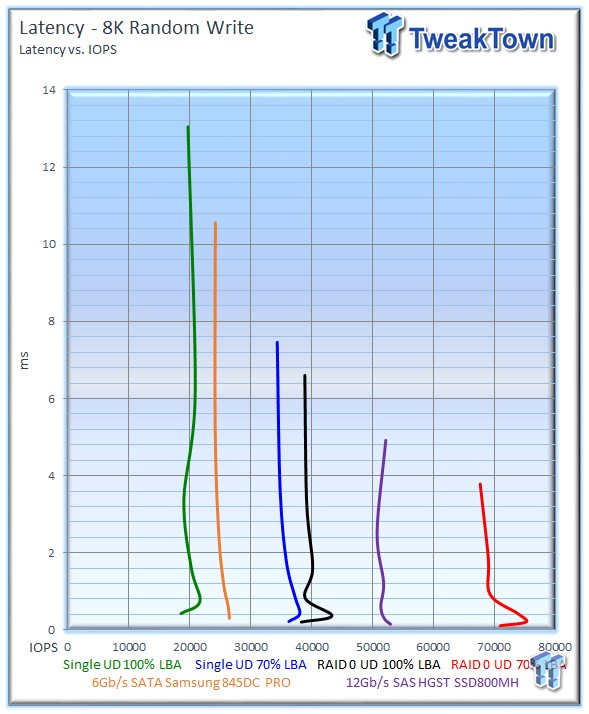

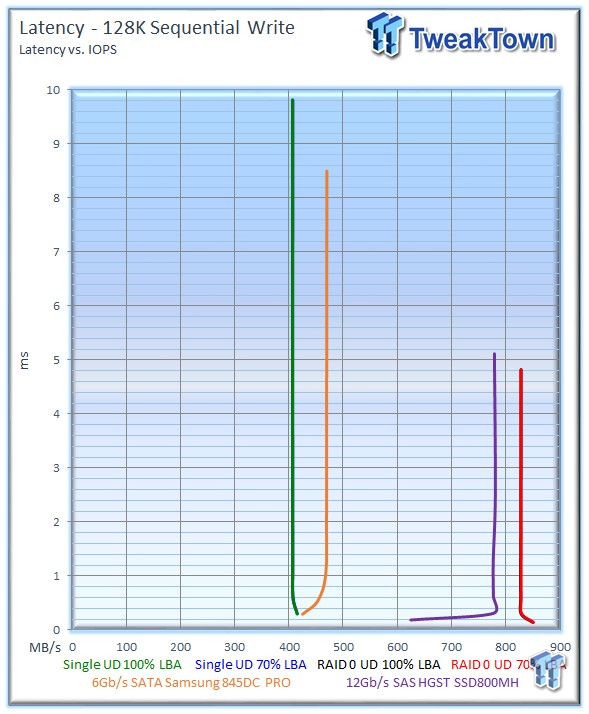

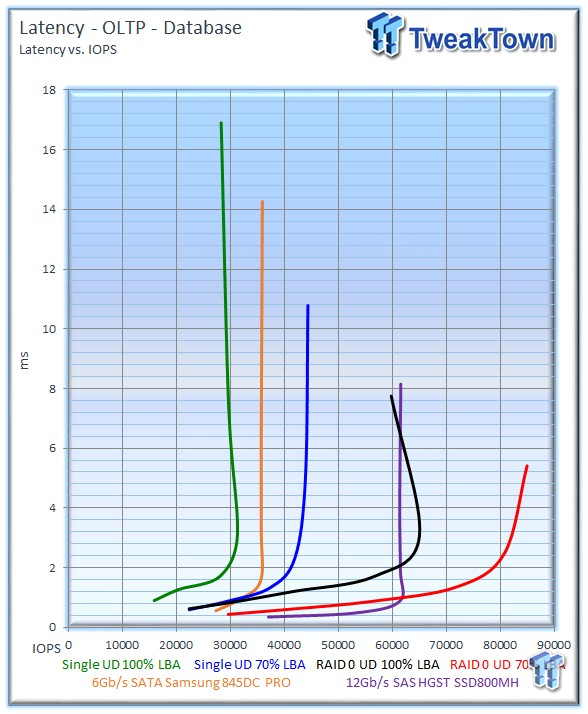

Our Latency vs IOPS charts compare the amount of performance attained from each solution at specific latency measurements. Many applications have specific latency requirements. These charts present relevant metrics in an easy-to-read manner for those familiar with specific application requirements. The results that are lowest, and furthest to the right, exhibit the most desirable latency characteristics.

We include data from a 6Gb/s SATA Samsung 845DC PRO and 12Gb/s SAS HGST SSD800MH in our Latency vs IOPS test. The results highlight the enhanced performance-to-latency ratio attainable via the memory bus with ULLtraDIMM SSDs. One ULLtraDIMM provides increased performance over the competing SSDs, and two ULLtraDIMMs in RAID 0 deliver astounding performance of roughly 240,000 IOPS at the .05ms threshold.

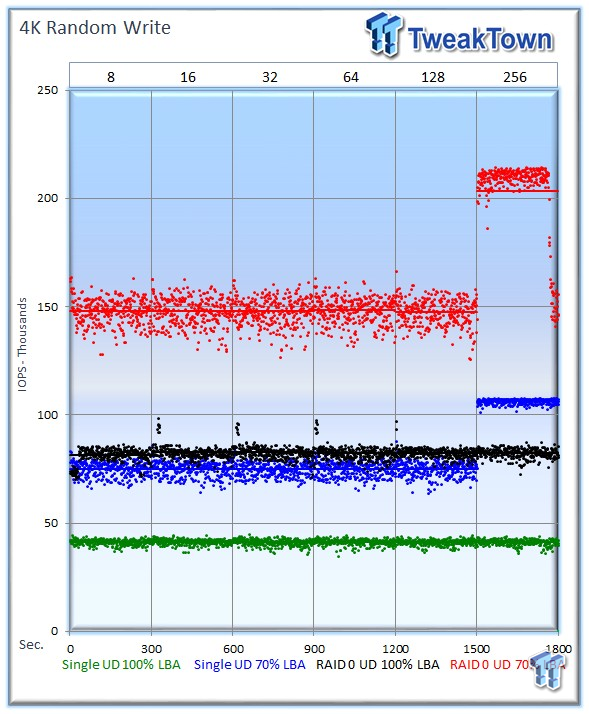

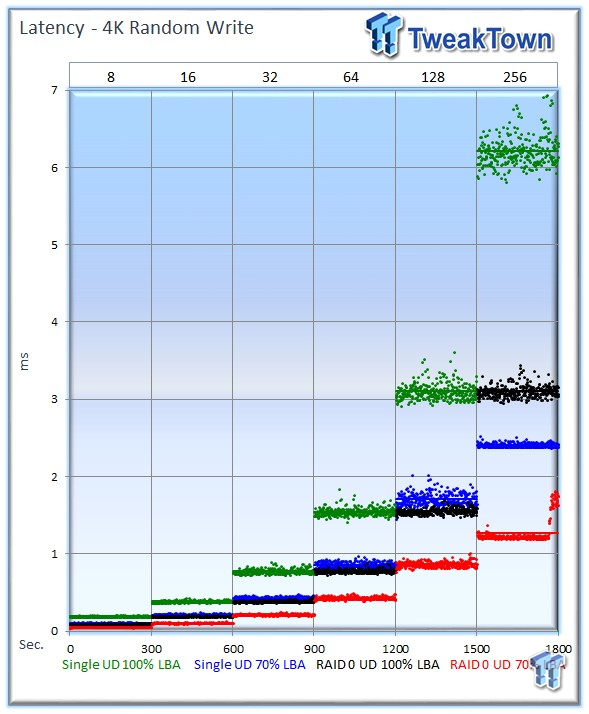

The benefits of extra overprovisioning become apparent in random write workloads, and the two-drive ULLtraDIMM array tops out at 256 OIO with 203,296 IOPS. At 100% utilization, the two-drive array scores 82,451 IOPS. A single ULLtraDIMM at 100% utilization averages 41,289 IOPS, and with 70% utilization, that is boosted up to 106,229 IOPS.

The dual ULLtraDIMMs with 70% utilization deliver extreme performance of 165,000 IOPS at 1ms. The 12Gb/s SAS HGST is incredibly competitive at this workload, but falls behind the RAID 0 ULLtraDIMM configuration.

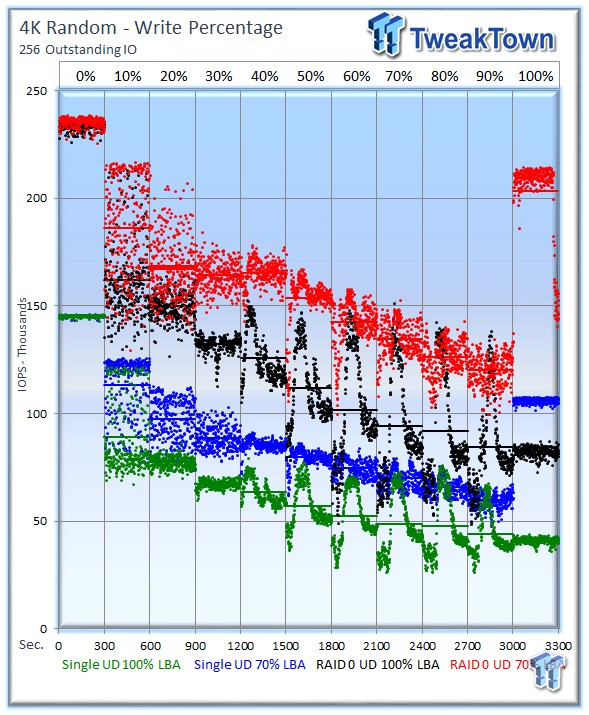

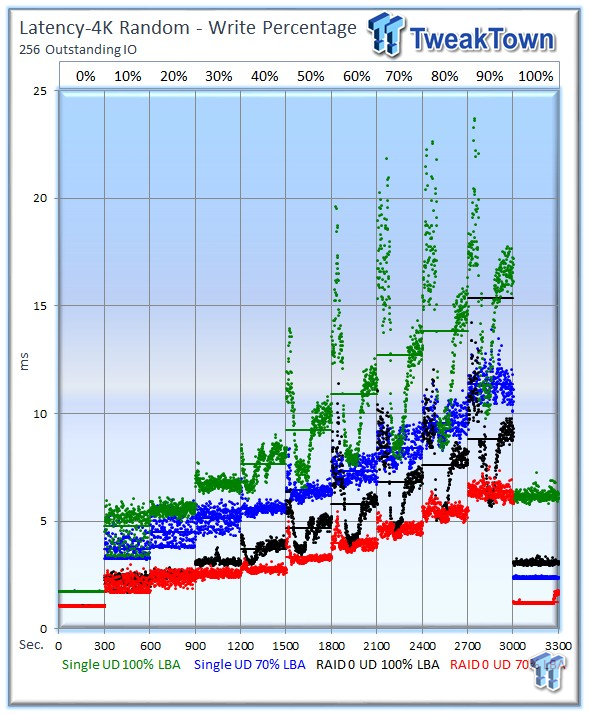

Our write percentage testing illustrates the varying performance of each solution with mixed workloads. The 100% column to the right is a pure 4k write workload, and 0% represents a pure 4k read workload. Mixed I/O is a constant reality in VDI, and other intensive applications, resulting in the I/O blender effect. The ULLtraDIMM configurations provide impressive mixed workload performance, and recover rapidly from extended workloads. They resist our best efforts to keep them in a steady state during each extended period of the test (from 40-90% mix with 100% utilization), demonstrating their resilience to heavy workloads.

Latency scales within expectations during the mixed workload testing.

Benchmarks - 8k Random Read/Write

8k Random Read/Write

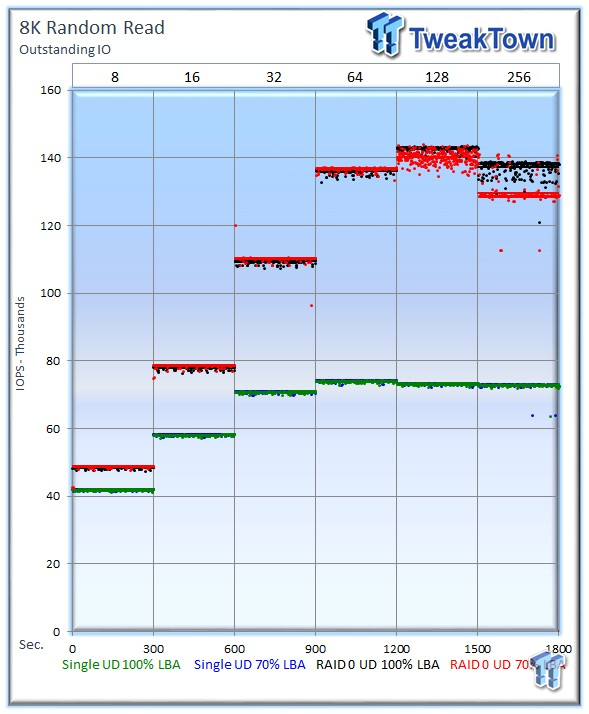

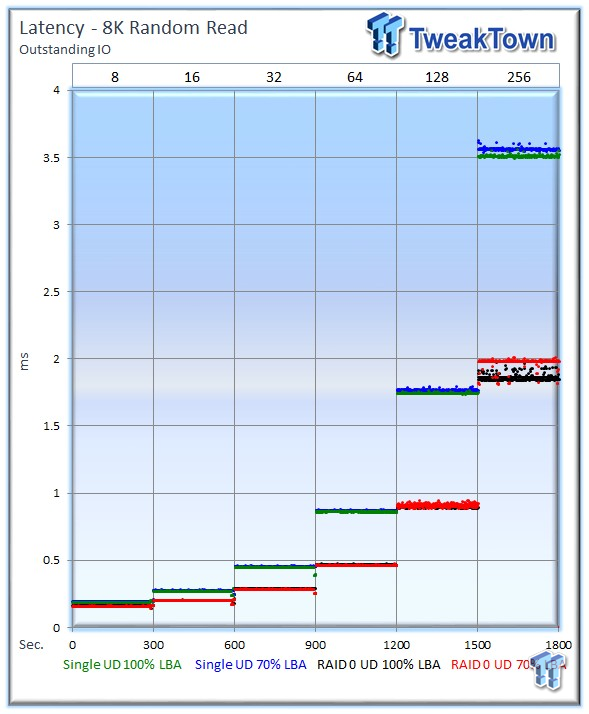

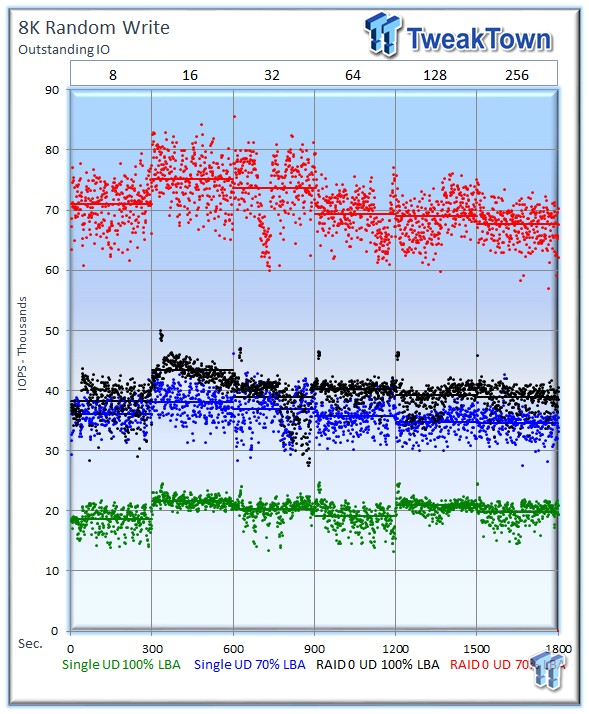

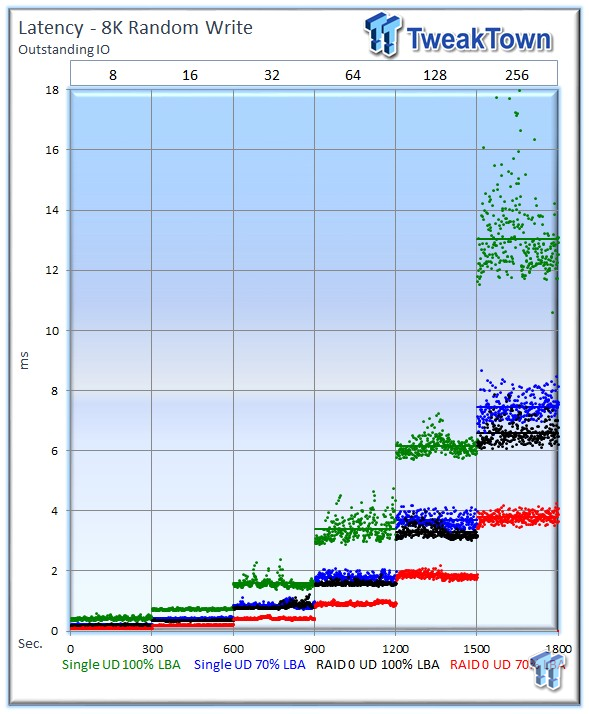

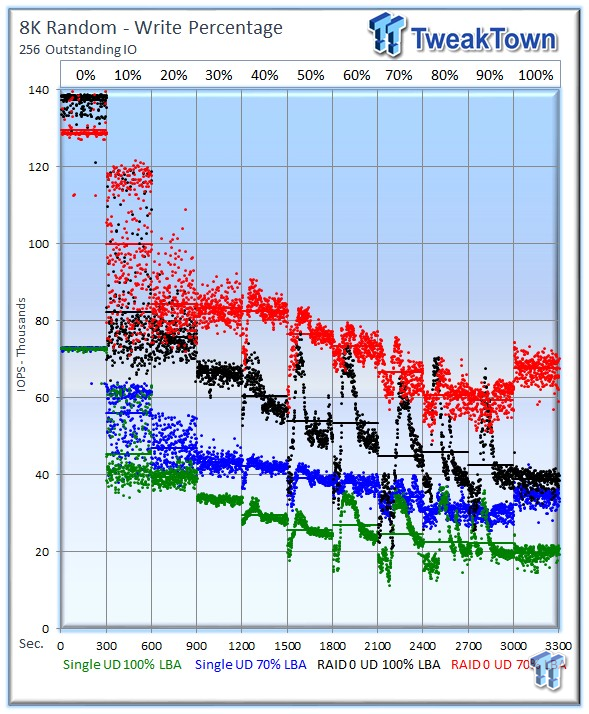

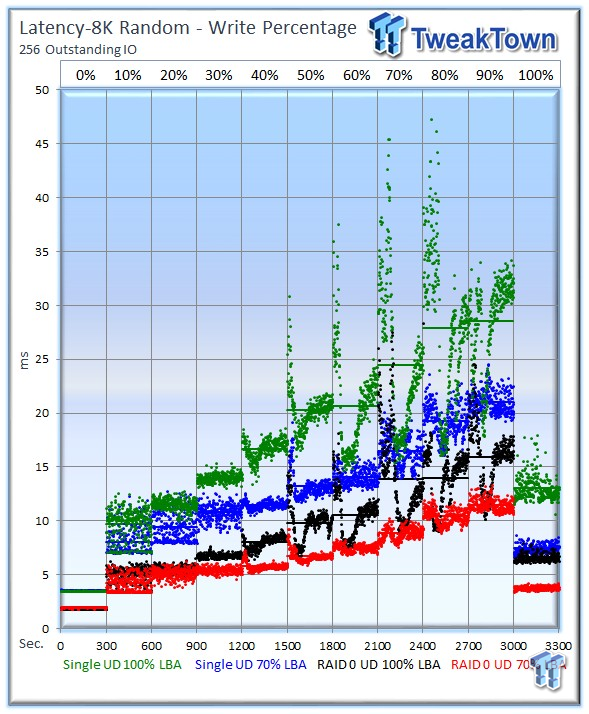

Many server workloads rely heavily upon 8k performance, and we include this as a standard with each evaluation. Many of our server workloads also test 8k performance with various mixed read/write distributions.

Two ULLtraDIMMs at 70% utilization deliver 129,474 IOPS, and with 100% utilization, we receive an average of 137,415 IOPS. Both 100% and 70% utilizations of a single ULLtraDIMM weighed in with 73,000 IOPS.

Two ULLtraDIMMs in a striped RAID configuration deliver a resounding win in this test.

The benefits of extra overprovisioning are very clear. One ULLtraDIMM with 70% utilization nearly reaches the speed of two ULLtraDIMMs at 100% utilization. A single ULLtraDIMM with 100% utilization reaches 19,743 IOPS, and with 70% utilization, it weighs in with 34,547 IOPS. With two ULLtraDIMMs in RAID at 70% utilization, we observe 67,655 IOPS at 256 OIO, and higher speeds with lower loads. With 100% utilization, they score 38,897 IOPS at 256 OIO.

Once again, two ULLtraDIMMs dominate with an outstanding Latency-to-IOPS measurement.

The ULLtraDIMMs scale well in mixed workloads, and latency falls within expected scaling.

Benchmarks - 128k Sequential Read/Write

128k Sequential Read/Write

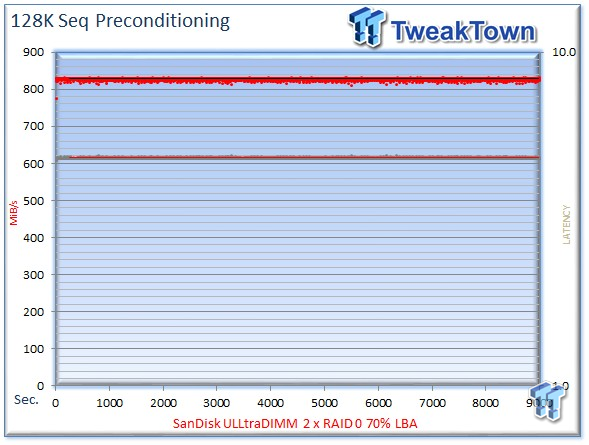

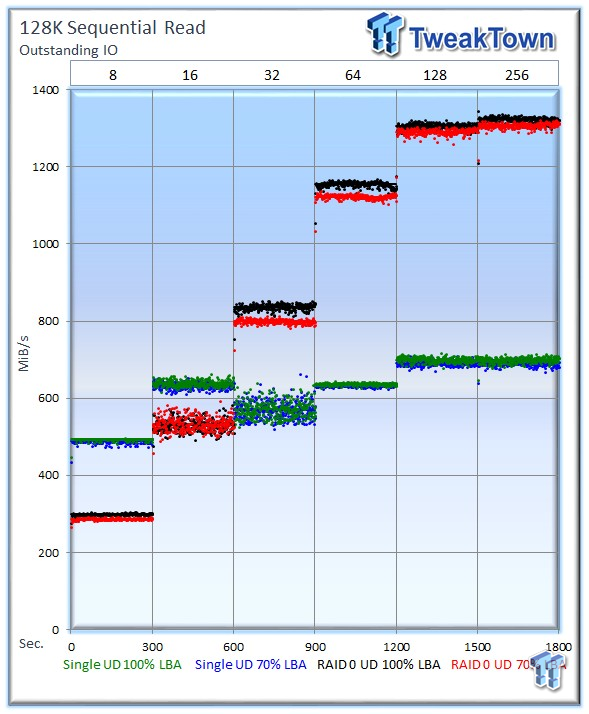

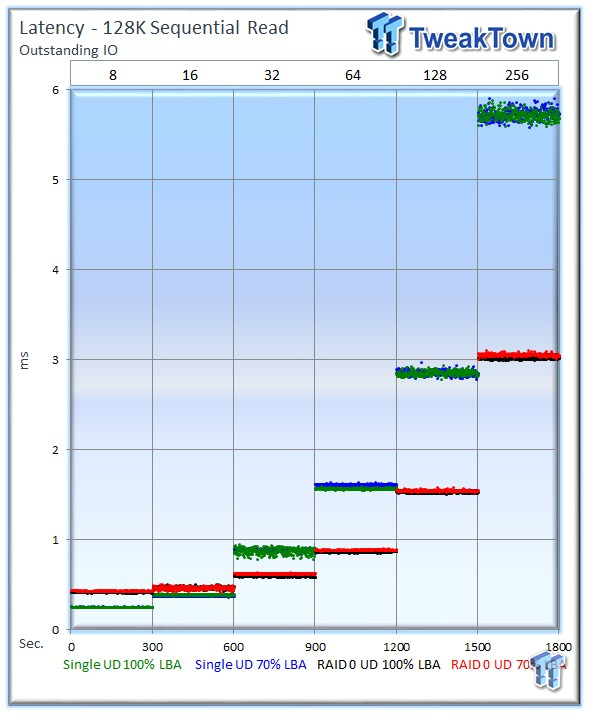

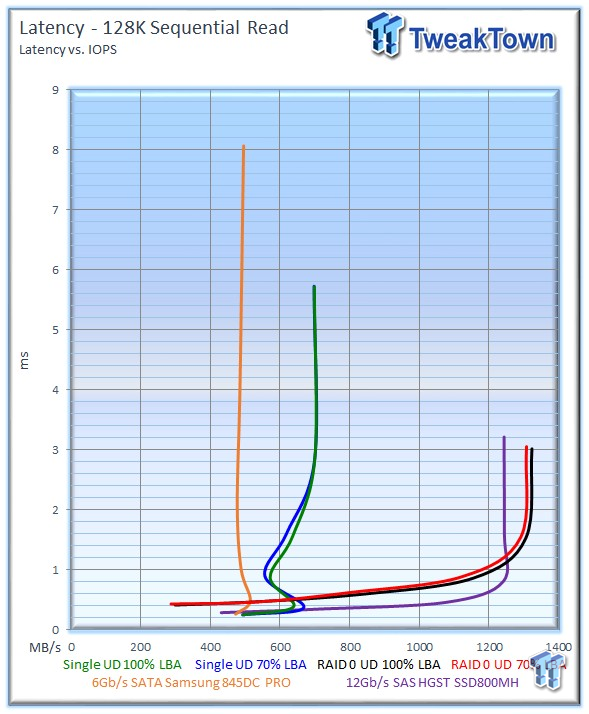

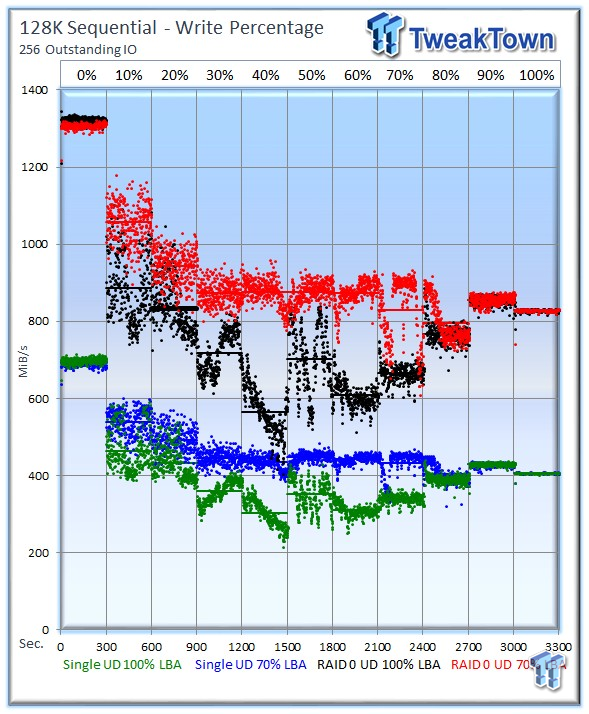

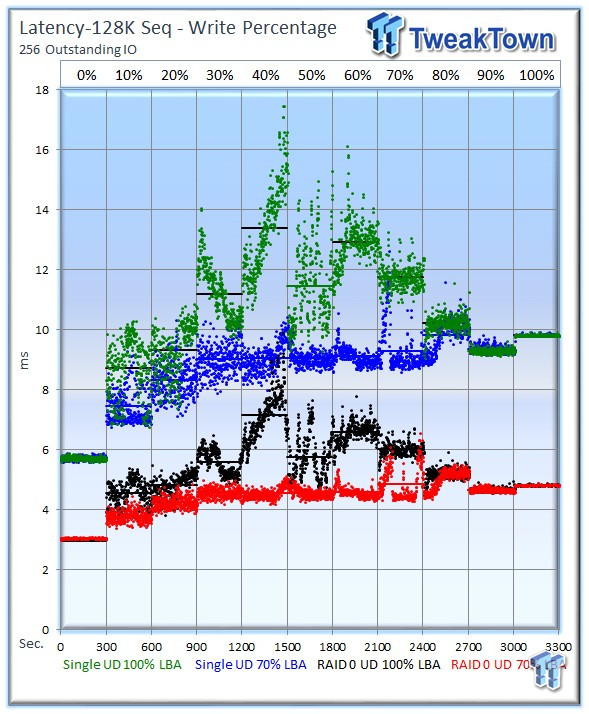

128k sequential speed reflects the maximum sequential throughput of the SSD, and is indicative of performance in OLAP, batch processing, streaming, content delivery applications, and backup scenarios.

Our multi-threaded sequential testing is very demanding; two ULLtraDIMMs with 100% utilization provide 1,323 MB/s, and at 70% utilization, they deliver 1,307 MB/s. A single ULLtraDIMM with 100% utilization delivers 698 MB/s, and with 70% utilization, it averages 691 MB/s.

A single 12Gb/s SSD800MH nearly reaches the sequential speed of two ULLtraDIMMs, which also provide excellent latency and performance.

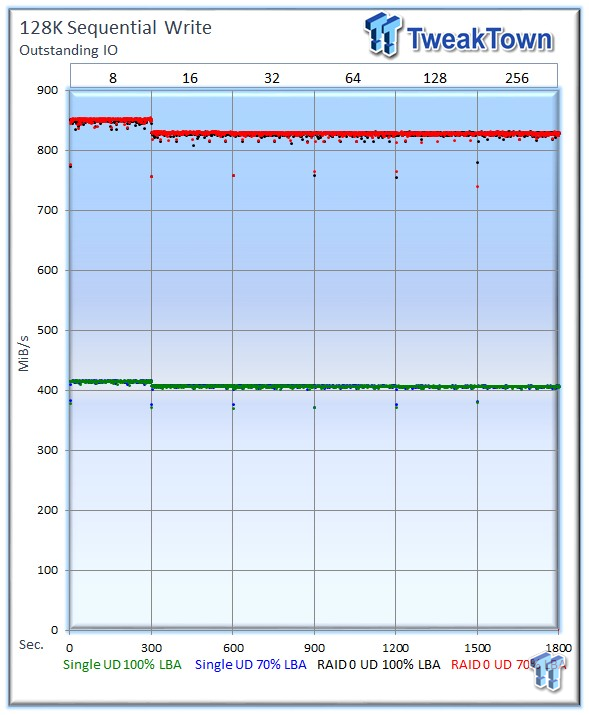

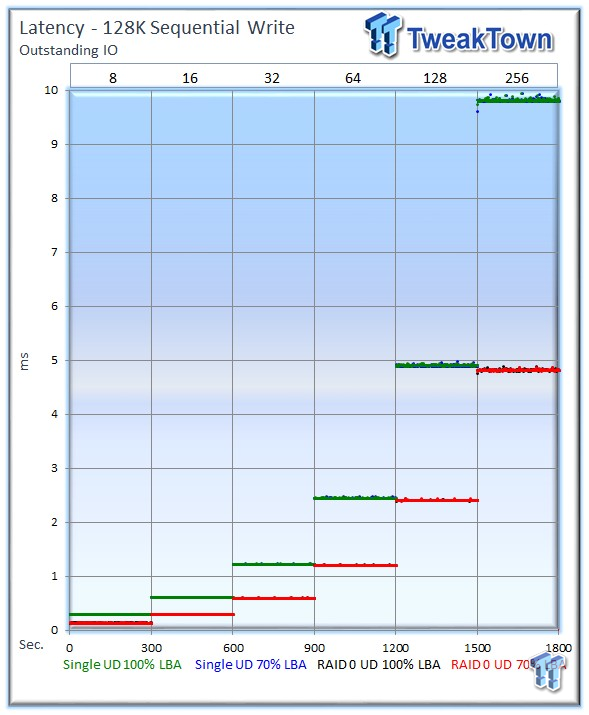

Sequential write performance is important in tasks such as caching, replication, HPC, content delivery applications, and database logging. Two ULLtraDIMMs in RAID with 70% and 100% utilization average 828 MB/s. A single ULLtraDIMM averages 407 MB/s with both usage scenarios.

The dual ULLtraDIMMs take the win in this test. The ULLtraDIMMs clearly benefit from the enhanced parallelism when deployed in RAID, and don't require associated HBA/RAID controllers and cabling.

In mixed sequential workloads, the ULLtraDIMMs scale particularly well, and the benefits of extra overprovisioning provide a clear performance benefit.

Database/OLTP and Webserver

Database/OLTP

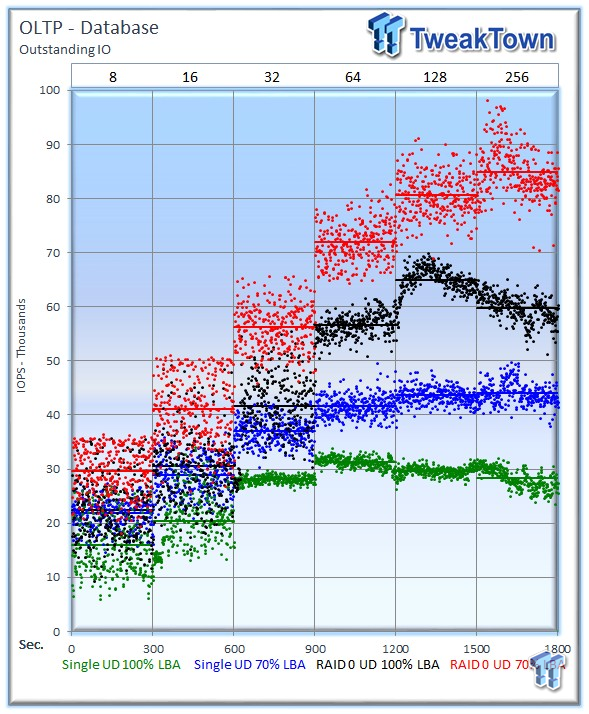

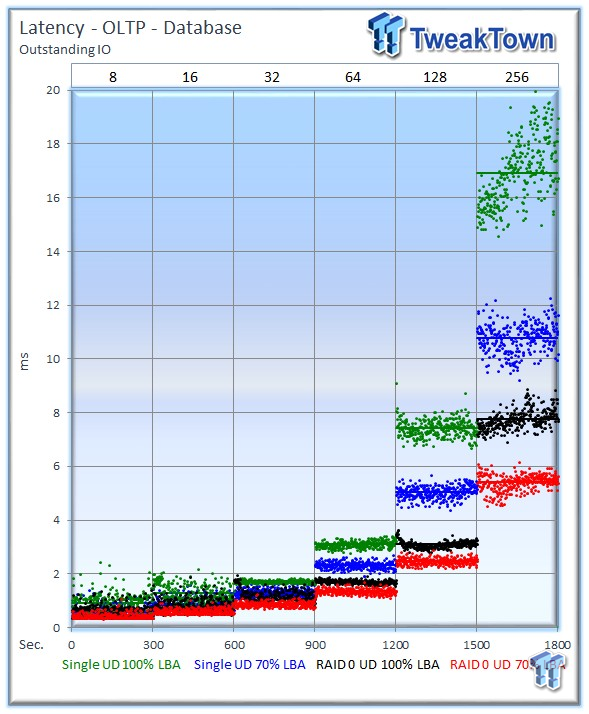

This test consists of Database and On-Line Transaction Processing (OLTP) workloads. OLTP is the processing of transactions such as credit cards and high frequency trading in the financial sector. Databases are the bread and butter of many enterprise deployments. These demanding 8k random workloads with a 66 percent read and 33 percent write distribution bring even the best solutions down to earth.

The clear linear-scaling picture continues in the OLTP test. The single ULLtraDIMM at 100% delivers 28,307 IOPS at 256 OIO, and averages 44,025 at 70%. With two ULLtraDIMMs at 100%, the average is 59,785 IOPS, and at 70%, they average 84,394 IOPS.
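One way to quantify the scaling is to divide the array result by the single-device result multiplied by the number of devices. The sketch below applies that ratio to the OLTP averages quoted above; the function name is ours, and a value of 1.0 would represent perfectly linear scaling.

```python
def scaling_efficiency(array_iops: float, single_iops: float, devices: int) -> float:
    """Ratio of measured array performance to ideal linear scaling."""
    return array_iops / (single_iops * devices)


oltp_full_span = scaling_efficiency(59_785, 28_307, devices=2)
oltp_70_percent = scaling_efficiency(84_394, 44_025, devices=2)

print(f"100% utilization: {oltp_full_span:.3f}")
print(f"70% utilization:  {oltp_70_percent:.3f}")
```

Both ratios land within a few percent of 1.0. A ratio slightly above 1.0 most likely reflects run-to-run variation between the single-device and array tests rather than genuinely better-than-linear scaling.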

Two ULLtraDIMMs at 2ms nearly reach an astounding 80,000 IOPS.

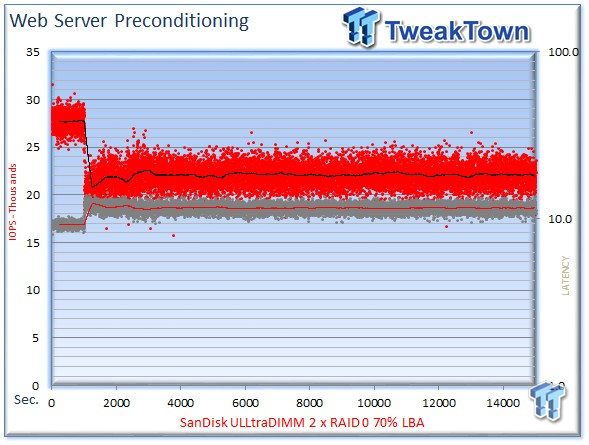

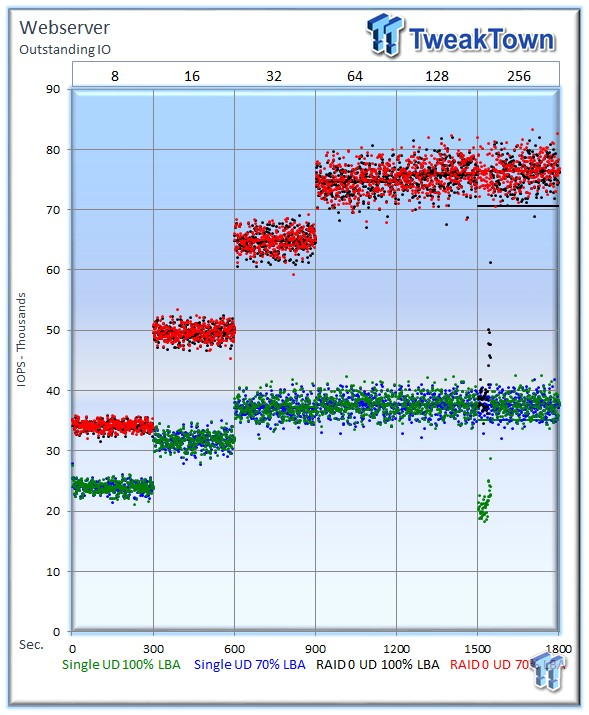

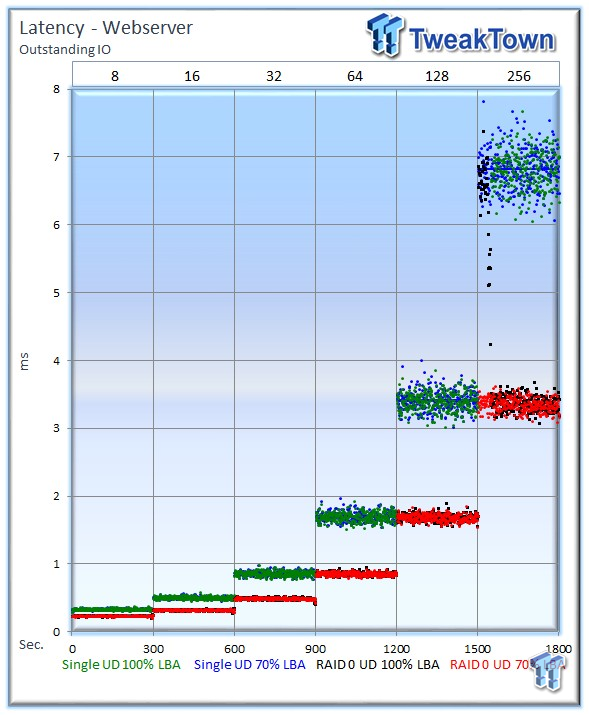

Webserver

The Web Server workload is read-only with a wide range of file sizes. Web servers are responsible for generating content users view over the Internet, much like the very page you are reading. The speed of the underlying storage system has a massive impact on the speed and responsiveness of the server hosting the website.

Two ULLtraDIMMs deliver an average of roughly 76,000 IOPS, and a single ULLtraDIMM delivers 37,000 IOPS.

The ULLtraDIMMs deliver excellent scaling in this test.

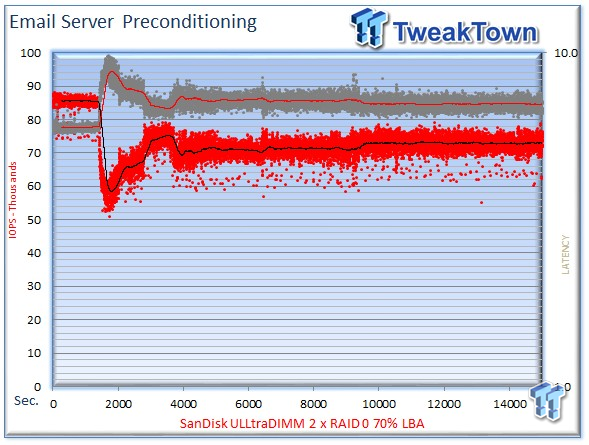

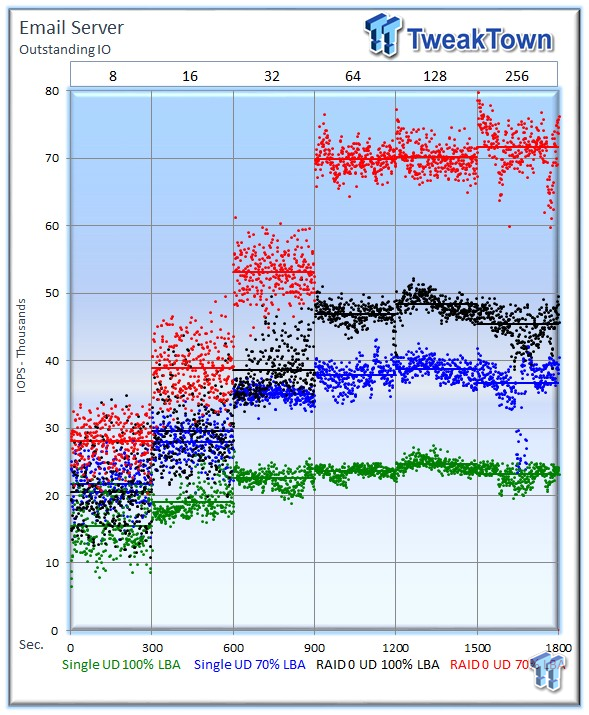

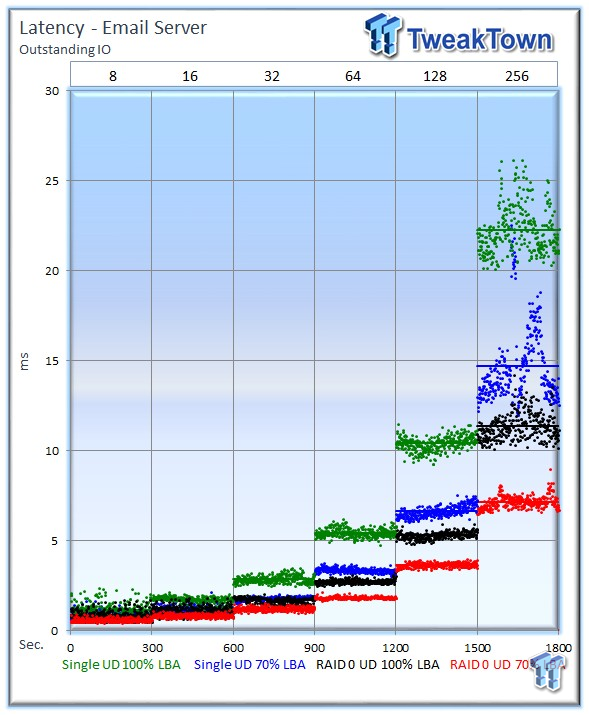

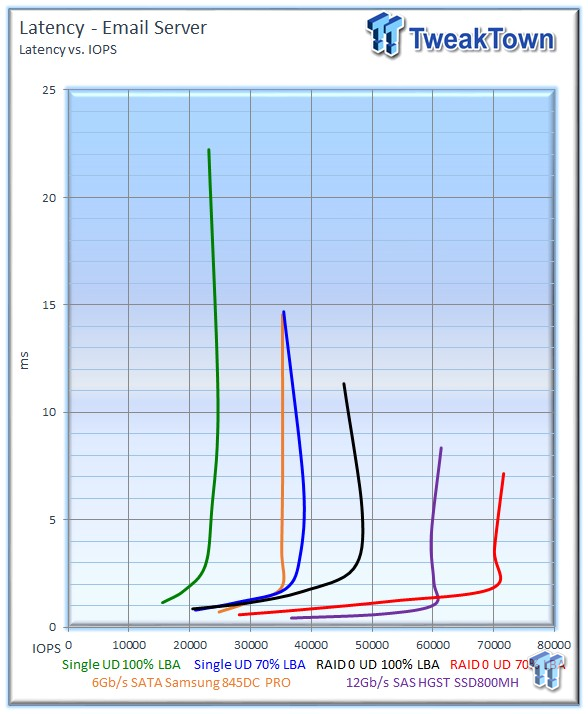

Email Server

The Email Server workload is a demanding 8k test with a 50/50 read/write distribution. This application is indicative of performance in heavy random write workloads.

A single ULLtraDIMM delivers 23,123 IOPS with 100% utilization, and 35,478 IOPS with 70% utilization. Two ULLtraDIMMs provide 45,344 IOPS at 100%, and 71,639 IOPS at 70%.

The latency-vs-IOPS chart reveals solid scaling with the ULLtraDIMMs.

Final Thoughts

The transition to flash exposed the underlying weaknesses of storage protocols and infrastructure. Each step in the path to the NAND merely adds another layer of latency, and MCS avoids the traditional pitfalls of the storage stack by moving storage from remote locations, such as PCIe and SAS/SATA connections, to the memory bus. Confining I/O traffic within the NUMA architecture, where applications and operating systems reside, provides access to a massively parallel bus designed to scale linearly with no performance degradation as administrators add more devices. Marrying the unadulterated connection of the memory bus with the inherent advantages of the Guardian Technology Platform yields impressive performance benefits and ultra-low latency.

ULLtraDIMM deployments will employ upwards of four devices in parallel. Synthetic workloads do not reveal the full application performance of the storage medium, but are a good indicator of the scaling and performance available to applications. Our results indicate near-linear scaling in a multitude of workloads, making it easy to extrapolate performance with multiple devices. We will circle back for TPC-E and TPC-C testing with Benchmark Factory when we obtain additional samples for a comparison with the leading PCIe competitors found in the PCIe SSD category of our IT/Datacenter section. The storage device presents as a standard block device, which permits its use in a multitude of scenarios, including with complementary technologies like caching and tiering applications.
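The kind of extrapolation described above can be sketched as a naive linear projection. This assumes throughput keeps scaling at a constant per-device efficiency, which we have only observed for two devices. The function name `extrapolate_iops` and its `efficiency` parameter are hypothetical, and the output is a projection, not a measured result.

```python
def extrapolate_iops(single_iops: float, devices: int, efficiency: float = 1.0) -> float:
    """Naive linear projection: single-device IOPS x device count x assumed efficiency."""
    if devices < 1:
        raise ValueError("devices must be at least 1")
    if efficiency <= 0:
        raise ValueError("efficiency must be positive")
    return single_iops * devices * efficiency


# Email Server figure for a single ULLtraDIMM at 100% utilization, from this review.
single_email_iops = 23_123

# Efficiency observed with two devices (45,344 IOPS measured); assumed to hold for four.
observed_efficiency = 45_344 / (2 * single_email_iops)

projected = extrapolate_iops(single_email_iops, devices=4, efficiency=observed_efficiency)
print(f"Projected four-device Email Server IOPS: {projected:,.0f}")
```

Real deployments may deviate from this once other system bottlenecks come into play, so treat any such number as a planning estimate only.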

One of the most telling metrics came during our base latency test. The ULLtraDIMM provided one-third the latency of the competing drives, which is desirable for sporadic or bursty workloads. The underlying SSD performance is exposed once sustained workloads are applied, but the genius of the design lies in parallelism from multiple devices. The ULLtraDIMMs regularly outperformed the SATA 6Gb/s Samsung 845DC PRO with lower latency and higher performance across the board. The 12Gb/s SAS HGST SSD800MH proved to be stout competition, but requires an HBA or RAID controller.

The ULLtraDIMM design removes complexity, components, and cabling from server designs. The enhanced density will enable smaller blade and microserver designs. Removing the need for power-consuming and expensive hardware is a big plus. Optimized storage multiplies server efficiency, ultimately requiring fewer servers overall.

Diablo is in the driver's seat for future memory channel storage devices with the recently awarded patent for interfacing a co-processor and I/O devices with main memory systems. The flexible MCS architecture also provides a solid platform for future non-volatile memory technologies and storage protocols. The architecture will eventually evolve into a cache line interface at the application level, which will be the catalyst for rethinking how applications and operating systems utilize system memory.

The Supermicro test platform delivered great performance, and is indicative of the tight OEM integration between SanDisk, Diablo, and their partners. SanDisk offers a complete product portfolio with object storage and caching software, along with SATA, SAS, PCIe products, and now the ULLtraDIMM. The ULLtraDIMM closes the performance gap between storage devices and system memory, and the refined solution features easy-to-use and intuitive management software. Robust endurance, power-loss protection, end-to-end data protection, device-level redundancy, and a five-year warranty are the hallmarks of an advanced storage solution. Tacking on extreme performance, scalability, and low latency is icing on the cake, winning the SanDisk ULLtraDIMM and Diablo MCS architecture the TweakTown Editor's Choice Award.