The power required to run complex, massive clusters of high-performance AI GPUs continues to skyrocket along with the power of the AI chips themselves, but new research promises to reduce the energy consumption required by AI processing by at least 1000x.

In a new peer-reviewed paper, a group of engineering researchers at the University of Minnesota Twin Cities have demonstrated an AI efficiency-boosting technology which, in layman's terms, is a shortcut in the regular practice of AI computations that massively reduces energy consumption for those workloads.

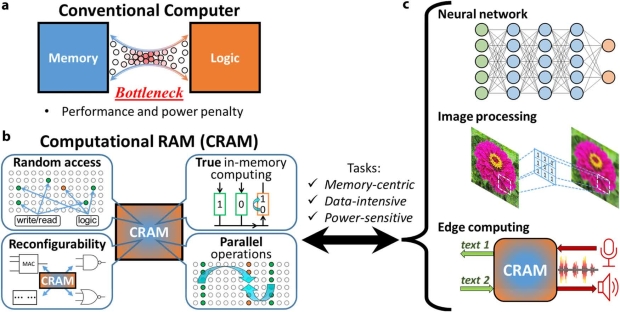

AI computing sees data transferred between the components that process it (logic) and the components that store it (memory and storage). Shuttling this data back and forth is the main power draw: it can consume as much as 200x the energy used in the computation itself, according to this research.
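To put the 200x figure in perspective, here is a quick back-of-the-envelope sketch. It assumes, purely for illustration, that data movement costs exactly 200x the energy of the computation and that nothing else draws power; the function names are hypothetical and not taken from the paper.

```python
def movement_share(ratio: float) -> float:
    """Fraction of total energy spent moving data, given the
    ratio of data-movement energy to computation energy."""
    return ratio / (ratio + 1.0)


def max_saving_factor(ratio: float) -> float:
    """Best-case reduction in total energy if data movement were
    eliminated entirely and computation energy stayed the same."""
    return ratio + 1.0


# Assumption: movement costs exactly 200x the computation.
RATIO = 200.0

print(f"Share of energy spent on data movement: {movement_share(RATIO):.1%}")
print(f"Best-case saving from removing it: {max_saving_factor(RATIO):.0f}x")
```

Under that simplified assumption, nearly all of the energy goes to moving data rather than computing on it, which is why keeping data in place is such an attractive target. This toy model is not how the researchers arrive at their own figures, which come from their comparison against existing hardware.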

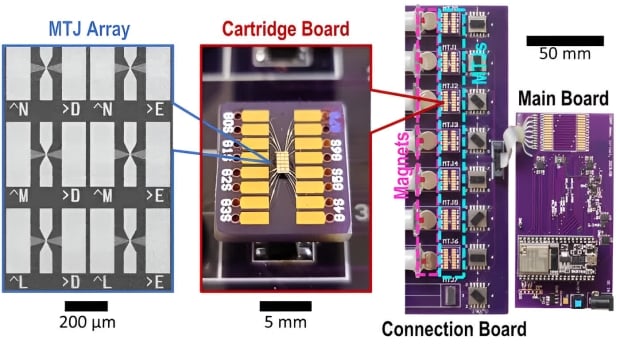

The researchers used CRAM (Computational Random-Access Memory). The CRAM technology the research team developed places a high-density, reconfigurable spintronic in-memory compute substrate inside the memory cells themselves. Nifty.

This differs from processing-in-memory solutions such as Samsung's PIM, which places a programmable computing unit (PCU) inside the memory core. Data still needs to travel from the memory cells to the PCU and back, just not as far.

This is where CRAM steps in.

With CRAM, the data never leaves the memory. Instead, it gets processed entirely within the computer's memory array, with the research team explaining that this allows their CRAM-powered system to run AI computing applications with an energy consumption improvement "on the order of 1000x over a state-of-the-art solution".

The team gave other examples of their CRAM system at work. On the MNIST handwritten digit classifier task, CRAM was 2500x more energy efficient and 1700x as fast as a near-memory processing system using the 16nm process node. This task is used to train AI systems to recognize handwriting.

AI workloads require immense amounts of power, especially when you consider the likes of Elon Musk's xAI startup and its new Memphis Supercluster, which has 100,000 NVIDIA H100 AI GPUs. The facility draws from the grid, but Elon also called in a bunch of high-end portable power generators, and he hasn't even fired up all 100,000 of the AI GPUs yet.

Recent reports say AI workloads are consuming as much energy as entire nations, with a total of 4.3 GW in 2023 that is expected to grow between 26% and 36% in the years to come. So a solution that radically reduces the power consumption of AI chips is a very important milestone for these researchers and for the future of AI semiconductors.