Panmnesia is a company you probably hadn't heard of until today, but the KAIST startup has unveiled cutting-edge IP that lets AI GPUs add external memory using the CXL protocol running over PCIe, opening up new levels of memory capacity for AI workloads.

The current fleets of AI GPUs and AI accelerators use their on-board memory, usually super-fast HBM, but capacity is limited: the current NVIDIA Hopper H100 AI GPU has 80GB. AMD and NVIDIA's newer AI chips raise that to up to 141GB of HBM3E (NVIDIA's H200 AI GPU) and up to 192GB (HBM3E on NVIDIA's B200 AI GPU, HBM3 on AMD's Instinct MI300X).
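To put those capacities in perspective, here is a rough sketch of how many GPUs it would take to hold a workload in HBM alone. The 1,000GB working set is a hypothetical figure picked purely for illustration, and the helper name `gpus_needed` is ours; only the per-GPU capacities come from the numbers quoted above.

```python
import math

# HBM capacities quoted in the article, in GB.
HBM_CAPACITY_GB = {"H100": 80, "H200": 141, "B200": 192, "MI300X": 192}


def gpus_needed(working_set_gb: float, hbm_gb: float) -> int:
    """Minimum number of GPUs whose combined HBM can hold the working set."""
    return math.ceil(working_set_gb / hbm_gb)


# Hypothetical working set size (assumption, not from the article).
WORKING_SET_GB = 1000

for name, capacity in HBM_CAPACITY_GB.items():
    print(f"{name}: {gpus_needed(WORKING_SET_GB, capacity)} GPUs")
```

This ignores everything except raw capacity (no activations, replication, or interconnect overhead), so treat it as a back-of-the-envelope estimate only.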

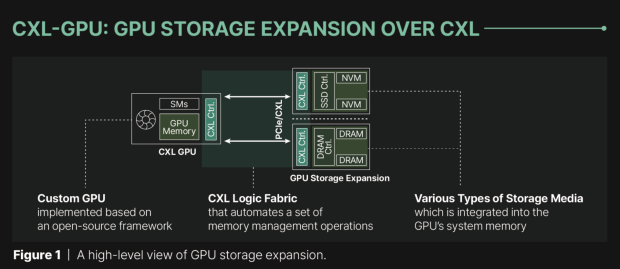

But now, Panmnesia's new CXL IP will let GPUs access memory from DRAM and SSDs, expanding memory capacity beyond the built-in HBM... very nifty. The startup, which comes out of South Korea's KAIST (Korea Advanced Institute of Science and Technology), bridges the connectivity with CXL over PCIe links, which should make adoption of the new tech easier. Regular AI accelerators don't have the subsystems required to connect to and use CXL for memory expansion directly, so they rely on solutions like UVM (Unified Virtual Memory), which is slower and defeats the purpose... which is where Panmnesia's new IP comes into play.

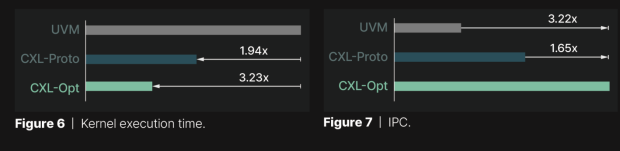

Panmnesia benchmarked its own "CXL-Opt" solution against prototypes from Samsung and Meta, which it has labeled "CXL-Proto". CXL-Opt has much lower round-trip latency, which is the time taken for data to travel from the GPU to the memory and back again. Panmnesia's CXL-Opt enjoyed two-digit nanosecond latency, versus 250ns for its competitors. CXL-Opt's execution time is also far lower than UVM's, as it hits IPC performance improvements of 3.22x over UVM. Impressive.
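For a sense of what those figures imply, here is a minimal sketch of the arithmetic. Panmnesia only says CXL-Opt's latency is "two-digit", so the code brackets it between 10ns and 99ns rather than assuming a specific value. The execution-time estimate assumes the same instruction count and clock speed for both runs, which is our simplification, and the function names are ours.

```python
# Figures quoted in the article.
CXL_PROTO_LATENCY_NS = 250.0   # round-trip latency of CXL-Proto
IPC_SPEEDUP_OVER_UVM = 3.22    # CXL-Opt IPC relative to UVM

# "Two-digit nanosecond" only bounds CXL-Opt's latency to this range.
CXL_OPT_LATENCY_RANGE_NS = (10.0, 99.0)


def latency_ratio(baseline_ns: float, candidate_ns: float) -> float:
    """How many times lower the candidate's latency is than the baseline's."""
    return baseline_ns / candidate_ns


def relative_execution_time(ipc_speedup: float) -> float:
    """Execution time relative to the baseline, assuming the same
    instruction count and clock frequency (an assumption, not a
    figure from Panmnesia)."""
    return 1.0 / ipc_speedup


best_case_ns, worst_case_ns = CXL_OPT_LATENCY_RANGE_NS
low = latency_ratio(CXL_PROTO_LATENCY_NS, worst_case_ns)
high = latency_ratio(CXL_PROTO_LATENCY_NS, best_case_ns)
rel_time = relative_execution_time(IPC_SPEEDUP_OVER_UVM)

print(f"CXL-Opt latency is {low:.2f}x to {high:.2f}x lower than CXL-Proto")
print(f"CXL-Opt runs in about {rel_time:.0%} of UVM's execution time")
```

In other words, the latency gap is at least roughly 2.5x, and a 3.22x IPC gain works out to around a third of UVM's execution time under those assumptions.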

The new CXL-Opt solution from Panmnesia could make big waves in the AI GPU and AI accelerator market, offering a middle ground between stacking ever more HBM memory chips and moving towards a far more efficient way of expanding memory. Panmnesia is one of the first with this kind of CXL IP, so it'll be interesting to see where things go from here.