The latest IEA Electricity 2024 report states that electricity demand from the data center sector in countries like the United States and China will increase dramatically by 2030. If you've been keeping track of the AI data center plans from the likes of Google, Meta, Amazon, and others, you're aware that this year alone, hundreds of thousands of high-performance NVIDIA GPUs are set to be installed in various locations.

The report states, "The AI server market is currently dominated by tech firm NVIDIA, with an estimated 95% market share. In 2023, NVIDIA shipped 100,000 units that consume an average of 7.3 TWh of electricity annually. By 2026, the AI industry is expected to have grown exponentially to consume at least ten times its demand in 2023."
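To put the quoted figures in per-server terms, the arithmetic can be sketched as below. This is a rough back-of-the-envelope calculation, assuming the 7.3 TWh figure covers exactly the 100,000 units mentioned and that they run around the clock; the function and constant names are illustrative and not from the report.

```python
# Figures quoted from the IEA Electricity 2024 report.
UNITS_SHIPPED_2023 = 100_000
ANNUAL_ENERGY_TWH = 7.3

# Assumption: servers run 24/7 for a non-leap year.
HOURS_PER_YEAR = 365 * 24


def per_unit_annual_mwh(total_twh: float, units: int) -> float:
    """Annual energy per unit in MWh (1 TWh = 1,000,000 MWh)."""
    return total_twh * 1_000_000 / units


def per_unit_average_kw(total_twh: float, units: int) -> float:
    """Average continuous draw per unit in kW, assuming 24/7 operation."""
    annual_kwh = per_unit_annual_mwh(total_twh, units) * 1_000
    return annual_kwh / HOURS_PER_YEAR


def projected_2026_twh(total_twh: float, growth_factor: float = 10.0) -> float:
    """Demand implied by the report's 'at least ten times' statement."""
    return total_twh * growth_factor


if __name__ == "__main__":
    mwh = per_unit_annual_mwh(ANNUAL_ENERGY_TWH, UNITS_SHIPPED_2023)
    kw = per_unit_average_kw(ANNUAL_ENERGY_TWH, UNITS_SHIPPED_2023)
    print(f"Per unit: {mwh:.1f} MWh per year, about {kw:.2f} kW on average")
    print(f"Implied 2026 floor: {projected_2026_twh(ANNUAL_ENERGY_TWH):.0f} TWh")
```

Under these assumptions, each unit works out to roughly 73 MWh per year, which suggests the "units" in question are multi-GPU servers rather than individual cards.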

According to Rene Haas, CEO of Arm (via The Wall Street Journal), AI accounts for around 4% of current power usage in the United States, and this could rise to around 25% by 2030. He also described generative AI models like ChatGPT as "insatiable" when it comes to electricity.

According to the IEA Electricity 2024 report, a ChatGPT request (2.9 Wh per request) uses nearly 10 times as much electricity as a standard non-AI Google search (0.3 Wh). If AI is to become ubiquitous and embedded in everything from operating systems to search engines, the power required to keep these generative AI systems online will grow with it.
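The per-request gap can be scaled up to see what it means over a year. The sketch below uses the two per-request figures quoted above; the daily request volume is a purely hypothetical placeholder chosen for illustration, not a number from the report.

```python
# Per-request figures quoted from the IEA Electricity 2024 report.
CHATGPT_WH_PER_REQUEST = 2.9
GOOGLE_SEARCH_WH_PER_REQUEST = 0.3

# Hypothetical assumption for illustration only: one billion requests per day.
HYPOTHETICAL_REQUESTS_PER_DAY = 1_000_000_000


def energy_ratio(ai_wh: float, search_wh: float) -> float:
    """How many times more energy an AI request uses than a plain search."""
    return ai_wh / search_wh


def extra_annual_twh(ai_wh: float, search_wh: float, requests_per_day: int) -> float:
    """Additional yearly energy (TWh) if every search became an AI request."""
    extra_wh_per_year = (ai_wh - search_wh) * requests_per_day * 365
    return extra_wh_per_year / 1e12  # 1 TWh = 1e12 Wh


if __name__ == "__main__":
    ratio = energy_ratio(CHATGPT_WH_PER_REQUEST, GOOGLE_SEARCH_WH_PER_REQUEST)
    extra = extra_annual_twh(
        CHATGPT_WH_PER_REQUEST,
        GOOGLE_SEARCH_WH_PER_REQUEST,
        HYPOTHETICAL_REQUESTS_PER_DAY,
    )
    print(f"AI request vs. search: {ratio:.1f}x")
    print(f"Extra demand at the assumed volume: {extra:.2f} TWh per year")
```

Because the result scales linearly with request volume, swapping in a different daily figure changes the total proportionally.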

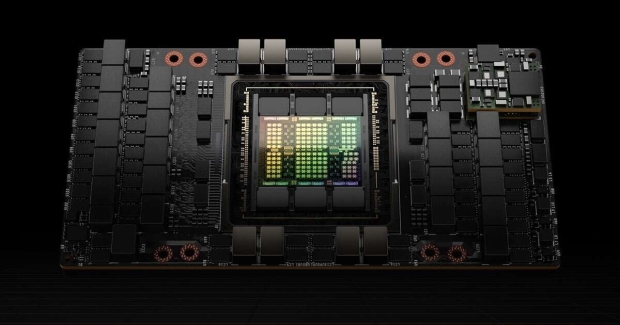

Moving on to OpenAI's Sora, which generates convincing videos, the power requirement rises sharply, with some reports indicating that it would take a single NVIDIA H100 AI GPU an hour to generate a five-minute video. The NVIDIA H100 has a peak power consumption of roughly 700W, and AI models like this require upwards of 100,000 NVIDIA H100 GPUs to train.
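Those numbers translate into energy fairly directly. The following is a minimal sketch that assumes the GPU runs at its roughly 700W peak for the whole hour and counts GPU power only, ignoring cooling, networking, and host servers; the names are illustrative.

```python
# Figures quoted in the article.
H100_PEAK_WATTS = 700            # approximate peak power of one H100
HOURS_PER_FIVE_MIN_VIDEO = 1.0   # reported generation time on a single H100
TRAINING_GPU_COUNT = 100_000     # "upwards of 100,000" GPUs for training
CHATGPT_WH_PER_REQUEST = 2.9     # IEA figure, used here for comparison


def video_energy_wh(gpu_watts: float, hours: float) -> float:
    """Energy for one video in Wh, assuming constant peak draw."""
    return gpu_watts * hours


def cluster_peak_mw(gpu_watts: float, gpu_count: int) -> float:
    """Combined peak GPU power of a cluster in MW (GPUs only)."""
    return gpu_watts * gpu_count / 1_000_000


if __name__ == "__main__":
    video_wh = video_energy_wh(H100_PEAK_WATTS, HOURS_PER_FIVE_MIN_VIDEO)
    equivalent_requests = video_wh / CHATGPT_WH_PER_REQUEST
    cluster_mw = cluster_peak_mw(H100_PEAK_WATTS, TRAINING_GPU_COUNT)
    print(f"One five-minute video: {video_wh:.0f} Wh")
    print(f"Roughly {equivalent_requests:.0f} ChatGPT requests' worth of energy")
    print(f"Training cluster peak GPU power: {cluster_mw:.0f} MW")
```

Real-world draw would differ, since GPUs rarely sit at peak continuously and data center overhead adds to the total.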

NVIDIA's H100 Tensor Core GPU, image credit: NVIDIA.

The good news is that governments and tech companies are aware of the issue and are looking into solutions, including nuclear-powered Small Modular Reactors (SMRs) installed on-site next to AI data centers. However, this technology is not yet proven and still needs to be developed and deployed at scale.

The other option would be to limit the growth and expansion of power-hungry AI data centers by placing restrictions on AI, but that seems unlikely. The IEA Electricity 2024 report makes it clear that efficiency improvements and regulations will be crucial in "restraining data center energy consumption." Renewable energy will also play a role.