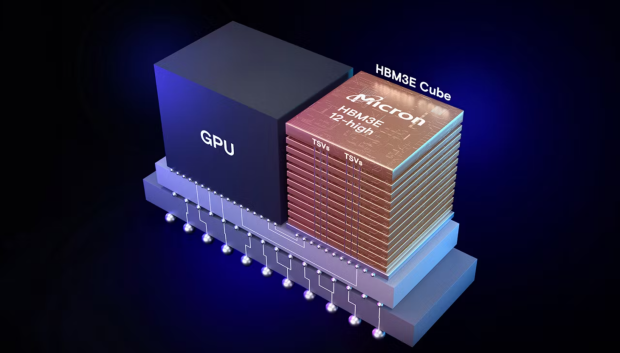

Micron has announced that it is shipping production-capable HBM3E 12-Hi memory in capacities of up to 36GB, delivering more than 1.2TB/sec of memory bandwidth for AI GPUs.

The new Micron HBM3E 12-Hi offers 36GB of capacity, a 50% increase over current HBM3E 8-Hi stacks. That lets much larger AI models, such as the 70-billion-parameter Llama 2, run on a single AI processor. The extra capacity also shortens time to insight by avoiding CPU offload and the delays of GPU-to-GPU communication.
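The capacity figures can be checked with simple arithmetic. The sketch below assumes each stack is built from 24Gb DRAM dies (which is what 36GB across 12 dies, or 24GB across 8 dies, works out to) and estimates the memory needed for Llama 2 70B's weights alone at FP16 (2 bytes per parameter), ignoring the KV cache and activations. The helper names are illustrative and do not come from Micron.

```python
import math


def stack_capacity_gb(dies: int, die_gbit: int) -> float:
    """Capacity of one HBM stack in GB: number of dies x per-die density (Gbit) / 8."""
    return dies * die_gbit / 8


def weights_gb(params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights only (no KV cache or activations), in GB."""
    return params * bytes_per_param / 1e9


def stacks_needed(required_gb: float, per_stack_gb: float) -> int:
    """Minimum whole number of stacks whose combined capacity covers required_gb."""
    return math.ceil(required_gb / per_stack_gb)


cap_12hi = stack_capacity_gb(12, 24)  # 12 x 24Gb dies -> 36.0 GB
cap_8hi = stack_capacity_gb(8, 24)    # 8 x 24Gb dies  -> 24.0 GB
print(f"capacity gain: {cap_12hi / cap_8hi - 1:.0%}")  # capacity gain: 50%

llama2_70b = weights_gb(70e9, 2)      # FP16 weights -> 140.0 GB
print(stacks_needed(llama2_70b, cap_12hi))  # 4
print(stacks_needed(llama2_70b, cap_8hi))   # 6
```

Under these assumptions, the FP16 weights of a 70B-parameter model fit in four 12-Hi stacks instead of six 8-Hi stacks, which is why higher per-stack capacity makes single-processor deployment easier. Real deployments also need room for the KV cache and activations, so actual requirements are higher.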

Micron says its new HBM3E 12-Hi 36GB draws "significantly" less power than competitors' HBM3E 8-Hi 24GB memory while pushing more than 1.2TB/sec of memory bandwidth at pin speeds above 9.2Gbps. Together, these benefits give Micron's new HBM3E maximum throughput at the lowest power consumption, an important combination for the power-hungry data centers of the future.
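HBM bandwidth follows directly from pin speed and interface width. Assuming the standard 1024-bit data interface of an HBM3/HBM3E stack, the sketch below converts a pin speed into peak per-stack bandwidth and back; the function names are hypothetical.

```python
HBM_INTERFACE_BITS = 1024  # data width of one HBM3/HBM3E stack, in bits


def stack_bandwidth_tbps(pin_gbps: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Peak bandwidth of one stack in TB/s: pin rate x width, then bits -> bytes, GB -> TB."""
    return pin_gbps * width_bits / 8 / 1000


def pin_speed_for(target_tbps: float, width_bits: int = HBM_INTERFACE_BITS) -> float:
    """Pin speed in Gb/s needed for a single stack to reach target_tbps."""
    return target_tbps * 1000 * 8 / width_bits


print(f"{stack_bandwidth_tbps(9.2):.3f} TB/s at 9.2 Gb/s")    # 1.178 TB/s at 9.2 Gb/s
print(f"{pin_speed_for(1.2):.3f} Gb/s needed for 1.2 TB/s")  # 9.375 Gb/s needed for 1.2 TB/s
```

At exactly 9.2Gb/s the result is about 1.18TB/s, so reaching 1.2TB/s implies a pin speed of roughly 9.4Gb/s, consistent with Micron's "over 9.2Gbps" wording. These are theoretical peak figures; sustained bandwidth in a real system is lower.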

The company adds that its new HBM3E 12-Hi memory features fully programmable MBIST (memory built-in self-test) that can run system-representative traffic at full-spec speed, improving test coverage for faster validation, shortening time-to-market (TTM), and enhancing system reliability.
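Micron does not describe the test patterns its MBIST runs. As a generic illustration of what a memory built-in self-test engine typically executes, here is a sketch of the textbook March C- algorithm run against a small simulated memory with injected stuck-at faults. The `FaultyMemory` class and `march_c_minus` function are hypothetical and are not Micron's implementation; a programmable MBIST would let engineers load patterns like this, or more system-representative ones.

```python
from typing import Callable, Dict, List, Optional


class FaultyMemory:
    """Hypothetical bit-per-address memory model with optional stuck-at faults."""

    def __init__(self, size: int, stuck_at: Optional[Dict[int, int]] = None):
        self.cells = [0] * size
        self.stuck_at = stuck_at or {}  # address -> value the cell always returns

    def read(self, addr: int) -> int:
        return self.stuck_at.get(addr, self.cells[addr])

    def write(self, addr: int, value: int) -> None:
        self.cells[addr] = value


def march_c_minus(size: int, read: Callable[[int], int],
                  write: Callable[[int, int], None]) -> List[int]:
    """Run March C- and return the sorted list of failing addresses."""
    failures = set()
    up = range(size)
    down = range(size - 1, -1, -1)

    def element(order, expect, new_value):
        # One march element: optionally read-and-compare, then optionally write.
        for addr in order:
            if expect is not None and read(addr) != expect:
                failures.add(addr)
            if new_value is not None:
                write(addr, new_value)

    element(up, None, 0)    # M0: write 0 to every cell
    element(up, 0, 1)       # M1: ascending,  read 0, write 1
    element(up, 1, 0)       # M2: ascending,  read 1, write 0
    element(down, 0, 1)     # M3: descending, read 0, write 1
    element(down, 1, 0)     # M4: descending, read 1, write 0
    element(up, 0, None)    # M5: read 0 from every cell
    return sorted(failures)


healthy = FaultyMemory(16)
print(march_c_minus(16, healthy.read, healthy.write))  # []
faulty = FaultyMemory(16, stuck_at={5: 1, 9: 0})
print(march_c_minus(16, faulty.read, faulty.write))    # [5, 9]
```

The ascending and descending passes are what let March tests catch address-decoder and some coupling faults as well as simple stuck-at cells; running such patterns at full-spec speed is what the announcement credits for better test coverage.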


In summary, here are the Micron HBM3E 12-high 36GB highlights:

- Undergoing multiple customer qualifications: Micron is shipping production-capable 12-high units to key industry partners to enable qualifications across the AI ecosystem.

- Seamless scalability: With 36GB of capacity (a 50% increase in capacity over current HBM3E offerings), HBM3E 12-high allows data centers to scale their increasing AI workloads seamlessly.

- Exceptional efficiency: Micron HBM3E 12-high 36GB delivers significantly lower power consumption than the competing HBM3E 8-high 24GB solution.

- Superior performance: With pin speed greater than 9.2 gigabits per second (Gb/s), HBM3E 12-high 36GB delivers more than 1.2 TB/s of memory bandwidth, enabling lightning-fast data access for AI accelerators, supercomputers, and data centers.

- Expedited validation: Fully programmable MBIST capabilities can run at speeds representative of system traffic, providing improved test coverage for expedited validation, enabling faster time to market, and enhancing system reliability.