Introduction

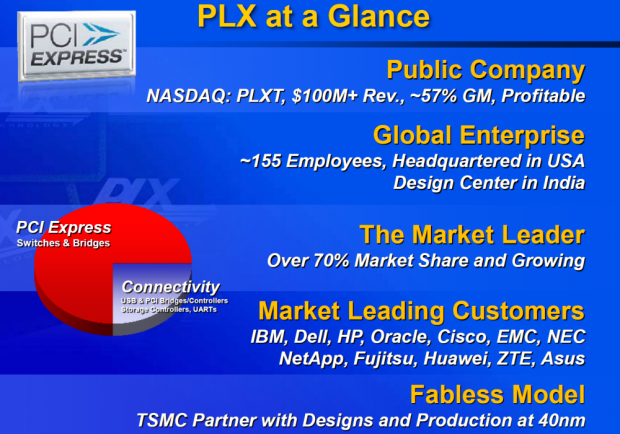

PLX Technology Inc. has been developing leading I/O interconnect silicon and software since 1994. Many associate PLX Technology with PCI Express switches and bridges, but they also manufacture USB controllers, legacy PCI bridges, and consumer storage controllers. Their PCI Express switches and bridges are common components on server motherboards and other products, and they hold a commanding 70 percent market share in this field.

PLX has provided the catalyst for explosive PCI Express technology advancements. They sampled the first PCIe switch in 2004, and their first PCIe bridge followed soon after. By 2007, they had shipped over 1 million PCIe chips; today the company ships over a million units per quarter and has sold 100 million ports.

PLX Technology is developing PCIe Gen4 while also qualifying its ExpressFabric converged fabric. The advent of hyperscale and cloud computing has spurred a re-imagination of datacenter architecture. Disruptive technologies and Open Compute initiatives are challenging the old way of thinking and delivering designs purpose-built for optimum efficiency. The emergence of software-defined datacenters (SDDC) and software-defined storage (SDS) requires new designs that allow total control of resource allocation.

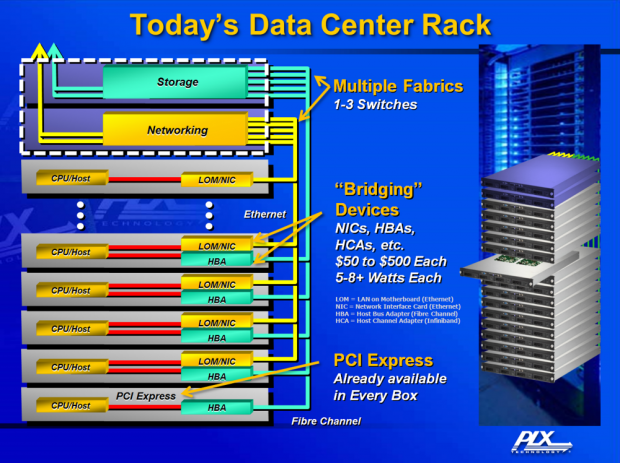

Today's datacenter rack is inherently inefficient. Each server has its own CPU, DRAM, networking, and storage equipment. External shared storage is reached through HBAs and HCAs, along with their own switches and interconnects. Network access follows a similar model: each server has its own LOM (LAN on motherboard) or NIC connection to the datacenter network.

Each individual server is essentially its own fully functioning ecosystem, but this is not an efficient design.

A new holistic approach is required, one that leverages the benefits of each subsystem independently. Let's take a closer look at the details of PLX Technology's ExpressFabric.

Rack Scale Architectures

Rack Scale

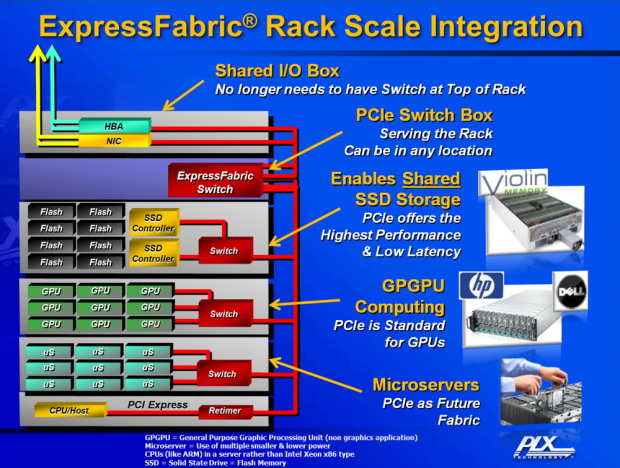

A simpler design is needed to minimize the amount of hardware and networking cluttering the server rack. Managing the rack as a single unit with shareable resources will deliver the most efficient infrastructure. PCIe has numerous intrinsic benefits: it is low power, widely accepted, economical, and integrated at the base system level. The CPU and chipset already have integrated PCIe, and applications support the protocol natively. Extending PCIe out of the server chassis is a natural progression of existing designs.

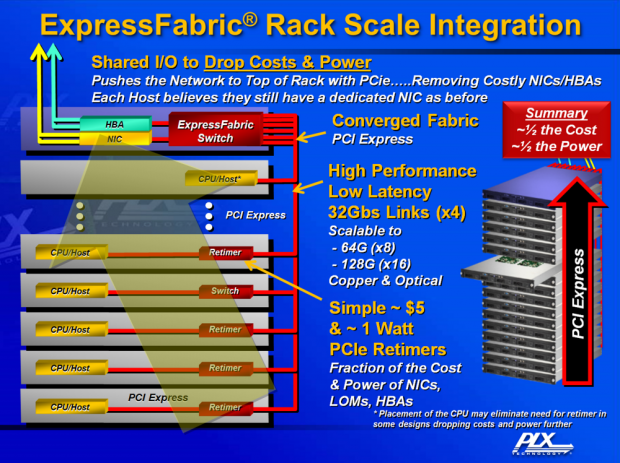

The first step toward widespread adoption of PLX Technology's ExpressFabric will be a reduction in the amount of networking equipment. Current designs use multiple network fabrics to accomplish very similar tasks, which leads to excess hardware, cost, power consumption, cabling, and complexity.

Removing this extra layer of hardware greatly simplifies the design. Using PCIe as a converged fabric provides a single high-performance, low-latency link between all components. 32Gb/s links (x4, or four lanes, in PCIe parlance) scale to 64Gb/s (x8) and 128Gb/s (x16), providing a robust interconnect that can adjust to different requirements. Copper and optical QSFP+ interconnects are standard fare in the datacenter and offer a proven, reliable means of connection.
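The link rates above follow from per-lane arithmetic. The quoted figures match PCIe Gen3 signaling (8 GT/s per lane with 128b/130b encoding); the article does not name the generation, so treat that as an assumption in the sketch below. It computes the raw and post-encoding rate per direction for each link width. Packet and protocol overhead reduce usable throughput further and are not modeled here.

```python
# Sketch, assuming PCIe Gen3 signaling; the article quotes raw link rates
# and does not state which PCIe generation ExpressFabric uses.
GEN3_GT_PER_LANE = 8.0      # gigatransfers per second, per lane, per direction
GEN3_ENCODING = 128 / 130   # 128b/130b line-code efficiency


def link_rates(lanes: int) -> tuple[float, float]:
    """Return (raw Gb/s, post-encoding Gb/s) per direction for a Gen3 link."""
    raw = GEN3_GT_PER_LANE * lanes  # one bit per transfer per lane
    return raw, raw * GEN3_ENCODING


for width in (4, 8, 16):
    raw, eff = link_rates(width)
    print(f"x{width}: {raw:.0f} Gb/s raw, ~{eff:.1f} Gb/s after encoding")
# x4: 32 Gb/s raw, ~31.5 Gb/s after encoding
# x8: 64 Gb/s raw, ~63.0 Gb/s after encoding
# x16: 128 Gb/s raw, ~126.0 Gb/s after encoding
```

Doubling the lane count doubles the rate, which is why x8 and x16 links map cleanly onto the 64G and 128G figures.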

ExpressFabric forgoes the expensive NICs, LOMs, and HBAs found in each server and instead employs retimers. Retimers extend PCIe out of the chassis, consume less than one watt of power, and cost roughly $5. These connections are routed to the ExpressFabric top-of-rack switch, which houses an HBA and a NIC for communication outside of the rack. In this instance, simple retimers have replaced 16 HBAs and 16 NICs, radically lowering power consumption, component cost, and complexity.
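A rough tally of the retimer side of that trade, as a minimal sketch: it assumes one retimer per server (the article does not state the count) and takes the article's figures of roughly $5 and under one watt per retimer as fixed inputs. The article gives no NIC or HBA prices, so the other side of the comparison is deliberately left out.

```python
# Hypothetical per-rack tally. Assumes one retimer per server (not stated in
# the article); cost and power figures are the article's approximations.
RETIMER_COST_USD = 5.0
RETIMER_MAX_WATTS = 1.0  # article says "less than one watt", so this is an upper bound


def retimer_budget(servers: int, per_server: int = 1) -> tuple[float, float]:
    """Return (total retimer cost in USD, upper bound on total power in W)."""
    count = servers * per_server
    return count * RETIMER_COST_USD, count * RETIMER_MAX_WATTS


cost, watts = retimer_budget(16)
print(f"16 servers: ~${cost:.0f} in retimers, under {watts:.0f} W total")
# 16 servers: ~$80 in retimers, under 16 W total
```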

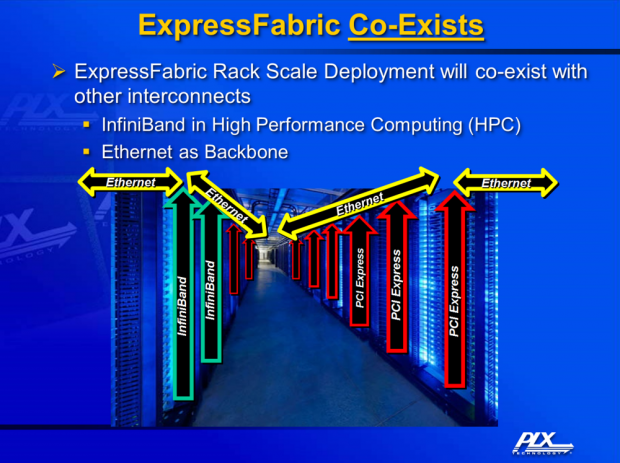

Perhaps most importantly, the servers treat the NIC and HBA in the top-of-rack switch as they would if those devices were physically installed in the server. Because the servers share them, the networking hardware is fully utilized instead of standing idle or operating at only a fraction of its capability. Ethernet will provide the backbone, and InfiniBand will be used in HPC applications.

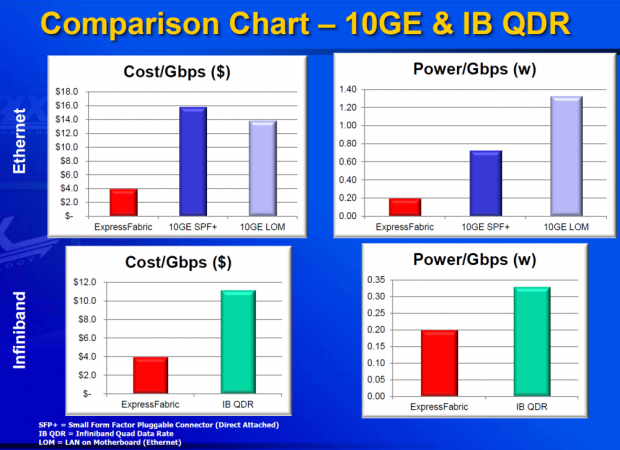

Measured on a per-Gbps basis, the ExpressFabric converged networking solution provides a radical reduction in cost and power consumption over both traditional Ethernet and InfiniBand solutions. Peripheral advantages, such as lower heat output from the servers, simplified management, and reduced maintenance, add to the benefits of these deployments.

Server Disaggregation

The initial revisions of ExpressFabric are creating a path to full server disaggregation. Disaggregation is the holy grail of efficient architectures. Current software-defined storage and software-defined datacenter approaches will never reach their full potential without resource pooling in disaggregated architectures.

In this design, ExpressFabric spreads server components across separate resource pools. PCIe provides plenty of speed for this architecture; after all, PCIe is the fabric that binds these components inside today's servers. The PCIe interconnect delivers data between components with speed and latency close to what they experience within a single chassis. This enables quick and efficient allocation of resources to the applications that need them most.

The widespread use of PCIe SSDs, unfortunately, leads to stranded resources. PCIe SSDs often have spare capacity and performance that could be allocated to other systems if the architecture supported it. The same applies to DRAM, GPUs, and CPUs, which are also often stranded and underutilized. A disaggregated design allows multiple applications to fully utilize these premium resources.
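To make stranded capacity concrete, here is a minimal, hypothetical model of a resource pool. The `Device` and `ResourcePool` names and the greedy most-free-first policy are illustrative assumptions, not PLX software or an ExpressFabric management API. Spare capacity on PCIe SSDs installed in different servers is treated as one pool, and a request is filled from whichever devices have room.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """A pooled resource such as a PCIe SSD, with capacity in GB (hypothetical model)."""
    name: str
    capacity_gb: int
    allocated_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.allocated_gb


@dataclass
class ResourcePool:
    devices: list[Device] = field(default_factory=list)

    def allocate(self, app: str, size_gb: int) -> dict[str, int]:
        """Carve size_gb out of the devices with the most free space first.

        Returns a map of device name -> GB granted to `app`. Raises ValueError,
        reserving nothing, if the pool as a whole cannot satisfy the request.
        """
        if size_gb > sum(d.free_gb for d in self.devices):
            raise ValueError(f"pool cannot satisfy {size_gb} GB for {app}")
        grants: dict[str, int] = {}
        remaining = size_gb
        # Sort keys are computed once, before any allocation changes free_gb.
        for dev in sorted(self.devices, key=lambda d: d.free_gb, reverse=True):
            if remaining == 0:
                break
            take = min(dev.free_gb, remaining)
            if take > 0:
                dev.allocated_gb += take
                grants[dev.name] = take
                remaining -= take
        return grants


# Two servers' SSDs, each partly used by its own host, pooled together.
pool = ResourcePool([Device("ssd-a", 800, 700), Device("ssd-b", 800, 200)])
print(pool.allocate("db", 700))  # {'ssd-b': 600, 'ssd-a': 100}
```

Here an application needing 700 GB draws 600 GB from `ssd-b` and 100 GB from `ssd-a`, capacity that would otherwise sit stranded on two separate servers. A production allocator would also weigh performance, locality, and failure domains.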

Using software for adaptive resource allocation minimizes waste and maximizes utilization, and it has the added benefit of simplifying management. It also lays the foundation for a highly scalable architecture.

Most server components have different refresh cycles. DRAM usually remains serviceable across several generations of CPUs, and storage components often do not require fast replacement cycles, yet today's servers are often replaced entirely just to update one component. With resource pooling, each component can be replaced on its own cadence. Another benefit is design efficiency: some components do not require as much airflow or power as others, so consolidating similar components enables denser, more efficient designs.

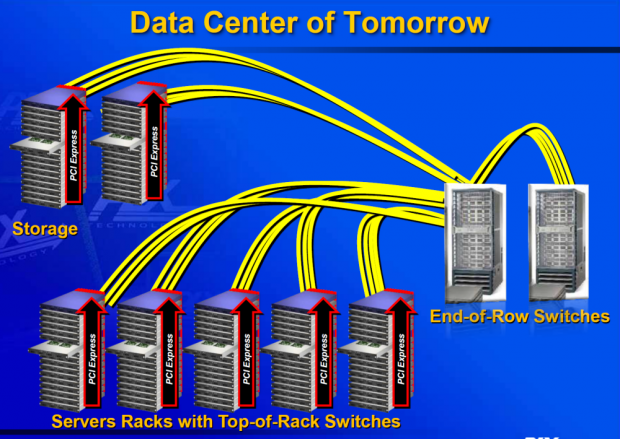

Instead of top-of-rack switches, the future will hold end-of-row switches that handle all communication and resource allocation. The evolution of ExpressFabric will enable intelligent, efficient, and scalable deployment of compute and storage resources.

ExpressFabric Switch

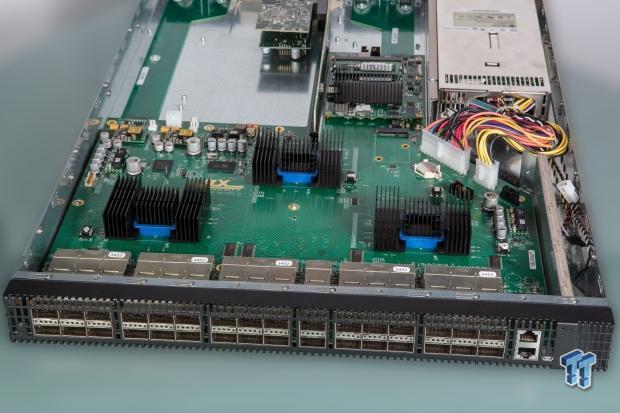

Enabling the future starts with the first generation of ExpressFabric. This 1U top-of-rack switch has a relatively simple design, with three PLX PCIe switches under the black heat sinks. The networking card is located at the rear of the switch; this single card provides all Ethernet communication for the cluster.

Using PCIe simplifies application integration. PCIe accelerates existing protocols, and the software stack already understands the Ethernet and InfiniBand protocols in use today.

QSFP+ connectors line the edge of the switch.

This is a working cluster of servers connected to the ExpressFabric switch. Copper and optical interconnects are used to demonstrate the flexibility of the existing interconnect technology. Copper can handle most close-quarters connections, and optical can stretch its legs up to 100 meters.

There are monitors and USB connections to each server in this mockup. In an actual deployment, the eight cables in the middle would be gone, and the simple row of four cables on the right would provide all communication between the servers. The switch provides plenty of ports to allow more servers, and multiple connections per server if required.

The current implementation tops out at 20Gbits/sec, and we caught a picture of it running at 19.1Gbits/sec during the demo.
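For scale, the demo figure can be expressed as a fraction of the stated ceiling; both numbers come straight from the paragraph above, and the sketch is just that ratio.

```python
# Observed demo throughput versus the stated ceiling of the current implementation.
PEAK_GBPS = 20.0      # "tops out at 20Gbits/sec"
OBSERVED_GBPS = 19.1  # rate captured during the demo

utilization = OBSERVED_GBPS / PEAK_GBPS
print(f"{utilization:.1%} of the 20 Gb/s ceiling")  # 95.5% of the 20 Gb/s ceiling
```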

Final Steps

Disruptive forces are quickly changing the datacenter, and the calls for enhanced efficiency are reaching a fever pitch. PLX Technology's ExpressFabric converged fabric enables a host of new capabilities to address performance challenges in the datacenter with a more efficient design. Reducing component count and power consumption can significantly lower TCO in existing designs, as well as CapEx in new deployments.

Datacenter evolution does not happen overnight. ExpressFabric initially targets small to medium-sized cloud clusters with up to 1,000 nodes and 8 racks. Easy compatibility with existing protocols such as Ethernet and InfiniBand is important as the capabilities of the ubiquitous PCIe connection expand beyond the server chassis.

PLX Technology specializes in providing complete solutions. They firmly believe in the adage "silicon without software is just sand," and focus on providing software development kits, hardware design kits, operating system ports, and firmware solutions for their customers. PLX's long history and numerous partners place them in a good position to steer ExpressFabric into the datacenter.

In the longer term, leveraging PCIe as a converged fabric will begin the transformation of rack-level architectures into resource pooling models. SDDC and SDS movements are falling into place, and the stars are aligning with disruptive initiatives, such as Open Compute, propelling a rapid uptake of new efficient models.

The pace of innovation is quickening, and the need for more performance and efficiency will only be met by fresh approaches to the problems handed down by old architectures. PLX's introduction of ExpressFabric will enable a host of new and exciting opportunities in the datacenter.