Artificial Intelligence - Page 36

All the latest Artificial Intelligence (AI) news with plenty of coverage on new developments, AI tech, NVIDIA, OpenAI, ChatGPT, generative AI, impressive AI demos & plenty more.

NVIDIA to make $210 billion revenue from selling its Blackwell GB200 AI servers in 2025 alone

Morgan Stanley has said that the global supply chain will see a huge boost in AI server orders, with NVIDIA holding on to (and clawing even more) market share to further dominate the AI industry.

NVIDIA is expected to ship between 60,000 and 70,000 units of its Blackwell GB200 AI servers, which will bring in up to $210 billion. Each server costs $2 million to $3 million, so 70,000 GB200 AI servers at $3 million each = $210 billion. NVIDIA is making the NVL72 and NVL36 GB200 AI servers, as well as B100 and B200 AI GPUs on their own.

Morgan Stanley estimates that if NVIDIA's new NVL36 AI cabinet is the biggest seller by quantity, the overall demand for GB200 in 2025 will increase to 60,000 to 70,000 units. The big US cloud companies are the customers lining up for -- or that have already purchased -- NVL36 AI cabinets, but by 2025 we could expect NVL72 AI cabinets to be shipping in higher quantities than NVL36.
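
As a rough sanity check on the headline number, the shipment and price ranges quoted above can be multiplied out. This is only a sketch: the variable names are illustrative, and pairing the low unit count with the low price to get a floor is an assumption, not something Morgan Stanley is reported to have done.

```python
# Figures quoted in the article; the low-end pairing is an illustrative assumption.
UNITS_LOW, UNITS_HIGH = 60_000, 70_000        # GB200 AI servers shipped in 2025
PRICE_LOW, PRICE_HIGH = 2_000_000, 3_000_000  # USD per server


def revenue_range(units_low, units_high, price_low, price_high):
    """Return (lowest, highest) revenue in USD for the given ranges."""
    return units_low * price_low, units_high * price_high


low, high = revenue_range(UNITS_LOW, UNITS_HIGH, PRICE_LOW, PRICE_HIGH)
print(f"${low / 1e9:.0f} billion to ${high / 1e9:.0f} billion")
# prints: $120 billion to $210 billion
```

The $210 billion figure is the top of that range, reached only if all 70,000 units sell at $3 million each.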

Meta releases 'world's largest' AI model trained on $400 million worth of GPUs

Meta has announced its release of Llama 3.1 405B, which is what the company is describing as the "world's largest" open-source large language model.

Meta explains via a new blog post that Llama 3.1 405B is "in a class of its own" with "state-of-the-art capabilities" that rival the leading AI models currently on the market when it comes to general knowledge, steerability, math, multilingual translation, and tool use. Meta directly compares Llama 3.1 405B with competing AI models, such as OpenAI's various GPT models, showcasing the recently released model trained on 15 trillion tokens. A token is a small fragment of text, such as a word or part of a word, that the model reads or generates.

To train this 405 billion parameter model, Meta used 16,000 NVIDIA H100 GPUs, which cost $25,000 each. This means the AI model was trained on $400 million worth of NVIDIA GPUs. Training required 30.84 million GPU hours and produced approximately 11,390 tons of CO2.
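
The hardware figure is simple multiplication, and the GPU-hour total can be turned into a rough wall-clock estimate. The sketch below assumes all 16,000 GPUs ran in parallel for the entire job, which the article does not state, so the day count is a ballpark and not a reported training time.

```python
# Figures quoted in the article.
GPU_COUNT = 16_000
PRICE_PER_GPU = 25_000   # USD
GPU_HOURS = 30_840_000   # total GPU hours for training

hardware_cost = GPU_COUNT * PRICE_PER_GPU

# Assumption: every GPU was busy for the whole run, so hours divide evenly.
hours_per_gpu = GPU_HOURS / GPU_COUNT
days_per_gpu = hours_per_gpu / 24

print(f"Hardware: ${hardware_cost / 1e6:.0f} million")
print(f"Per GPU: {hours_per_gpu:.1f} hours, about {days_per_gpu:.0f} days")
# prints: Hardware: $400 million
# prints: Per GPU: 1927.5 hours, about 80 days
```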

TSMC declined NVIDIA's request for a dedicated packaging manufacturing line for its GPUs

TSMC reportedly turned down a request by NVIDIA CEO Jensen Huang for the Taiwanese semiconductor giant to set up a dedicated packaging manufacturing line for NVIDIA's products.

In the world of chips, semiconductor fabrication and chip packaging have become increasingly important for pumping out as many AI GPUs as possible. Unlike fabrication capacity, packaging has struggled to keep up with the insatiable demand for chips, with TSMC having to dedicate considerable resources towards advanced packaging capacity.

NVIDIA CEO Jensen Huang visited TSMC headquarters earlier this year, meeting with TSMC founder Dr. Morris Chang and the firm's former chairman. Industry sources said Jensen requested the company set up a dedicated packaging line for NVIDIA GPUs.

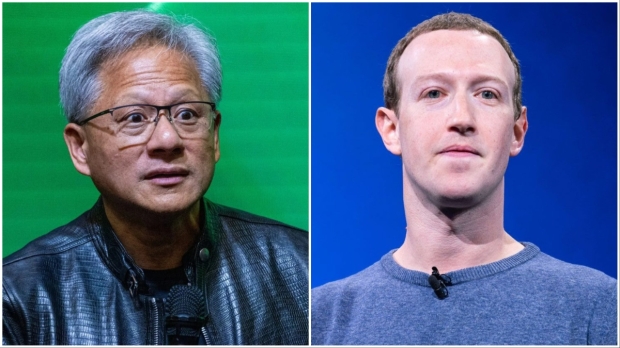

NVIDIA CEO Jensen Huang and Mark Zuckerberg to explore future of AI at SIGGRAPH 2024

SIGGRAPH 2024 has confirmed it will be visited by NVIDIA CEO Jensen Huang and Meta founder Mark Zuckerberg to discuss AI advancements in the tech industry.

For those who don't know, SIGGRAPH 2024, which will be held in Denver, Colorado, from July 28 to August 1, is a conference and exhibition concentrating on the intersection between computer graphics and technology. This year will be the 51st conference and it will feature many keynote presentations from prominent industry figures.

But this year, attendees will get presentations from NVIDIA CEO Jensen Huang, the face of the company whose hardware drives the now extremely hot large language models behind artificial intelligence tools, and Mark Zuckerberg, the CEO of Meta, which is pioneering the push into virtual worlds with affordable virtual reality solutions. Huang will be discussing AI breakthroughs, generative AI, and open source empowering creators and developers.

Intel launches AI Playground, an AI PC starter app and AI image generator for Arc GPUs

Intel has just launched AI Playground into beta, an open-source project for Windows desktop PCs that runs on systems with an Intel Core Ultra-H processor with integrated Intel Arc Graphics or a dedicated Intel Arc GPU with at least 8GB of VRAM. Intel Core Ultra-H support is 'coming soon,' but if you've got an Arc GPU, you can download and fire up AI Playground right now.

So, you're probably wondering what AI Playground is all about. It's described as an 'AI PC starter app' designed to make working with AI easy and flexible. Upon startup, you'll be provided with a list of various models to download and use, or you can provide one of your own. Advanced users will be able to tweak settings to customize the experience.

AI Playground runs locally, allowing users to generate images from text prompts at various resolutions, use AI to enhance or upscale images, or even change their style. AI chatbot functionality can also be called on to answer questions or summarize documents. If this sounds like Microsoft's Copilot+ features, you're on the right track. Apart from the whole "take screenshots of everything you do on your PC" part.

NVIDIA working on B20 AI GPU for China: compliant with US regulations, enters production soon

NVIDIA is reportedly working on a different version of its flagship Blackwell AI GPUs for the Chinese market that would adhere to strict US export controls. That new AI GPU would be the B20 AI GPU.

NVIDIA unveiled its fleet of Blackwell B100, B200, and GB200 AI chips earlier this year, with B200 being over 30x faster in some AI workloads than Hopper H100, but they're far too powerful to be allowed into China. However, a cut-down B20 AI GPU is reportedly in the works, which stays under the thresholds of US export regulations and is destined for China in Q2 2025.

In a new report from Reuters, we're learning that NVIDIA is reportedly preparing the B20 AI GPU for China. Their sources declined to be identified as NVIDIA has "yet to make a public statement." A spokesperson for NVIDIA declined to comment, too.

Elon Musk turns on xAI's new AI supercomputer: 100K liquid-cooled NVIDIA H100 AI GPUs at 4:20am

Elon Musk has just powered on xAI's new supercomputer, powered by 100,000 x NVIDIA H100 AI GPUs worth up to $4 billion at its Memphis Supercluster, the "most powerful AI training cluster in the world".

Elon Musk took to X, posting: "Nice work by xAI team, X team, NVIDIA and supporting companies getting Memphis Supercluster training started at ~4:20am local time. With 100K liquid-cooled H100s on a single RDMA fabric, it's the most powerful AI training cluster in the world!"

NVIDIA's current-gen Hopper H100 80GB AI GPUs cost between $30,000 and $40,000 each, so 100,000 of them come to between $3 billion and $4 billion. xAI raised $6 billion in May 2024 at a valuation of $24 billion, which means Musk's AI startup is investing somewhere between 50% and 67% of its fundraising in NVIDIA's leading H100 AI GPUs.
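
The 50% to 67% range follows directly from the quoted prices and the $6 billion raise. A minimal sketch, with illustrative variable names and assuming the entire GPU bill is paid out of that one funding round:

```python
# Figures quoted in the article.
GPU_COUNT = 100_000
PRICE_LOW, PRICE_HIGH = 30_000, 40_000  # USD per H100
FUNDS_RAISED = 6_000_000_000            # USD, May 2024 round

cost_low = GPU_COUNT * PRICE_LOW
cost_high = GPU_COUNT * PRICE_HIGH

# Assumption: the GPUs are paid for entirely from this round.
share_low = cost_low / FUNDS_RAISED
share_high = cost_high / FUNDS_RAISED

print(f"GPU cost: ${cost_low / 1e9:.0f} billion to ${cost_high / 1e9:.0f} billion")
print(f"Share of funds: {share_low:.0%} to {share_high:.0%}")
# prints: GPU cost: $3 billion to $4 billion
# prints: Share of funds: 50% to 67%
```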

Samsung announces partnership with AMD to supply high-perf substrates for next-gen CPUs, GPUs

Samsung has just announced a new partnership with AMD, with the South Korean giant to provide high-performance substrates for AMD's next-gen CPUs and GPUs for data centers.

Samsung Electro-Mechanics (SEMCO) announced the collaboration with AMD today, to supply high-performance substrates for hyperscale data center compute applications. Market research firm Prismark predicts that the semiconductor substrate market will grow at an average annual rate of around 7% from 15.2 trillion KRW to 20 trillion KRW in 2028.
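
The "around 7%" growth rate can be checked with the standard compound annual growth rate formula. The report does not give the base year for the 15.2 trillion KRW figure, so the four-year span below (2024 to 2028) is an assumption; it is the span that happens to reproduce a rate close to 7%.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


START_KRW_TRILLION = 15.2  # market size at the start of the forecast
END_KRW_TRILLION = 20.0    # forecast market size in 2028
ASSUMED_YEARS = 4          # assumption: base year is 2024, not stated in the report

rate = cagr(START_KRW_TRILLION, END_KRW_TRILLION, ASSUMED_YEARS)
print(f"Implied annual growth: {rate:.1%}")
# prints: Implied annual growth: 7.1%
```

A five-year span would give a noticeably lower rate, so the result is sensitive to the assumed base year.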

SEMCO's 1.9 trillion KRW investment in the FCBGA factory shows it's committed to pushing substrate technology and manufacturing capabilities to meet the highest industry standards and future technology needs. SEMCO's collaboration with AMD focuses on meeting the unique challenges of integrating multiple semiconductor chips (chiplets) on a single large substrate.

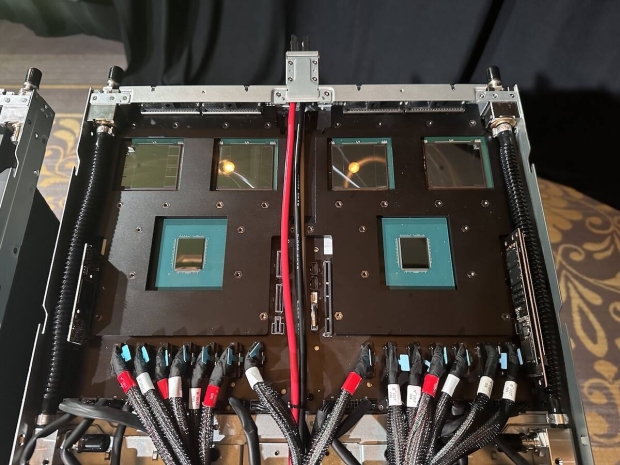

NVIDIA's new GB200 AI server cabinets: leaks in liquid cooling system, emergency work to fix it

NVIDIA was on the eve of unleashing its next-generation GB200 Superchip inside of new AI servers, until rumors of "flooding" surfaced.

UDN is reporting that key water-cooling components from major international manufacturers have been shipped out and are causing water leakage inside the GB200 AI server cabinet. The outlet reports that Taiwanese manufacturers like Shuanghong and Qihong are expected to come on board for the emergency fix and receive the transferred orders.

NVIDIA's issues with its new GB200-powered AI server cabinets seem to be coming from outsourcing some of the water cooling components, including the branch pipe, quick connector, and hose. All of these components handle the movement of coolant, so a fault in any one of them can cause a leak. That's not good for a top-of-the-line $3 million GB200 NVL72 server cabinet.

SK Group boss says AI 'boom could vanish, just like the gold rush disappeared'

The Chairman of the Korea Chamber of Commerce and Industry (KCCI) and SK Group, Chey Tae-won, had some choice words at the recent 47th KCCI Jeju forum.

The SK Group chairman compared the AI boom to the gold rush, adding: "When there was no more gold, the sellers became unable to sell pickaxes. Without making money, the AI boom could vanish, just as the gold rush disappeared".

Chey expects that NVIDIA will continue to be one of the biggest companies by market capitalization over the next three years. The AI boom recently led NVIDIA to a record $3.3 trillion market cap.