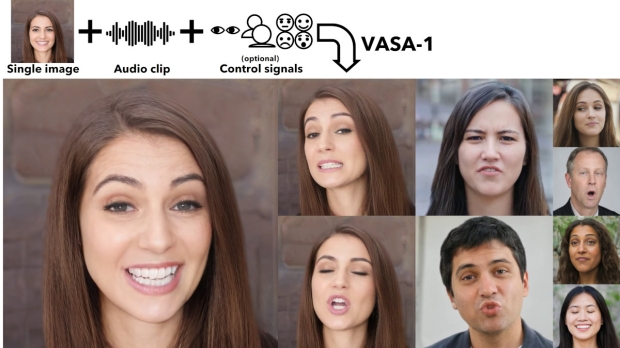

A fresh jaw-dropping AI initiative, this one from Microsoft, works like this: give the AI a photo of someone and an audio clip of their voice, and it'll mock up a video clip of that person chatting away.

You don't have to use a photo of a real person, either: the AI can work with paintings, cartoons, and similar media (Image Credit: Microsoft)

So, from just an image and a minute-long chunk of audio of the person's voice (which is what was used to create the example videos Microsoft shares), you get fully realized talking faces, complete with lifelike facial expressions, head and eye movements, full lip-syncing, and so on.

In short, you get the whole works needed to make it look like a totally realistic video of that person espousing their thoughts on any given topic, all generated in real time by the AI.

Welcome to the world of Microsoft's VASA-1 project. Impressive stuff? It most certainly is. Scary stuff? It most certainly is that, too.

As with any method of AI video generation, the prospect of faked clips is a clear worry: someone could become the victim of a hoax video making them out to be, well, pretty much anything really. All you need is a picture and an audio recording of the victim's voice, and you're good to go.

True enough, this tech won't necessarily be used for nefarious purposes. And obviously Microsoft, and the researchers behind VASA-1, are keen to point to the many potential good uses of this talking-head trickery, such as "improving accessibility for individuals with communication challenges, and offering companionship or therapeutic support to those in need," which is all laudable, of course.

Election distraction?

However, as MS Power User, which spotted this development, points out, the timing isn't great, with this AI-powered video generation tech coming to the fore just ahead of the US election.

The makers do clarify that they are opposed to the use of the tech to create "misleading or harmful contents of real persons," and that they intend to apply their techniques to advancing forgery detection. They further note that videos made using VASA-1 still contain "identifiable artifacts," and that "numerical analysis shows that there's still a gap to achieve the authenticity of real videos."

For now, then, there are still telltale signs that a video is fake, but at some future point, will the AI get so good at this that spotting a fake becomes impossible? And at that stage, how much bigger a problem do 'fake news', defamation, and the like become? Not easy or comfortable questions to answer, let's face it.

Issues and doubts around video generation and fakery have already become a problem for YouTube, so much so that, as we reported recently, the platform moved to introduce rules requiring uploaders to clearly label 'synthetic content' made by generative AI.