Introduction

Companies have a funny tendency to do just about anything for publicity. Being a storage writer requires staying up to date on the news, and in the normal course of my week, I watch companies clamor for attention daily. Attempts range from the mundane to the hilarious, and sometimes even questionable. Some will do anything to separate themselves from the thunderous cacophony of the thousand other companies screaming for attention.

Personally, I'm happy I'm not the one tasked with promoting companies or their products. I can see that there must be something of an addictive quality to nailing it, though, as we observed from the splash of Backblaze headlines in the last few weeks.

Backblaze has had their name emblazoned on the front page of every tech website this week with the results of their HDD reliability blog post. Backblaze's blog is a running anthology of their company, and their efforts to provide users with unlimited online backup for a scant $5 a month. The very fact that you read that sentence makes this all worthwhile for Backblaze - they just got their message out.

This latest post is a result of their initial blog entries covering how long HDDs last, and the follow-up claiming that enterprise HDDs are less reliable than consumer drives. Needless to say, there are holes in the methodology big enough to drive a truck through. However, the headlines led to questions from the public about more detailed drive failure rates, and Backblaze complied with the latest post, "Which Hard Drive Should I Buy?".

While Backblaze is somewhat clear about the results, they don't explain the test environment, and they don't do a good job of explaining why much of their data is worthless to the typical consumer. Further digging unearths questionable practices, at least if the goal is to gather data on drive reliability. Backblaze follows the open source mentality of sharing data on their enclosures, and even shares schematics so readers can build their own Backblaze servers. This provides us some insight into their activities.

Reading their blog posts during the HDD crisis in 2011 is enthralling; they really do go to any length to continue operation in a cost-efficient manner. Unfortunately, that doesn't fall in line with determining the winners or losers of HDD reliability. To add insult to injury, most tech websites have picked this story up and proclaimed the results as a definitive guide to drive reliability.

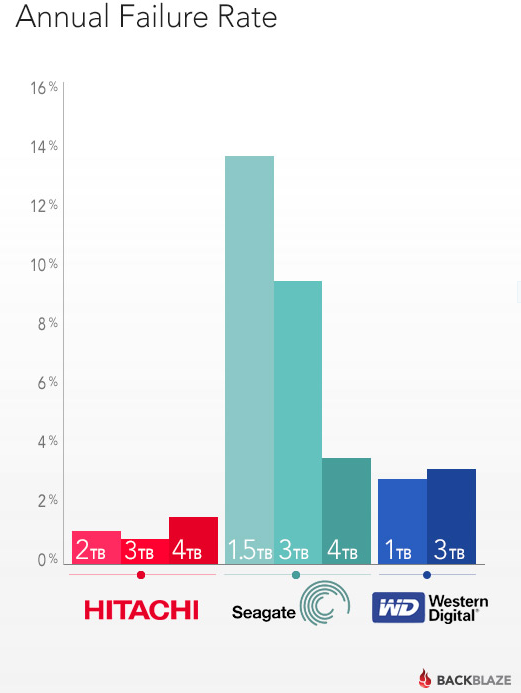

The Backblaze results have been tallied and placed in nice charts, and winners proclaimed. Hitachi and Western Digital appear to be the winners, and Seagate comes in a distant third. However, in this case, even the winners are losers. Let's fire up the truck and drive through the holes in this test, and explain exactly why the results shouldn't affect anyone's purchasing decision.

Drive Sourcing

Backblaze unabashedly sources the cheapest consumer drives they can find for storing their customers' data. Their noble goal is to provide storage as cheaply as possible, and then pass those savings along to their customers. They do not use drives indiscriminately; each model goes through a short test phase to ensure the drive suits their purposes. Pairing these cheap drives with various RAID and replication schemes provides enough integrity to safely store data.

During the Thailand flooding in October 2011, Backblaze had to go to extreme lengths to procure enough drives to sustain operations. With the prices of HDDs rising sharply, and drives in short supply, they literally took to the streets as a drastic form of damage control. They named the practice 'drive farming'.

First, they found that buying external drives was very cost-effective. The whims of the market dictate that external drives are often cheaper than internal desktop drives. These drives come in small enclosures and are usually connected via USB or eSATA. Backblaze buys these drives, and strips the drives from the enclosures in a process they lovingly refer to as "shucking". Much like shucking an ear of corn, they rip the enclosure apart and out pops a usable HDD.

At first they pillaged area Fry's and Costco stores until some employees were eventually banned from purchasing more drives. They fanned out further and enlisted the help of friends and family to continue purchasing drives, even detailing complex purchasing schedules to skirt per-customer limits. When this approach began to fizzle in the local area, they even pondered going cross-country in a Ryder truck. This eventually led to crowdsourcing. Backblaze offered their readers a $5 bounty per drive for drives purchased and sent to them during a Costco sale.

This practice exhibits amazing ingenuity and a laudable effort to deliver the best bang for the buck to their customers. Unfortunately, it doesn't mesh well with creating a stable sample pool to determine drive reliability. Backblaze also acknowledges including refurbished drives, and drives known to be RMA returns, in the sample pool.

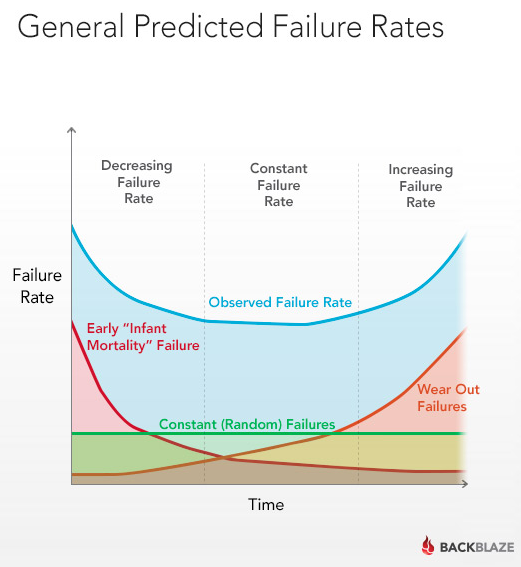

The majority of Backblaze's failures occur in the first few weeks of service, which is understandable considering their drive purchasing methods. The typical 'bathtub curve' of failures is expected with many storage devices, with the highest chance of failure in the beginning and ending stages of the product's life. However, it is reasonable to conclude that their drive sourcing methods tainted their results.
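For readers unfamiliar with the bathtub curve, here is a minimal sketch of the idea. It assumes the failure (hazard) rate can be modeled as the sum of a decreasing early-life term, a constant random-failure term, and an increasing wear-out term. The Weibull form and every parameter below are illustrative assumptions of mine, not values taken from Backblaze or any manufacturer.

```python
def weibull_hazard(t_years, shape, scale):
    """Weibull hazard rate: falls over time if shape < 1, rises if shape > 1."""
    return (shape / scale) * (t_years / scale) ** (shape - 1)


def bathtub_hazard(t_years):
    """Illustrative bathtub curve built from three assumed components."""
    early_life = weibull_hazard(t_years, shape=0.5, scale=20.0)  # infant mortality
    random_failures = 0.02                                       # flat middle of the tub
    wear_out = weibull_hazard(t_years, shape=5.0, scale=6.0)     # end-of-life wear
    return early_life + random_failures + wear_out


if __name__ == "__main__":
    # Print the modeled hazard at a few ages (in years) to show the tub shape.
    for age in (0.05, 0.5, 2.0, 4.0, 6.0):
        print(f"age {age:>4} years: hazard {bathtub_hazard(age):.3f} per year")
```

In this toy model the hazard is high in the first weeks, bottoms out in mid-life, and climbs again near the end. Feeding returned or refurbished drives into the pool would plausibly fatten the early-life term even further.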

Interestingly enough, Backblaze secured $5 million in venture funding. Soon after, Costco offered a sale on external HDDs with a limit of five per person. Even with the massive funding, they chose to take advantage of the sale to get the best bang for their buck. As they stated, old habits die hard.

Unfortunately, these drives are included in their failure ratings.

Enclosures

Backblaze also extends their ingenuity to the server rack. They have designed purpose-built Storage Pod enclosures, and share the schematic freely online. This commendable commitment to information sharing also sheds light on their 'failure rate' data.

The Storage Pod is currently on revision 3.0, with two prior incarnations requiring upgrades to deal with a number of design problems, most notably vibration.

Vibration is every hard drive's enemy, and greatly accelerates wear on components. Vibration even has performance implications. A typical desktop HDD experiences a relatively vibration-free existence in a stable environment, and is designed accordingly. One of the major differences in enterprise HDD design is vibration resistance technology. This allows the drive to function well and stand up to the wear and tear of the server chassis and rack.

Installing more HDDs in an enclosure raises the amount of vibration. Backblaze packs 45 HDDs per enclosure for maximum storage density. While the drives are initially exposed to vibration from their neighbors inside the server, once placed into the rack, they are exposed to even more vibration from other servers. This creates the 'perfect storm' of vibration, and the use of consumer drives results in horrendous failure rates, as evidenced by the data from Backblaze.

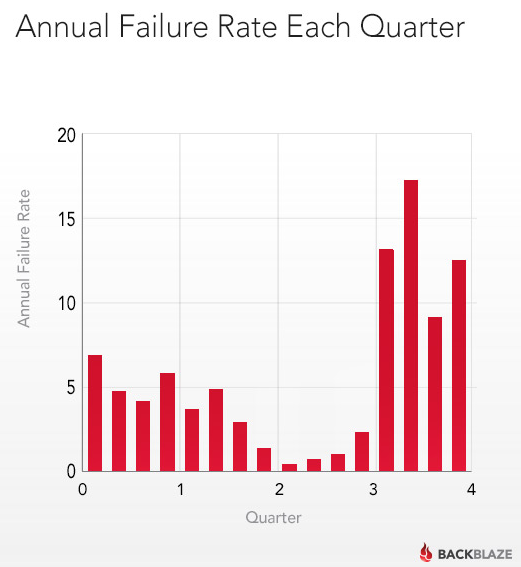

It is no wonder that Backblaze has continued to refine their chassis to provide more resistance to vibration: the early models merely had nylon spacers to dampen vibration. Taking a closer look at their data, we can see that the drives in use the longest suffer the highest failure rates. One likely reason is simple: these older drives are in revision 1.0 of their storage enclosures, which suffer from significant vibration issues that merited a redesign.

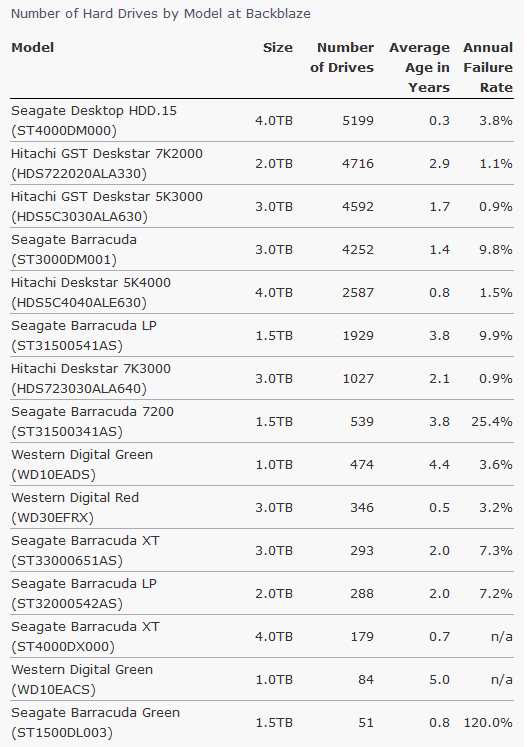

Unfortunately for Seagate, these drives are predominantly from their product lines. This paints them in a very unforgiving light due to obvious chassis issues, with a misleading annual failure rate of 25.4% that would surely put Seagate out of business, if it were realistic.

Backblaze has left a significant amount of information out of the disclosed failure rate data. Segmenting the results by chassis revision would be the responsible approach to information dissemination. We can rest assured that the older drives aren't in the best chassis available; revision 3.0 wasn't released until February 2013.
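To show why segmentation matters, here is a rough sketch. It assumes an annualized failure rate is computed in the common way, as failures per drive-year of service; I am not claiming this is exactly how Backblaze arrived at their figures. The per-chassis records are entirely hypothetical, invented only to illustrate how a single pooled number can hide the effect of the enclosure.

```python
from collections import defaultdict


def annualized_failure_rate(failures, drive_days):
    """Failures per drive-year of service, expressed as a percentage."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    return 100.0 * failures / (drive_days / 365.0)


def afr_by_chassis(rows):
    """Group (revision, failures, drive_days) rows and compute a rate per revision."""
    totals = defaultdict(lambda: [0, 0])
    for revision, failures, drive_days in rows:
        totals[revision][0] += failures
        totals[revision][1] += drive_days
    return {rev: annualized_failure_rate(f, d) for rev, (f, d) in totals.items()}


# HYPOTHETICAL data: (chassis revision, failures, drive-days in service).
records = [
    ("1.0", 120, 180_000),
    ("2.0", 40, 200_000),
    ("3.0", 10, 150_000),
]

pooled = annualized_failure_rate(
    sum(row[1] for row in records),
    sum(row[2] for row in records),
)
segmented = afr_by_chassis(records)

if __name__ == "__main__":
    print(f"pooled: {pooled:.1f}%")
    for revision, rate in sorted(segmented.items()):
        print(f"chassis {revision}: {rate:.1f}%")
```

With made-up inputs like these, the pooled rate sits between the extremes and says nothing about which enclosure the failing drives lived in, which is exactly the detail missing from the published charts.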

Environment and Workloads

Environment

Another issue that affects the lifespan of an HDD is drive temperature. Variations in temperature and humidity have an impact upon drive life. Reading through the Backblaze blog, one comment that caught my eye was on server rack temperature:

... we've observed in the past three years that: 1) hard drives in pods in the top of racks run three degrees warmer on average than pods in the lower shelves; 2) drives in the center of the pod run five degrees warmer than those on the perimeter; 3) pods do not need all six fans - the drives maintain the recommended operating temperature with as few as two fans; and 4) heat doesn't correlate with drive failure (at least in the ranges seen in storage pods).

Backblaze claims that drive temperature doesn't affect drive life. That is counter to the observations of many others, including drive manufacturers. There is a reason for specified temperature ranges for HDDs. Though they are likely within these ranges, the Backblaze drives cannot be directly compared to each other when they run at varying temperatures, let alone to other drives. Once again, the lack of pertinent information makes any real conclusions impossible, and the uneven nature of the test environment spoils the data.

Workloads

Each drive is designed meticulously to provide a tightly-defined service level in its intended environment. These guidelines determine not only the design, but also the type of components used. The most cost-effective drives are designed to deliver exactly the correct performance and longevity in their intended environment, and nothing more. Utilizing robust components above the workload requirements of the drive is wasteful, and adds cost unnecessarily. This design efficiency also means the drives are more likely to fail in untoward conditions.

Backblaze procures the cheapest possible HDDs on the market at all times, regardless of their workload ratings, and then subjects them to a harsh environment that is virtually guaranteed to destroy them. This leads to higher failure rates than observed in the wild. This reflects just how precisely these drives are engineered to fulfil their stated purpose, and nothing more.

Another concern is the direct comparisons between drives, even though they endure varying workloads. Comparing drives of the same make and model is impossible if the same workload isn't applied. Expanding that out to compare different models and manufacturers is even more ridiculous. There is no way to know how many times the drives have spun up and down, and how many times the drives were subjected to varying types of data requests.

Random data requires more movement, and thus creates more wear and tear on delicate HDD heads. Spinning up and down, and also entering and recovering from various sleep states, also wears the drives differently over the course of time. With no real rhyme or reason to the workload distribution, let alone the environment, direct comparisons are impossible.

Only one thing is certain: the drives were subjected to workloads well beyond their design limits.

Final Thoughts

The data from Backblaze should not influence a purchasing decision by any consumer, regardless of what type of drive they are purchasing. The innumerable variables, and lack of documentation, ensure the results are unreliable. Even for the winners, the results aren't good; the failure rates are far higher than those observed in the real world. One should question whether these companies could survive financially with such massive warranty return rates in real-world scenarios.

We covered some of the most obvious holes in the methodology behind the Backblaze comparisons, but there are many more, such as sample size. With varying numbers of drives for each model, it is possible that some bad batches may have made their way into the sample pool, thus further skewing the numbers.

There is no clearer example of this than in their blog post titled "Enterprise Drives: Facts or Fiction?". In this blog post, Backblaze compared 368 enterprise HDDs, presumably purchased as a batch, to 14,719 consumer drives. Along with the fact that a bad batch may skew the numbers, Backblaze admits they subjected the drives to various chassis, temperatures and workloads. This creates data that is essentially worthless for comparative purposes, but when paired with a catchy title, it serves the purpose of attracting attention.
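To put the sample-size gap in perspective, here is a rough sketch using a Wilson score interval for a proportion. The two sample sizes come from the Backblaze post; the 5% failure proportion is a placeholder I picked purely for illustration, and treating each drive as a single pass/fail trial ignores how long each drive was in service.

```python
import math


def wilson_interval(failures, n, z=1.96):
    """Approximate 95% Wilson score interval for a failure proportion."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = failures / n
    denom = 1.0 + z * z / n
    center = (p + z * z / (2.0 * n)) / denom
    half_width = z * math.sqrt(p * (1.0 - p) / n + z * z / (4.0 * n * n)) / denom
    return center - half_width, center + half_width


ASSUMED_RATE = 0.05  # hypothetical failure proportion, not a Backblaze figure

# Sample sizes as reported in the enterprise-versus-consumer comparison.
enterprise = wilson_interval(round(ASSUMED_RATE * 368), 368)
consumer = wilson_interval(round(ASSUMED_RATE * 14_719), 14_719)

enterprise_width = enterprise[1] - enterprise[0]
consumer_width = consumer[1] - consumer[0]

if __name__ == "__main__":
    print(f"368 drives:    {enterprise[0]:.3%} to {enterprise[1]:.3%}")
    print(f"14,719 drives: {consumer[0]:.3%} to {consumer[1]:.3%}")
```

Even under these generous simplifications, the uncertainty around the small sample is several times wider than around the large one, and that is before accounting for a bad batch or the differing chassis, temperatures and workloads.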

The enthusiast in me loves the Backblaze story. They are determined to deliver great value to their customers, and will go to any length to do so. Reading the blog posts about the extreme measures they took was engrossing, and I'm sure they enjoyed rising to the challenge. Their Storage Pod is a compelling design that has been field-tested extensively, and refined to provide an attractive price point per GB of storage.

It is the release of the data, in handy charts and graphs that encourage misrepresentation, which brings out the data-storage stickler in me. HDD manufacturers spend billions of dollars in R&D, and their labs are designed to characterize and measure the reliability and endurance of their storage solutions.

The Backblaze environment is the exact opposite. I do not believe I could dream up worse conditions to study and compare drive reliability. It's hard to believe they plotted this out and convened a meeting to outline a process to buy the cheapest drives imaginable, from all manner of ridiculous sources, install them into varying (and sometimes flawed) chassis, then stack them up and subject them to entirely different workloads and environmental conditions... all with the purpose of determining drive reliability.

Of course that wasn't the intention, but that is how some will interpret the data. In my opinion, the intoxicating allure of media coverage overwhelmed common sense, and Backblaze released these numbers with a catchy title that would attract attention. The tech media is to blame as well, with many posting the information with little or no research. Unfortunately, the Backblaze blog post will be copy/pasted innumerable times for years to come as an authoritative source of data, when it is the furthest thing from a comprehensive study imaginable.