IT/Datacenter & Super Computing News - Page 1

UK government spending $273 million to build its fastest-ever AI supercomputer

The UK government has just announced that it's working on the most powerful supercomputer it has ever built. Known as Isambard-AI, it will be one of the most powerful AI supercomputers in the world once complete.

Isambard-AI will be capable of over 200 quadrillion calculations per second when it powers on at the University of Bristol in summer 2024. Hewlett Packard Enterprise is building the new AI supercomputer, which will be powered by 5,448 NVIDIA GH200 Grace Hopper Superchips and deliver over 21 exaflops of AI performance (over 21 quintillion floating-point operations per second) for AI applications like large language models (LLMs).
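The Isambard-AI figures are easier to compare once they share a scale: 21 exaflops is 21 quintillion (21 × 10^18) operations per second, and 200 quadrillion is 200 petaflops. The short Python sketch below converts the quoted numbers and divides them evenly across the 5,448 superchips. The per-chip values are simple averages, not published specifications, and the AI figure most likely refers to a lower-precision format than the 200-quadrillion figure, so the two are not directly comparable. `to_si_flops()` is a hypothetical helper.

```python
# Sketch: sanity-check the Isambard-AI figures quoted in the article.
# The constants come from the article; to_si_flops() is a hypothetical helper.

SI_PREFIXES = [(1e18, "exa"), (1e15, "peta"), (1e12, "tera"), (1e9, "giga")]


def to_si_flops(flops: float) -> str:
    """Format a FLOP/s figure with the largest SI prefix that fits."""
    for scale, prefix in SI_PREFIXES:
        if flops >= scale:
            return f"{flops / scale:.2f} {prefix}flops"
    return f"{flops:.0f} flops"


NUM_SUPERCHIPS = 5448   # NVIDIA GH200 Grace Hopper Superchips
AI_FLOPS = 21e18        # "over 21 exaflops" of AI performance
CALCS_PER_SEC = 200e15  # "over 200 quadrillion calculations per second"

print("AI total:      ", to_si_flops(AI_FLOPS))                          # 21.00 exaflops
print("AI per chip:   ", to_si_flops(AI_FLOPS / NUM_SUPERCHIPS))         # 3.85 petaflops
print("Calcs total:   ", to_si_flops(CALCS_PER_SEC))                     # 200.00 petaflops
print("Calcs per chip:", to_si_flops(CALCS_PER_SEC / NUM_SUPERCHIPS))    # 36.71 teraflops
```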

The University of Bristol says that Isambard-AI will be 10x faster than the fastest AI supercomputer currently available in the UK. Isambard-AI will also be connected to other UK-based supercomputers, further increasing the combined computing power available. Simon McIntosh-Smith from the University of Bristol said that Isambard-AI "will be one of the most powerful AI systems for open science anywhere".

TSMC working with NVIDIA and Broadcom: 200 new R&D experts for silicon photonics

TSMC is working directly with NVIDIA and Broadcom to speed up R&D into the next step in the semiconductor industry: silicon photonics.

First, what is silicon photonics? The semiconductor industry is moving toward silicon photonics as its next-generation alternative to traditional copper transmission cables, combining laser and silicon technology to deliver high data transfer speeds. Silicon photonics replaces conventional electrical signaling with light, promising more seamless, faster, and more secure data transmission.

ChinaTimes reports that Taiwan Semiconductor Manufacturing Company (TSMC) is putting 200 new R&D specialists to work on silicon photonics for the AI industry. That is why TSMC is teaming up with NVIDIA and Broadcom: the Taiwanese giant is currently developing its Compact Universal Photonic Engine (COUPE), an energy-efficient solution it hopes will drive the technology's large-scale adoption. GlobalFoundries is also working on silicon photonics, but TSMC is ahead of it... which shouldn't surprise anyone who knows how far ahead of the competition TSMC is.

Microsoft is planning to power its data centers with nuclear microreactors

Microsoft is hiring for a new role at the company, Principal Program Manager of Nuclear Technology. Microsoft is going nuclear, with the company planning to implement a global Small Modular Reactor (SMR) and microreactor energy strategy.

With the rise of AI and the exponential increase in data center power requirements, the company is turning to nuclear power, a controversial energy source, for its cloud and AI business.

"The ideal candidate will have experience in the energy industry and a deep understanding of nuclear technologies and regulatory affairs," the job listing reads. It's worth noting that this doesn't mean Microsoft will build massive nuclear power reactors all over the globe; instead, it plans to use specialized microreactors that are compact, transportable, and "plug-and-play."

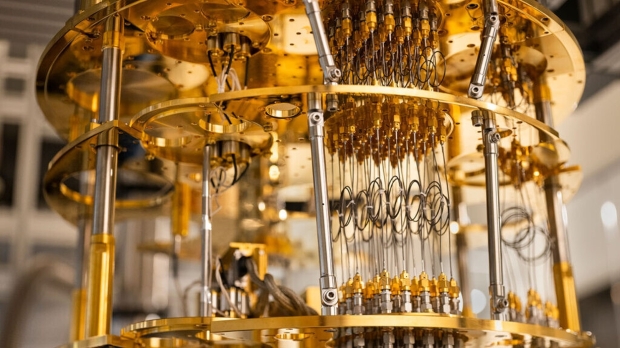

Microsoft plans to build a quantum supercomputer within the next decade

Microsoft has released a scientific-sounding roadmap for building its first quantum supercomputer, based on the research and advances it has made with topological qubits in recent years. Although more work is needed, Krysta Svore, Microsoft's VP of advanced quantum development, told TechCrunch that the company is on track to build a functional quantum supercomputer in less than 10 years.

"We think about our roadmap and the time to the quantum supercomputer in terms of years rather than decades," Krysta Svore said. It's certainly an ambitious roadmap, and quantum computing is an exciting prospect for high-performance computing. Quantum computers use qubits, which can exist in superpositions of states, and the real kicker is that the state space they can represent grows exponentially as more qubits are added: n qubits are described by 2^n amplitudes. Compared with the linear gains from adding bits or cores to a classical computer, it's no wonder quantum computing is seen as the next big frontier.

The issue is that quantum computers can have high error rates, and they need to be kept extremely cold and isolated from even the slightest physical interference.
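To make the exponential growth concrete: the state of n qubits is described by 2^n complex amplitudes, so every extra qubit doubles the size of the state. One practical consequence is the memory a classical computer needs to simulate a quantum one by brute force. The sketch below assumes a naive state-vector simulator that stores each amplitude as a 16-byte complex number (two 64-bit floats); real simulators use many tricks to reduce this, and the helper names are hypothetical.

```python
# Sketch: memory needed to hold a full n-qubit state vector classically.
# Assumption: one complex128 amplitude (16 bytes) per basis state.

BYTES_PER_AMPLITUDE = 16


def num_amplitudes(n_qubits: int) -> int:
    """An n-qubit state is a vector of 2**n complex amplitudes."""
    return 2 ** n_qubits


def statevector_bytes(n_qubits: int) -> int:
    """Bytes a naive simulator needs to store the whole state."""
    return num_amplitudes(n_qubits) * BYTES_PER_AMPLITUDE


def human_bytes(n_bytes: float) -> str:
    """Format a byte count with binary (1024-based) units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB"):
        if n_bytes < 1024 or unit == "PiB":
            return f"{n_bytes:g} {unit}"
        n_bytes /= 1024


for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits: {num_amplitudes(n):,} amplitudes, {human_bytes(statevector_bytes(n))}")
```

Every 10 additional qubits multiply the memory by 1,024 (16 KiB at 10 qubits, 16 PiB at 50). A larger state space does not automatically mean an exponential speedup for every problem, though; any quantum advantage depends on the algorithm.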

Students use 16 x NVIDIA Jetson Nano modules to make desktop supercomputer

Two students at Southern Methodist University in Dallas, Texas, have used NVIDIA's nifty little Jetson Nano modules to create a mini supercomputer.

The desktop supercomputer is powered by 16 x NVIDIA Jetson Nano modules, which the students built into a clear acrylic case along with the required power supplies, cooling fans to get rid of the heat, and a network switch to connect everything together.

Southern Methodist University received outside funding to design the NVIDIA Jetson Nano-powered desktop supercomputer, which will be used to teach students how a computer cluster works. Designing and building it took four months of learning and labor, during which the students also learned about networking.
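A cluster like this is typically taught with a divide-and-combine pattern: split a problem into pieces, let each node compute its piece, then combine (reduce) the partial results. The Python sketch below mimics that pattern in a single process, in the spirit of the classic pi-integration example that ships with MPI implementations such as MPICH. Threads stand in for the 16 Jetson Nano modules, `NUM_INTERVALS` is an arbitrary choice, and none of this is the students' actual software.

```python
# Sketch: scatter/compute/reduce, the pattern a teaching cluster demonstrates.
# Each of 16 simulated "nodes" integrates its share of 4/(1+x^2) over [0, 1]
# with the midpoint rule; summing the partial results approximates pi.
import math
from concurrent.futures import ThreadPoolExecutor

NUM_NODES = 16          # one worker per Jetson Nano module in the article
NUM_INTERVALS = 100_000  # hypothetical problem size


def partial_pi(rank: int, num_nodes: int, n: int) -> float:
    """Midpoint-rule sum over every num_nodes-th interval, starting at rank."""
    h = 1.0 / n
    total = 0.0
    for i in range(rank, n, num_nodes):  # round-robin split of the work
        x = h * (i + 0.5)
        total += 4.0 / (1.0 + x * x)
    return h * total


def cluster_pi(num_nodes: int = NUM_NODES, n: int = NUM_INTERVALS) -> float:
    """Scatter ranks to workers, then reduce their partial sums in rank order."""
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partials = pool.map(lambda rank: partial_pi(rank, num_nodes, n), range(num_nodes))
        return sum(partials)


estimate = cluster_pi()
print(f"pi ~ {estimate:.10f} (error {abs(estimate - math.pi):.2e})")
```

On a real cluster each rank would run as a separate process on its own node, and the final sum would be an MPI reduction over the network switch. In CPython the threads here do not run the arithmetic in parallel because of the global interpreter lock, so the sketch illustrates the data flow rather than the speedup.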

Weather just got a lot more accurate after a big supercomputer upgrade

The National Oceanic and Atmospheric Administration has just replaced two outdated supercomputers with new systems that will bring massive upgrades to weather forecasting.

The National Oceanic and Atmospheric Administration (NOAA) announced back in 2020 that it would replace some of its older, now-outdated supercomputers used to run weather forecasts and predict weather patterns. NOAA has now replaced those older Cray and IBM systems, located in Reston, Virginia, and Orlando, Florida, with two Hewlett Packard Enterprise (HPE) Cray supercomputers.

These two supercomputers come with 2,560 AMD Epyc Rome 64-core 7742 server CPUs that collectively provide 327,680 cores that are capable of operating up to 12.1 petaflops. This new setup is approximately three times faster than what NOAA was previously using, and with the new upgraded power, the agency believes it will be able to provide more accurate and detailed weather forecasts to the public. Notably, the two new NOAA supercomputer systems are called Dogwood and Cactus, and rank as the 49th and 50th fastest supercomputers in the world.

A new supercomputer can run 'brain-scale' AI, rivaling human brains

The latest generation of Sunway supercomputer rivals the performance of Frontier, which was recently labelled the world's most powerful supercomputer.

According to the researchers behind the latest Sunway supercomputer, the Sunway has over 37 million CPU cores, quadrupling the number found in Frontier, nine petabytes of memory, and 96,000 semi-independent computer systems referred to as 'nodes,' that can exchange data at rates greater than 23 petabytes per second. The Sunway is capable of exascale computing, allegedly up to 5.3 exaFLOPS (5.3 quintillion floating-point operations per second).
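Dividing the quoted Sunway totals by the node count gives a rough feel for what each of the 96,000 nodes contributes. The sketch below computes simple averages from the article's numbers; they are not published per-node specifications. It assumes "over 37 million" means exactly 37 million, that petabytes are decimal (10^15 bytes), and it takes the 5.3 exaFLOPS figure as claimed.

```python
# Sketch: per-node averages derived from the Sunway totals in the article.
# Assumptions: 37e6 cores exactly, decimal petabytes, even spread across nodes.

TOTAL_CORES = 37e6
NUM_NODES = 96_000
MEMORY_BYTES = 9e15   # "nine petabytes of memory"
PEAK_FLOPS = 5.3e18   # "up to 5.3 exaFLOPS" (as claimed)


def per_node(total: float, nodes: int = NUM_NODES) -> float:
    """Average share of a system-wide total attributable to one node."""
    return total / nodes


print(f"cores per node:  ~{per_node(TOTAL_CORES):.0f}")              # ~385
print(f"memory per node: ~{per_node(MEMORY_BYTES) / 1e9:.2f} GB")    # ~93.75 GB
print(f"peak per node:   ~{per_node(PEAK_FLOPS) / 1e12:.1f} TFLOPS")  # ~55.2 TFLOPS
```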

The research team used the Sunway to train an artificial intelligence (AI) model named bagualu (meaning "alchemist's pot") with 174 trillion parameters. According to the South China Morning Post, this rivals the number of synapses in the human brain, though some estimates put the true number of synapses as high as 1,000 trillion.
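A parameter count only becomes concrete once you decide how many bytes each parameter occupies. The sketch below estimates the footprint of 174 trillion parameters against the Sunway's nine petabytes of memory under two illustrative assumptions: 2 bytes per parameter for FP16/BF16 weights, and roughly 16 bytes per parameter, a common rule of thumb for mixed-precision training with the Adam optimizer. Activations are ignored, and it says nothing about how the bagualu team actually stored or trained the model.

```python
# Sketch: rough memory footprint of a 174-trillion-parameter model.
# Assumptions (not from the article): 2 B/param for FP16/BF16 weights,
# ~16 B/param for mixed-precision Adam training; activations ignored.

NUM_PARAMS = 174e12   # bagualu's parameter count
SYSTEM_MEMORY = 9e15  # Sunway's nine petabytes (decimal PB assumed)


def model_bytes(num_params: float, bytes_per_param: float) -> float:
    """Total bytes if every parameter costs bytes_per_param."""
    return num_params * bytes_per_param


for label, bpp in (("FP16/BF16 weights only", 2), ("mixed-precision Adam training", 16)):
    footprint = model_bytes(NUM_PARAMS, bpp)
    share = footprint / SYSTEM_MEMORY
    print(f"{label}: {footprint / 1e12:,.0f} TB ({share:.1%} of system memory)")
```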

NVIDIA Grace Superchip powers Atos $160 million supercomputer in Spain

Atos is building the new MareNostrum 5 supercomputer under a new $160 million contract signed as part of the EuroHPC JU initiative, and once it's built, it will be the fastest supercomputer in the European Union.

NVIDIA's new Grace Superchips will power the new Atos MareNostrum 5 supercomputer, which will be built in Spain and delivered to the Barcelona Supercomputing Centre (BSC) in 2023. The system will pack 314 petaflops of FP64 computing performance.

According to HPCwire, the compute and storage partitions, as well as the rest of the MareNostrum 5 supercomputer, are expected to be "operational within the year", while NVIDIA said the system is "expected to enter deployment in 2023". BSC says that the new MareNostrum 5 supercomputer will be "fully powered with green energy, and will utilize heat reuse technology".

NREL's Kestrel Supercomputer: AMD, Intel, and NVIDIA minajatwa

Details of the new Kestrel supercomputer have been unveiled, with the US Department of Energy's (DOE) National Renewable Energy Laboratory (NREL) packing some serious horsepower into its new system.

We're looking at a Minajatwa of silicon between Intel Sapphire Rapids Xeon CPUs, AMD EPYC "Genoa" CPUs, and NVIDIA H100 GPU accelerators. We have 44 petaflops of peak compute performance, up from just 8 petaflops on the previous-gen Eagle supercomputer.

The CPU upgrades are big: Intel Sapphire Rapids Xeon CPUs with 52 cores and 104 threads each, up from the 18 cores and 36 threads of the Intel Xeon Gold (Skylake) CPUs in the Eagle supercomputer.
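Putting the Kestrel and Eagle figures side by side shows how the jump breaks down. The sketch below only divides the numbers quoted in the article; `ratio()` is a trivial hypothetical helper.

```python
# Sketch: generation-over-generation ratios from the Kestrel vs. Eagle
# figures quoted in the article.

EAGLE_PEAK_PFLOPS = 8
KESTREL_PEAK_PFLOPS = 44
EAGLE_CORES_PER_CPU = 18     # Intel Xeon Gold (Skylake)
KESTREL_CORES_PER_CPU = 52   # Intel Sapphire Rapids Xeon


def ratio(new: float, old: float) -> float:
    """How many times larger the new figure is than the old one."""
    return new / old


print(f"peak compute:  {ratio(KESTREL_PEAK_PFLOPS, EAGLE_PEAK_PFLOPS):.1f}x")      # 5.5x
print(f"cores per CPU: {ratio(KESTREL_CORES_PER_CPU, EAGLE_CORES_PER_CPU):.2f}x")  # 2.89x
```

Peak compute grows by 5.5x while cores per CPU grow by under 3x, which suggests the rest of the gain comes from elsewhere (for example the NVIDIA H100 accelerators, newer cores, or a different node count). The article does not break this down, so treat that as an inference.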

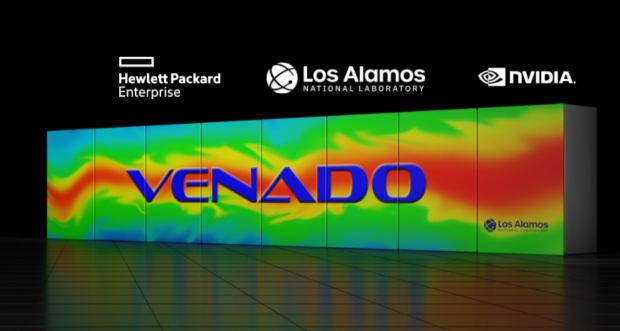

NVIDIA Grace CPU + Grace Hopper Superchip power 'Venado' supercomputer

It's a huge day for supercomputers, with AMD powering the world's fastest supercomputer -- the Oak Ridge National Laboratory (ORNL) "Frontier" supercomputer -- and now NVIDIA has announced it is powering the "Venado" supercomputer.

NVIDIA announced last week that Taiwanese tech giants were preparing NVIDIA Grace CPU-powered servers, and now we have the first system: the new Venado supercomputer being built by Los Alamos National Laboratory. Venado is capable of a huge 10 exaflops of peak AI performance.

It feels like a little bit of "me too" with NVIDIA's announcement of the Venado supercomputer, but more concrete details will be provided at the International Supercomputing Conference in Hamburg, Germany later today. The new AMD CPU + GPU-powered Frontier supercomputer was teased yesterday, and now we have the NVIDIA CPU + GPU-powered Venado supercomputer here today.
