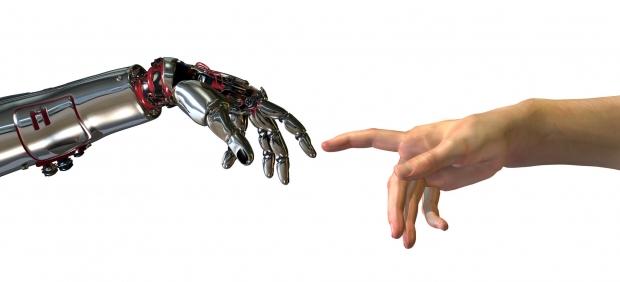

Research into artificial intelligence (AI) continues to advance, and there is growing concern that uncontrolled AI could have a significant negative impact on humanity. In response, the Future of Life Institute (FLI) is asking AI researchers to sign an open letter aimed at protecting humans from intelligent machines.

"We recommend expanded research aimed at ensuring that increasingly capable AI systems are robust and beneficial: our AI systems must do what we want them to do," the letter reads. "The attached research priorities document gives many examples of such research directions that can help maximize the societal benefit of AI. This research is by necessity interdisciplinary, because it involves both society and AI. It ranges from economics, law and philosophy to computer security, formal methods and, of course, various branches of AI itself."

AI is already being used in autonomous weapons systems, robots and humanoids, and autonomous vehicles, raising serious ethical questions that must be answered.

Notable signatories include Tesla CEO Elon Musk, Professor Stephen Hawking, Microsoft research director Eric Horvitz, and numerous other leading professors, engineering students, and private sector leaders.